ultralytics 8.0.100 add Mosaic9() augmentation (#2605)

Co-authored-by: Ayush Chaurasia <ayush.chaurarsia@gmail.com>
Co-authored-by: Tommy in Tongji <36354458+TommyZihao@users.noreply.github.com>
Co-authored-by: pre-commit-ci[bot] <66853113+pre-commit-ci[bot]@users.noreply.github.com>
Co-authored-by: BIGBOSS-FOX <47949596+BIGBOSS-FOX@users.noreply.github.com>
Co-authored-by: xbkaishui <xxkaishui@gmail.com>

@@ -49,4 +49,19 @@ model.predict("path/to/image.jpg") # predict

| Validation | :heavy_check_mark: |
| Training   | :x: (Coming soon)  |

For more information about the RT-DETR model, please refer to the [original paper](https://arxiv.org/abs/2304.08069) and the [PaddleDetection repository](https://github.com/PaddlePaddle/PaddleDetection).

## Citations and Acknowledgements

If you use RT-DETR in your research or development work, please cite the [original paper](https://arxiv.org/abs/2304.08069):

```bibtex
@misc{lv2023detrs,
      title={DETRs Beat YOLOs on Real-time Object Detection},
      author={Wenyu Lv and Shangliang Xu and Yian Zhao and Guanzhong Wang and Jinman Wei and Cheng Cui and Yuning Du and Qingqing Dang and Yi Liu},
      year={2023},
      eprint={2304.08069},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}
```

We would like to acknowledge Baidu's [PaddlePaddle](https://github.com/PaddlePaddle/PaddleDetection) team for creating and maintaining this valuable resource for the computer vision community.

@@ -9,7 +9,8 @@ description: Learn about the Segment Anything Model (SAM) and how it provides pr

The Segment Anything Model (SAM) is a groundbreaking image segmentation model that enables promptable segmentation with real-time performance. It forms the foundation for the Segment Anything project, which introduces a new task, model, and dataset for image segmentation. SAM is designed to be promptable, allowing it to transfer zero-shot to new image distributions and tasks. The model is trained on the [SA-1B dataset](https://ai.facebook.com/datasets/segment-anything/), which contains over 1 billion masks on 11 million licensed and privacy-respecting images. SAM has demonstrated impressive zero-shot performance, often surpassing prior fully supervised results.

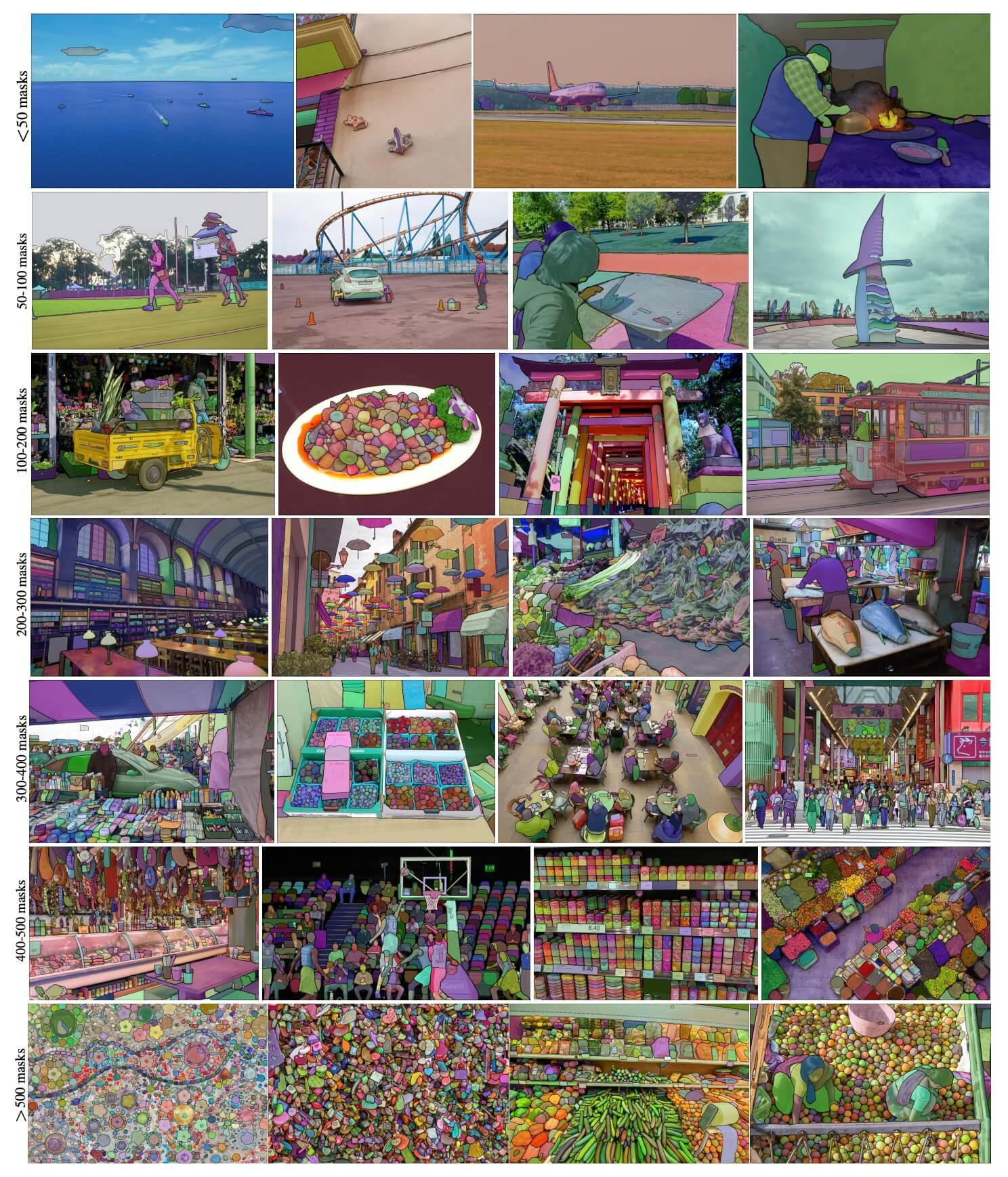

Example images with overlaid masks from our newly introduced dataset, SA-1B. SA-1B contains 11M diverse, high-resolution, licensed, and privacy-protecting images and 1.1B high-quality segmentation masks. These masks were annotated fully automatically by SAM and, as verified by human ratings and numerous experiments, are of high quality and diversity. Images are grouped by number of masks per image for visualization (there are ∼100 masks per image on average).

## Key Features

@@ -47,7 +48,33 @@ model.predict('path/to/image.jpg') # predict

| Validation | :x: |
| Training   | :x: |

## Auto-Annotation

Auto-annotation is an essential feature that allows you to generate a [segmentation dataset](https://docs.ultralytics.com/datasets/segment) using a pre-trained detection model. It enables you to quickly and accurately annotate a large number of images without the need for manual labeling, saving time and effort.

### Generate Segmentation Dataset Using a Detection Model

To auto-annotate your dataset using the Ultralytics framework, you can use the `auto_annotate` function as shown below:

```python
from ultralytics.yolo.data import auto_annotate

auto_annotate(data="path/to/images", det_model="yolov8x.pt", sam_model="sam_b.pt")
```

| Argument   | Type                | Description                                                                                             | Default      |
|------------|---------------------|---------------------------------------------------------------------------------------------------------|--------------|
| data       | str                 | Path to a folder containing images to be annotated.                                                     |              |
| det_model  | str, optional       | Pre-trained YOLO detection model. Defaults to 'yolov8x.pt'.                                             | 'yolov8x.pt' |
| sam_model  | str, optional       | Pre-trained SAM segmentation model. Defaults to 'sam_b.pt'.                                             | 'sam_b.pt'   |
| device     | str, optional       | Device to run the models on. Defaults to an empty string (CPU or GPU, if available).                    | ''           |
| output_dir | str, None, optional | Directory to save the annotated results. Defaults to a 'labels' folder in the same directory as 'data'. | None         |

The `auto_annotate` function takes the path to your images, along with optional arguments for specifying the pre-trained detection and SAM segmentation models, the device to run the models on, and the output directory for saving the annotated results.
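
The documented default for `output_dir` can be sketched in plain Python. The helper below is hypothetical (`resolve_output_dir` is not part of Ultralytics) and assumes the table's wording means a `labels` folder placed next to the `data` folder; check the library source for the exact behavior.

```python
from pathlib import Path


def resolve_output_dir(data, output_dir=None):
    """Hypothetical helper mirroring the documented default for output_dir."""
    if output_dir is None:
        # Assumption: 'labels' is created as a sibling of the image folder.
        return Path(data).parent / "labels"
    return Path(output_dir)


# No output_dir given: falls back to a 'labels' folder beside the images.
print(resolve_output_dir("datasets/demo/images"))
# Explicit output_dir: used as-is.
print(resolve_output_dir("datasets/demo/images", "runs/annotations"))
```

Passing `output_dir` explicitly avoids any ambiguity about where the label files end up.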

By leveraging the power of pre-trained models, auto-annotation can significantly reduce the time and effort required for creating high-quality segmentation datasets. This feature is particularly useful for researchers and developers working with large image collections, as it allows them to focus on model development and evaluation rather than manual annotation.
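
Each label file in a segmentation dataset stores one object per line: a class index followed by the polygon's normalized x y coordinates. The sketch below shows that layout for a single polygon. `polygon_to_label_line` and the sample values are illustrative assumptions, not the code that `auto_annotate` runs internally.

```python
def polygon_to_label_line(class_id, polygon, width, height):
    """Format one polygon given in pixels as a YOLO segmentation label line."""
    values = []
    for x, y in polygon:
        # Normalize pixel coordinates to the 0-1 range using the image size.
        values.append(f"{x / width:.6f}")
        values.append(f"{y / height:.6f}")
    return f"{class_id} " + " ".join(values)


# A triangle of class 0 in a hypothetical 100x200 image.
line = polygon_to_label_line(0, [(10, 20), (30, 20), (30, 40)], width=100, height=200)
print(line)
```

Normalized coordinates keep the labels valid if the images are later resized.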

## Citations and Acknowledgements

If you use SAM in your research or development work, please cite the following paper:
