ultralytics 8.0.84 results JSON outputs (#2171)

Co-authored-by: Laughing <61612323+Laughing-q@users.noreply.github.com>
Co-authored-by: Yonghye Kwon <developer.0hye@gmail.com>
Co-authored-by: Ayush Chaurasia <ayush.chaurarsia@gmail.com>
Co-authored-by: pre-commit-ci[bot] <66853113+pre-commit-ci[bot]@users.noreply.github.com>
Co-authored-by: Talia Bender <85292283+taliabender@users.noreply.github.com>


2

.github/workflows/greetings.yml

vendored

@ -30,7 +30,7 @@ jobs:

|

|||||||

|

|

||||||

If this is a 🐛 Bug Report, please provide a [minimum reproducible example](https://stackoverflow.com/help/minimal-reproducible-example) to help us debug it.

|

If this is a 🐛 Bug Report, please provide a [minimum reproducible example](https://stackoverflow.com/help/minimal-reproducible-example) to help us debug it.

|

||||||

|

|

||||||

If this is a custom training ❓ Question, please provide as much information as possible, including dataset image examples and training logs, and verify you are following our [Tips for Best Training Results](https://docs.ultralytics.com/yolov5/tips_for_best_training_results/).

|

If this is a custom training ❓ Question, please provide as much information as possible, including dataset image examples and training logs, and verify you are following our [Tips for Best Training Results](https://docs.ultralytics.com/yolov5/tutorials/tips_for_best_training_results/).

|

||||||

|

|

||||||

## Install

|

## Install

|

||||||

|

|

||||||

|

|||||||

4

.github/workflows/links.yml

vendored

@ -23,7 +23,7 @@ jobs:

|

|||||||

with:

|

with:

|

||||||

fail: true

|

fail: true

|

||||||

# accept 429(Instagram, 'too many requests'), 999(LinkedIn, 'unknown status code'), Timeout(Twitter)

|

# accept 429(Instagram, 'too many requests'), 999(LinkedIn, 'unknown status code'), Timeout(Twitter)

|

||||||

args: --accept 429,999 --exclude-loopback --exclude twitter.com --verbose --no-progress './**/*.md' './**/*.html'

|

args: --accept 429,999 --exclude-loopback --exclude twitter.com --exclude-mail './**/*.md' './**/*.html'

|

||||||

env:

|

env:

|

||||||

GITHUB_TOKEN: ${{secrets.GITHUB_TOKEN}}

|

GITHUB_TOKEN: ${{secrets.GITHUB_TOKEN}}

|

||||||

|

|

||||||

@ -33,6 +33,6 @@ jobs:

|

|||||||

with:

|

with:

|

||||||

fail: true

|

fail: true

|

||||||

# accept 429(Instagram, 'too many requests'), 999(LinkedIn, 'unknown status code'), Timeout(Twitter)

|

# accept 429(Instagram, 'too many requests'), 999(LinkedIn, 'unknown status code'), Timeout(Twitter)

|

||||||

args: --accept 429,999 --exclude-loopback --exclude twitter.com,url.com --verbose --no-progress './**/*.md' './**/*.html' './**/*.yml' './**/*.yaml' './**/*.py' './**/*.ipynb'

|

args: --accept 429,999 --exclude-loopback --exclude twitter.com,url.com --exclude-mail './**/*.md' './**/*.html' './**/*.yml' './**/*.yaml' './**/*.py' './**/*.ipynb'

|

||||||

env:

|

env:

|

||||||

GITHUB_TOKEN: ${{secrets.GITHUB_TOKEN}}

|

GITHUB_TOKEN: ${{secrets.GITHUB_TOKEN}}

|

||||||

|

|||||||

12

README.md

@ -18,7 +18,9 @@

|

|||||||

</div>

|

</div>

|

||||||

<br>

|

<br>

|

||||||

|

|

||||||

[Ultralytics YOLOv8](https://github.com/ultralytics/ultralytics), developed by [Ultralytics](https://ultralytics.com), is a cutting-edge, state-of-the-art (SOTA) model that builds upon the success of previous YOLO versions and introduces new features and improvements to further boost performance and flexibility. YOLOv8 is designed to be fast, accurate, and easy to use, making it an excellent choice for a wide range of object detection, image segmentation and image classification tasks.

|

[Ultralytics](https://ultralytics.com) [YOLOv8](https://github.com/ultralytics/ultralytics) is a cutting-edge, state-of-the-art (SOTA) model that builds upon the success of previous YOLO versions and introduces new features and improvements to further boost performance and flexibility. YOLOv8 is designed to be fast, accurate, and easy to use, making it an excellent choice for a wide range of object detection and tracking, instance segmentation, image classification and pose estimation tasks.

|

||||||

|

|

||||||

|

We hope that the resources here will help you get the most out of YOLOv8. Please browse the YOLOv8 <a href="https://docs.ultralytics.com/">Docs</a> for details, raise an issue on <a href="https://github.com/ultralytics/ultralytics">GitHub</a> for support, and join our <a href="https://discord.gg/n6cFeSPZdD">Discord</a> community for questions and discussions!

|

||||||

|

|

||||||

To request an Enterprise License please complete the form at [Ultralytics Licensing](https://ultralytics.com/license).

|

To request an Enterprise License please complete the form at [Ultralytics Licensing](https://ultralytics.com/license).

|

||||||

|

|

||||||

@ -42,6 +44,9 @@ To request an Enterprise License please complete the form at [Ultralytics Licens

|

|||||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

||||||

<a href="https://www.instagram.com/ultralytics/" style="text-decoration:none;">

|

<a href="https://www.instagram.com/ultralytics/" style="text-decoration:none;">

|

||||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-instagram.png" width="2%" alt="" /></a>

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-instagram.png" width="2%" alt="" /></a>

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

||||||

|

<a href="https://discord.gg/n6cFeSPZdD" style="text-decoration:none;">

|

||||||

|

<img src="https://github.com/ultralytics/assets/blob/main/social/logo-social-discord.png" width="2%" alt="" /></a>

|

||||||

</div>

|

</div>

|

||||||

</div>

|

</div>

|

||||||

|

|

||||||

@ -230,7 +235,7 @@ YOLOv8 is available under two different licenses:

|

|||||||

|

|

||||||

## <div align="center">Contact</div>

|

## <div align="center">Contact</div>

|

||||||

|

|

||||||

For YOLOv8 bug reports and feature requests please visit [GitHub Issues](https://github.com/ultralytics/ultralytics/issues) or the [Ultralytics Community Forum](https://community.ultralytics.com/).

|

For YOLOv8 bug reports and feature requests please visit [GitHub Issues](https://github.com/ultralytics/ultralytics/issues), and join our [Discord](https://discord.gg/n6cFeSPZdD) community for questions and discussions!

|

||||||

|

|

||||||

<br>

|

<br>

|

||||||

<div align="center">

|

<div align="center">

|

||||||

@ -251,4 +256,7 @@ For YOLOv8 bug reports and feature requests please visit [GitHub Issues](https:/

|

|||||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="" />

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="" />

|

||||||

<a href="https://www.instagram.com/ultralytics/" style="text-decoration:none;">

|

<a href="https://www.instagram.com/ultralytics/" style="text-decoration:none;">

|

||||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-instagram.png" width="3%" alt="" /></a>

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-instagram.png" width="3%" alt="" /></a>

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="" />

|

||||||

|

<a href="https://discord.gg/n6cFeSPZdD" style="text-decoration:none;">

|

||||||

|

<img src="https://github.com/ultralytics/assets/blob/main/social/logo-social-discord.png" width="3%" alt="" /></a>

|

||||||

</div>

|

</div>

|

||||||

|

|||||||

@ -18,9 +18,11 @@

|

|||||||

</div>

|

</div>

|

||||||

<br>

|

<br>

|

||||||

|

|

||||||

[Ultralytics YOLOv8](https://github.com/ultralytics/ultralytics),由 [Ultralytics](https://ultralytics.com) 开发,是一种尖端的、最先进(SOTA)的模型,它在之前 YOLO 版本的成功基础上进行了建设,并引入了新的特性和改进,以进一步提高性能和灵活性。YOLOv8 旨在快速、准确且易于使用,使其成为广泛的对象检测、图像分割和图像分类任务的绝佳选择。

|

[Ultralytics](https://ultralytics.com) [YOLOv8](https://github.com/ultralytics/ultralytics) 是一款前沿、最先进(SOTA)的模型,基于先前 YOLO 版本的成功,引入了新功能和改进,进一步提升性能和灵活性。YOLOv8 设计快速、准确且易于使用,使其成为各种物体检测与跟踪、实例分割、图像分类和姿态估计任务的绝佳选择。

|

||||||

|

|

||||||

如需申请企业许可,请在 [Ultralytics 授权](https://ultralytics.com/license) 完成表格。

|

我们希望这里的资源能帮助您充分利用 YOLOv8。请浏览 YOLOv8 <a href="https://docs.ultralytics.com/">文档</a> 了解详细信息,在 <a href="https://github.com/ultralytics/ultralytics">GitHub</a> 上提交问题以获得支持,并加入我们的 <a href="https://discord.gg/n6cFeSPZdD">Discord</a> 社区进行问题和讨论!

|

||||||

|

|

||||||

|

如需申请企业许可,请在 [Ultralytics Licensing](https://ultralytics.com/license) 处填写表格

|

||||||

|

|

||||||

<img width="100%" src="https://raw.githubusercontent.com/ultralytics/assets/main/yolov8/yolo-comparison-plots.png"></a>

|

<img width="100%" src="https://raw.githubusercontent.com/ultralytics/assets/main/yolov8/yolo-comparison-plots.png"></a>

|

||||||

|

|

||||||

@ -42,6 +44,9 @@

|

|||||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

||||||

<a href="https://www.instagram.com/ultralytics/" style="text-decoration:none;">

|

<a href="https://www.instagram.com/ultralytics/" style="text-decoration:none;">

|

||||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-instagram.png" width="2%" alt="" /></a>

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-instagram.png" width="2%" alt="" /></a>

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

||||||

|

<a href="https://discord.gg/n6cFeSPZdD" style="text-decoration:none;">

|

||||||

|

<img src="https://github.com/ultralytics/assets/blob/main/social/logo-social-discord.png" width="2%" alt="" /></a>

|

||||||

</div>

|

</div>

|

||||||

</div>

|

</div>

|

||||||

|

|

||||||

@ -229,7 +234,7 @@ YOLOv8 提供两种不同的许可证:

|

|||||||

|

|

||||||

## <div align="center">联系方式</div>

|

## <div align="center">联系方式</div>

|

||||||

|

|

||||||

如需报告 YOLOv8 的错误或提出功能需求,请访问 [GitHub Issues](https://github.com/ultralytics/ultralytics/issues) 或 [Ultralytics 社区论坛](https://community.ultralytics.com/)。

|

对于 YOLOv8 的错误报告和功能请求,请访问 [GitHub Issues](https://github.com/ultralytics/ultralytics/issues),并加入我们的 [Discord](https://discord.gg/n6cFeSPZdD) 社区进行问题和讨论!

|

||||||

|

|

||||||

<br>

|

<br>

|

||||||

<div align="center">

|

<div align="center">

|

||||||

@ -250,4 +255,6 @@ YOLOv8 提供两种不同的许可证:

|

|||||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="" />

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="" />

|

||||||

<a href="https://www.instagram.com/ultralytics/" style="text-decoration:none;">

|

<a href="https://www.instagram.com/ultralytics/" style="text-decoration:none;">

|

||||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-instagram.png" width="3%" alt="" /></a>

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-instagram.png" width="3%" alt="" /></a>

|

||||||

|

<a href="https://discord.gg/n6cFeSPZdD" style="text-decoration:none;">

|

||||||

|

<img src="https://github.com/ultralytics/assets/blob/main/social/logo-social-discord.png" width="3%" alt="" /></a>

|

||||||

</div>

|

</div>

|

||||||

|

|||||||

425

docs/inference_api.md

Normal file

@ -0,0 +1,425 @@

|

|||||||

|

# YOLO Inference API (UNDER CONSTRUCTION)

|

||||||

|

|

||||||

|

The YOLO Inference API allows you to access the YOLOv8 object detection capabilities via a RESTful API. This enables you to run object detection on images without the need to install and set up the YOLOv8 environment locally.

|

||||||

|

|

||||||

|

## API URL

|

||||||

|

|

||||||

|

The API URL is the address used to access the YOLO Inference API. In this case, the base URL is:

|

||||||

|

|

||||||

|

```

|

||||||

|

https://api.ultralytics.com/inference/v1

|

||||||

|

```

|

||||||

|

|

||||||

|

To access the API with a specific model and your API key, you can include them as query parameters in the API URL. The `model` parameter refers to the `MODEL_ID` you want to use for inference, and the `key` parameter corresponds to your `API_KEY`.

|

||||||

|

|

||||||

|

The complete API URL with the model and API key parameters would be:

|

||||||

|

|

||||||

|

```

|

||||||

|

https://api.ultralytics.com/inference/v1?model=MODEL_ID&key=API_KEY

|

||||||

|

```

|

||||||

|

|

||||||

|

Replace `MODEL_ID` with the ID of the model you want to use and `API_KEY` with your actual API key from [https://hub.ultralytics.com/settings?tab=api+keys](https://hub.ultralytics.com/settings?tab=api+keys).
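As a minimal sketch of how the complete URL above could be assembled in code, the helper below uses the standard library's `urlencode` so that the parameters are joined with `&` and escaped correctly. The name `build_inference_url` is hypothetical and not part of any Ultralytics package; only the base URL and the `model` and `key` parameter names come from this page.

```python
from urllib.parse import urlencode

BASE_URL = "https://api.ultralytics.com/inference/v1"


def build_inference_url(model_id: str, api_key: str, **extra: str) -> str:
    """Return the base URL with model, key and any extra arguments as a query string.

    Hypothetical helper for illustration only.
    """
    query = {"model": model_id, "key": api_key, **extra}
    return f"{BASE_URL}?{urlencode(query)}"


print(build_inference_url("MODEL_ID", "API_KEY"))
```

Extra keyword arguments are appended as further query parameters, so the same helper would also cover optional arguments if the API accepts them.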

|

||||||

|

|

||||||

|

## Example Usage in Python

|

||||||

|

|

||||||

|

To access the YOLO Inference API with the specified model and API key using Python, you can use the following code:

|

||||||

|

|

||||||

|

```python

|

||||||

|

import requests

|

||||||

|

|

||||||

|

api_key = "API_KEY"

|

||||||

|

model_id = "MODEL_ID"

|

||||||

|

url = f"https://api.ultralytics.com/inference/v1?model={model_id}&key={api_key}"

|

||||||

|

image_path = "image.jpg"

|

||||||

|

|

||||||

|

with open(image_path, "rb") as image_file:

|

||||||

|

files = {"image": image_file}

|

||||||

|

response = requests.post(url, files=files)

|

||||||

|

|

||||||

|

print(response.text)

|

||||||

|

```

|

||||||

|

|

||||||

|

In this example, replace `API_KEY` with your actual API key, `MODEL_ID` with the desired model ID, and `image.jpg` with the path to the image you want to analyze.

|

||||||

|

|

||||||

|

|

||||||

|

## Example Usage with CLI

|

||||||

|

|

||||||

|

You can use the YOLO Inference API with the command-line interface (CLI) by utilizing the `curl` command. Replace `API_KEY` with your actual API key, `MODEL_ID` with the desired model ID, and `image.jpg` with the path to the image you want to analyze:

|

||||||

|

|

||||||

|

```commandline

|

||||||

|

curl -X POST -F image=@image.jpg "https://api.ultralytics.com/inference/v1?model=MODEL_ID&key=API_KEY"

|

||||||

|

```

|

||||||

|

|

||||||

|

This command sends a POST request to the YOLO Inference API with the specified `model` and `key` parameters in the URL, along with the image file specified by `@image.jpg`.

|

||||||

|

|

||||||

|

## Passing Arguments

|

||||||

|

|

||||||

|

Here's an example of passing the `model`, `key`, and `normalize` arguments via the API URL using the `requests` library in Python:

|

||||||

|

|

||||||

|

```python

|

||||||

|

import requests

|

||||||

|

|

||||||

|

api_key = "API_KEY"

|

||||||

|

model_id = "MODEL_ID"

|

||||||

|

url = "https://api.ultralytics.com/inference/v1"

|

||||||

|

|

||||||

|

# Define your query parameters

|

||||||

|

params = {

|

||||||

|

"key": api_key,

|

||||||

|

"model": model_id,

|

||||||

|

"normalize": "True"

|

||||||

|

}

|

||||||

|

|

||||||

|

image_path = "image.jpg"

|

||||||

|

|

||||||

|

with open(image_path, "rb") as image_file:

|

||||||

|

files = {"image": image_file}

|

||||||

|

response = requests.post(url, files=files, params=params)

|

||||||

|

|

||||||

|

print(response.text)

|

||||||

|

```

|

||||||

|

|

||||||

|

In this example, the `params` dictionary contains the query parameters `key`, `model`, and `normalize`, which tells the API to return all values in normalized image coordinates from 0 to 1. The `normalize` parameter is set to `"True"` as a string since query parameters should be passed as strings. These query parameters are then passed to the `requests.post()` function.

|

||||||

|

|

||||||

|

This will send the query parameters along with the file in the POST request. Make sure to consult the API documentation for the list of available arguments and their expected values.
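With `normalize` enabled, the returned coordinates lie between 0 and 1, so a client that needs pixel positions has to scale them back. The sketch below assumes that `x` values are relative to the image width and `y` values to the image height, and that the caller already knows the image size; `box_to_pixels` is a hypothetical helper, not an API function.

```python
def box_to_pixels(box: dict, width: int, height: int) -> dict:
    """Scale a normalized box (values in 0..1) to integer pixel coordinates.

    Assumes x is relative to image width and y to image height.
    """
    return {
        "x1": round(box["x1"] * width),
        "y1": round(box["y1"] * height),
        "x2": round(box["x2"] * width),
        "y2": round(box["y2"] * height),
    }


# Illustrative values only, for a 640x480 image.
normalized = {"x1": 0.25, "y1": 0.5, "x2": 0.75, "y2": 1.0}
print(box_to_pixels(normalized, width=640, height=480))
```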

|

||||||

|

|

||||||

|

## Return JSON format

|

||||||

|

|

||||||

|

The YOLO Inference API returns a JSON list with the detection results. The format is the same as the one produced locally by calling `results[0].tojson()`.

|

||||||

|

|

||||||

|

The JSON list contains information about the detected objects, their coordinates, classes, and confidence scores.

|

||||||

|

|

||||||

|

### Detect Model Format

|

||||||

|

|

||||||

|

YOLO detection models, such as `yolov8n.pt`, can return JSON responses from local inference, CLI API inference, and Python API inference. All of these methods produce the same JSON response format.

|

||||||

|

|

||||||

|

!!! example "Detect Model JSON Response"

|

||||||

|

|

||||||

|

=== "Local"

|

||||||

|

```python

|

||||||

|

from ultralytics import YOLO

|

||||||

|

|

||||||

|

# Load model

|

||||||

|

model = YOLO('yolov8n.pt')

|

||||||

|

|

||||||

|

# Run inference

|

||||||

|

results = model('image.jpg')

|

||||||

|

|

||||||

|

# Print image.jpg results in JSON format

|

||||||

|

print(results[0].tojson())

|

||||||

|

```

|

||||||

|

|

||||||

|

=== "CLI API"

|

||||||

|

```commandline

|

||||||

|

curl -X POST -F image=@image.jpg "https://api.ultralytics.com/inference/v1?model=MODEL_ID&key=API_KEY"

|

||||||

|

```

|

||||||

|

|

||||||

|

=== "Python API"

|

||||||

|

```python

|

||||||

|

import requests

|

||||||

|

|

||||||

|

api_key = "API_KEY"

|

||||||

|

model_id = "MODEL_ID"

|

||||||

|

url = "https://api.ultralytics.com/inference/v1"

|

||||||

|

|

||||||

|

# Define your query parameters

|

||||||

|

params = {

|

||||||

|

"key": api_key,

|

||||||

|

"model": model_id,

|

||||||

|

}

|

||||||

|

|

||||||

|

image_path = "image.jpg"

|

||||||

|

|

||||||

|

with open(image_path, "rb") as image_file:

|

||||||

|

files = {"image": image_file}

|

||||||

|

response = requests.post(url, files=files, params=params)

|

||||||

|

|

||||||

|

print(response.text)

|

||||||

|

```

|

||||||

|

|

||||||

|

=== "JSON Response"

|

||||||

|

```json

|

||||||

|

[

|

||||||

|

{

|

||||||

|

"name": "person",

|

||||||

|

"class": 0,

|

||||||

|

"confidence": 0.8359682559967041,

|

||||||

|

"box": {

|

||||||

|

"x1": 0.08974208831787109,

|

||||||

|

"y1": 0.27418340047200523,

|

||||||

|

"x2": 0.8706787109375,

|

||||||

|

"y2": 0.9887352837456598

|

||||||

|

}

|

||||||

|

},

|

||||||

|

{

|

||||||

|

"name": "person",

|

||||||

|

"class": 0,

|

||||||

|

"confidence": 0.8189555406570435,

|

||||||

|

"box": {

|

||||||

|

"x1": 0.5847355842590332,

|

||||||

|

"y1": 0.05813225640190972,

|

||||||

|

"x2": 0.8930277824401855,

|

||||||

|

"y2": 0.9903111775716146

|

||||||

|

}

|

||||||

|

},

|

||||||

|

{

|

||||||

|

"name": "tie",

|

||||||

|

"class": 27,

|

||||||

|

"confidence": 0.2909725308418274,

|

||||||

|

"box": {

|

||||||

|

"x1": 0.3433395862579346,

|

||||||

|

"y1": 0.6070465511745877,

|

||||||

|

"x2": 0.40964522361755373,

|

||||||

|

"y2": 0.9849439832899306

|

||||||

|

}

|

||||||

|

}

|

||||||

|

]

|

||||||

|

```
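A response in the format above can be consumed with the standard `json` module. The following is a minimal sketch, assuming the field names shown in the example (`name`, `class`, `confidence`, `box`); the sample payload is a trimmed copy of that example with shortened values, and the 0.5 threshold and the function name `confident_detections` are arbitrary choices for illustration.

```python
import json

# Trimmed copy of the detect response shown above (values shortened).
SAMPLE_RESPONSE = """
[
  {"name": "person", "class": 0, "confidence": 0.836,
   "box": {"x1": 0.090, "y1": 0.274, "x2": 0.871, "y2": 0.989}},
  {"name": "tie", "class": 27, "confidence": 0.291,
   "box": {"x1": 0.343, "y1": 0.607, "x2": 0.410, "y2": 0.985}}
]
"""


def confident_detections(payload: str, threshold: float = 0.5) -> list:
    """Parse a JSON response and keep detections at or above the threshold."""
    detections = json.loads(payload)
    return [d for d in detections if d["confidence"] >= threshold]


for det in confident_detections(SAMPLE_RESPONSE):
    print(det["name"], det["class"], det["box"])
```

With a live request, `response.text` from the earlier examples would take the place of `SAMPLE_RESPONSE`.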

|

||||||

|

|

||||||

|

### Segment Model Format

|

||||||

|

|

||||||

|

YOLO segmentation models, such as `yolov8n-seg.pt`, can return JSON responses from local inference, CLI API inference, and Python API inference. All of these methods produce the same JSON response format.

|

||||||

|

|

||||||

|

!!! example "Segment Model JSON Response"

|

||||||

|

|

||||||

|

=== "Local"

|

||||||

|

```python

|

||||||

|

from ultralytics import YOLO

|

||||||

|

|

||||||

|

# Load model

|

||||||

|

model = YOLO('yolov8n-seg.pt')

|

||||||

|

|

||||||

|

# Run inference

|

||||||

|

results = model('image.jpg')

|

||||||

|

|

||||||

|

# Print image.jpg results in JSON format

|

||||||

|

print(results[0].tojson())

|

||||||

|

```

|

||||||

|

|

||||||

|

=== "CLI API"

|

||||||

|

```commandline

|

||||||

|

curl -X POST -F image=@image.jpg "https://api.ultralytics.com/inference/v1?model=MODEL_ID&key=API_KEY"

|

||||||

|

```

|

||||||

|

|

||||||

|

=== "Python API"

|

||||||

|

```python

|

||||||

|

import requests

|

||||||

|

|

||||||

|

api_key = "API_KEY"

|

||||||

|

model_id = "MODEL_ID"

|

||||||

|

url = "https://api.ultralytics.com/inference/v1"

|

||||||

|

|

||||||

|

# Define your query parameters

|

||||||

|

params = {

|

||||||

|

"key": api_key,

|

||||||

|

"model": model_id,

|

||||||

|

}

|

||||||

|

|

||||||

|

image_path = "image.jpg"

|

||||||

|

|

||||||

|

with open(image_path, "rb") as image_file:

|

||||||

|

files = {"image": image_file}

|

||||||

|

response = requests.post(url, files=files, params=params)

|

||||||

|

|

||||||

|

print(response.text)

|

||||||

|

```

|

||||||

|

|

||||||

|

=== "JSON Response"

|

||||||

|

Note that the `segments` `x` and `y` lists may vary in length from one object to another. Larger or more complex objects may have more segment points.

|

||||||

|

```json

|

||||||

|

[

|

||||||

|

{

|

||||||

|

"name": "person",

|

||||||

|

"class": 0,

|

||||||

|

"confidence": 0.856913149356842,

|

||||||

|

"box": {

|

||||||

|

"x1": 0.1064866065979004,

|

||||||

|

"y1": 0.2798851860894097,

|

||||||

|

"x2": 0.8738358497619629,

|

||||||

|

"y2": 0.9894873725043403

|

||||||

|

},

|

||||||

|

"segments": {

|

||||||

|

"x": [

|

||||||

|

0.421875,

|

||||||

|

0.4203124940395355,

|

||||||

|

0.41718751192092896

|

||||||

|

...

|

||||||

|

],

|

||||||

|

"y": [

|

||||||

|

0.2888889014720917,

|

||||||

|

0.2916666567325592,

|

||||||

|

0.2916666567325592

|

||||||

|

...

|

||||||

|

]

|

||||||

|

}

|

||||||

|

},

|

||||||

|

{

|

||||||

|

"name": "person",

|

||||||

|

"class": 0,

|

||||||

|

"confidence": 0.8512625694274902,

|

||||||

|

"box": {

|

||||||

|

"x1": 0.5757311820983887,

|

||||||

|

"y1": 0.053943040635850696,

|

||||||

|

"x2": 0.8960096359252929,

|

||||||

|

"y2": 0.985154045952691

|

||||||

|

},

|

||||||

|

"segments": {

|

||||||

|

"x": [

|

||||||

|

0.7515624761581421,

|

||||||

|

0.75,

|

||||||

|

0.7437499761581421

|

||||||

|

...

|

||||||

|

],

|

||||||

|

"y": [

|

||||||

|

0.0555555559694767,

|

||||||

|

0.05833333358168602,

|

||||||

|

0.05833333358168602

|

||||||

|

...

|

||||||

|

]

|

||||||

|

}

|

||||||

|

},

|

||||||

|

{

|

||||||

|

"name": "tie",

|

||||||

|

"class": 27,

|

||||||

|

"confidence": 0.6485961675643921,

|

||||||

|

"box": {

|

||||||

|

"x1": 0.33911995887756347,

|

||||||

|

"y1": 0.6057066175672743,

|

||||||

|

"x2": 0.4081430912017822,

|

||||||

|

"y2": 0.9916408962673611

|

||||||

|

},

|

||||||

|

"segments": {

|

||||||

|

"x": [

|

||||||

|

0.37187498807907104,

|

||||||

|

0.37031251192092896,

|

||||||

|

0.3687500059604645

|

||||||

|

...

|

||||||

|

],

|

||||||

|

"y": [

|

||||||

|

0.6111111044883728,

|

||||||

|

0.6138888597488403,

|

||||||

|

0.6138888597488403

|

||||||

|

...

|

||||||

|

]

|

||||||

|

}

|

||||||

|

}

|

||||||

|

]

|

||||||

|

```
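Because `segments` stores the outline as two parallel lists, a client will usually want to pair them into points before drawing or measuring. The sketch below assumes that `x[i]` and `y[i]` together describe the i-th point of the outline; `segment_polygon` is a hypothetical helper and the sample values are shortened from the example above.

```python
def segment_polygon(detection: dict) -> list:
    """Pair the parallel x and y lists of one detection into (x, y) points."""
    segments = detection["segments"]
    xs, ys = segments["x"], segments["y"]
    if len(xs) != len(ys):
        raise ValueError("segments x and y must have the same length")
    return list(zip(xs, ys))


# Shortened sample; a real response carries many more points per object.
detection = {
    "name": "person",
    "segments": {
        "x": [0.421875, 0.4203125, 0.4171875],
        "y": [0.2889, 0.2917, 0.2917],
    },
}
print(segment_polygon(detection))
```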

|

||||||

|

|

||||||

|

|

||||||

|

### Pose Model Format

|

||||||

|

|

||||||

|

YOLO pose models, such as `yolov8n-pose.pt`, can return JSON responses from local inference, CLI API inference, and Python API inference. All of these methods produce the same JSON response format.

|

||||||

|

|

||||||

|

!!! example "Pose Model JSON Response"

|

||||||

|

|

||||||

|

=== "Local"

|

||||||

|

```python

|

||||||

|

from ultralytics import YOLO

|

||||||

|

|

||||||

|

# Load model

|

||||||

|

model = YOLO('yolov8n-pose.pt')

|

||||||

|

|

||||||

|

# Run inference

|

||||||

|

results = model('image.jpg')

|

||||||

|

|

||||||

|

# Print image.jpg results in JSON format

|

||||||

|

print(results[0].tojson())

|

||||||

|

```

|

||||||

|

|

||||||

|

=== "CLI API"

|

||||||

|

```commandline

|

||||||

|

curl -X POST -F image=@image.jpg "https://api.ultralytics.com/inference/v1?model=MODEL_ID&key=API_KEY"

|

||||||

|

```

|

||||||

|

|

||||||

|

=== "Python API"

|

||||||

|

```python

|

||||||

|

import requests

|

||||||

|

|

||||||

|

api_key = "API_KEY"

|

||||||

|

model_id = "MODEL_ID"

|

||||||

|

url = "https://api.ultralytics.com/inference/v1"

|

||||||

|

|

||||||

|

# Define your query parameters

|

||||||

|

params = {

|

||||||

|

"key": api_key,

|

||||||

|

"model": model_id,

|

||||||

|

}

|

||||||

|

|

||||||

|

image_path = "image.jpg"

|

||||||

|

|

||||||

|

with open(image_path, "rb") as image_file:

|

||||||

|

files = {"image": image_file}

|

||||||

|

response = requests.post(url, files=files, params=params)

|

||||||

|

|

||||||

|

print(response.text)

|

||||||

|

```

|

||||||

|

|

||||||

|

=== "JSON Response"

|

||||||

|

Note that COCO-keypoints pretrained models have 17 human keypoints. The `visible` part of the keypoints indicates whether a keypoint is visible or obscured. Obscured keypoints may be outside the image or otherwise hidden, e.g. a person's eyes facing away from the camera.

|

||||||

|

```json

|

||||||

|

[

|

||||||

|

{

|

||||||

|

"name": "person",

|

||||||

|

"class": 0,

|

||||||

|

"confidence": 0.8439509868621826,

|

||||||

|

"box": {

|

||||||

|

"x1": 0.1125,

|

||||||

|

"y1": 0.28194444444444444,

|

||||||

|

"x2": 0.7953125,

|

||||||

|

"y2": 0.9902777777777778

|

||||||

|

},

|

||||||

|

"keypoints": {

|

||||||

|

"x": [

|

||||||

|

0.5058594942092896,

|

||||||

|

0.5103894472122192,

|

||||||

|

0.4920862317085266

|

||||||

|

...

|

||||||

|

],

|

||||||

|

"y": [

|

||||||

|

0.48964157700538635,

|

||||||

|

0.4643048942089081,

|

||||||

|

0.4465252459049225

|

||||||

|

...

|

||||||

|

],

|

||||||

|

"visible": [

|

||||||

|

0.8726999163627625,

|

||||||

|

0.653947651386261,

|

||||||

|

0.9130823612213135

|

||||||

|

...

|

||||||

|

]

|

||||||

|

}

|

||||||

|

},

|

||||||

|

{

|

||||||

|

"name": "person",

|

||||||

|

"class": 0,

|

||||||

|

"confidence": 0.7474289536476135,

|

||||||

|

"box": {

|

||||||

|

"x1": 0.58125,

|

||||||

|

"y1": 0.0625,

|

||||||

|

"x2": 0.8859375,

|

||||||

|

"y2": 0.9888888888888889

|

||||||

|

},

|

||||||

|

"keypoints": {

|

||||||

|

"x": [

|

||||||

|

0.778544008731842,

|

||||||

|

0.7976160049438477,

|

||||||

|

0.7530890107154846

|

||||||

|

...

|

||||||

|

],

|

||||||

|

"y": [

|

||||||

|

0.27595141530036926,

|

||||||

|

0.2378823608160019,

|

||||||

|

0.23644638061523438

|

||||||

|

...

|

||||||

|

],

|

||||||

|

"visible": [

|

||||||

|

0.8900790810585022,

|

||||||

|

0.789978563785553,

|

||||||

|

0.8974530100822449

|

||||||

|

...

|

||||||

|

]

|

||||||

|

}

|

||||||

|

}

|

||||||

|

]

|

||||||

|

```
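The `visible` values in the example above are fractional, so one plausible way to use them is as a score that is compared against a cutoff. The sketch below makes that assumption explicit; the 0.5 default and the name `visible_keypoints` are illustrative choices, not documented API behavior.

```python
def visible_keypoints(detection: dict, threshold: float = 0.5) -> list:
    """Return (index, x, y) for keypoints whose visibility meets the threshold.

    Assumes `visible` holds one score per keypoint, aligned with `x` and `y`.
    """
    keypoints = detection["keypoints"]
    triples = zip(keypoints["x"], keypoints["y"], keypoints["visible"])
    return [(i, x, y) for i, (x, y, v) in enumerate(triples) if v >= threshold]


# First three keypoints of the first person in the example above (rounded).
detection = {
    "name": "person",
    "keypoints": {
        "x": [0.5059, 0.5104, 0.4921],
        "y": [0.4896, 0.4643, 0.4465],
        "visible": [0.8727, 0.6539, 0.9131],
    },
}
print(visible_keypoints(detection, threshold=0.7))
```

The keypoint index is kept in the output so that each point can still be matched to its position in the 17-point COCO layout after filtering.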

|

||||||

@ -8,11 +8,6 @@

|

|||||||

:::ultralytics.yolo.utils.callbacks.mlflow.on_fit_epoch_end

|

:::ultralytics.yolo.utils.callbacks.mlflow.on_fit_epoch_end

|

||||||

<br><br>

|

<br><br>

|

||||||

|

|

||||||

# on_model_save

|

|

||||||

---

|

|

||||||

:::ultralytics.yolo.utils.callbacks.mlflow.on_model_save

|

|

||||||

<br><br>

|

|

||||||

|

|

||||||

# on_train_end

|

# on_train_end

|

||||||

---

|

---

|

||||||

:::ultralytics.yolo.utils.callbacks.mlflow.on_train_end

|

:::ultralytics.yolo.utils.callbacks.mlflow.on_train_end

|

||||||

|

|||||||

@ -68,7 +68,8 @@ the [Configuration](../usage/cfg.md) page.

|

|||||||

yolo detect train data=coco128.yaml model=yolov8n.yaml pretrained=yolov8n.pt epochs=100 imgsz=640

|

yolo detect train data=coco128.yaml model=yolov8n.yaml pretrained=yolov8n.pt epochs=100 imgsz=640

|

||||||

```

|

```

|

||||||

### Dataset format

|

### Dataset format

|

||||||

YOLO detection dataset format can be found in detail in the [Dataset Guide](../yolov5/train_custom_data.md).

|

|

||||||

|

YOLO detection dataset format can be found in detail in the [Dataset Guide](../yolov5/tutorials/train_custom_data.md).

|

||||||

To convert your existing dataset from other formats( like COCO, VOC etc.) to YOLO format, please use [json2yolo tool](https://github.com/ultralytics/JSON2YOLO) by Ultralytics.

|

To convert your existing dataset from other formats( like COCO, VOC etc.) to YOLO format, please use [json2yolo tool](https://github.com/ultralytics/JSON2YOLO) by Ultralytics.

|

||||||

|

|

||||||

## Val

|

## Val

|

||||||

|

|||||||

82

docs/yolov5/environments/aws_quickstart_tutorial.md

Normal file

@ -0,0 +1,82 @@

|

|||||||

|

# YOLOv5 🚀 on AWS Deep Learning Instance: A Comprehensive Guide

|

||||||

|

|

||||||

|

This guide will help new users run YOLOv5 on an Amazon Web Services (AWS) Deep Learning instance. AWS offers a [Free Tier](https://aws.amazon.com/free/) and a [credit program](https://aws.amazon.com/activate/) for a quick and affordable start.

|

||||||

|

|

||||||

|

Other quickstart options for YOLOv5 include our [Colab Notebook](https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb) <a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a> <a href="https://www.kaggle.com/ultralytics/yolov5"><img src="https://kaggle.com/static/images/open-in-kaggle.svg" alt="Open In Kaggle"></a>, [GCP Deep Learning VM](https://docs.ultralytics.com/yolov5/environments/google_cloud_quickstart_tutorial), and our Docker image at [Docker Hub](https://hub.docker.com/r/ultralytics/yolov5) <a href="https://hub.docker.com/r/ultralytics/yolov5"><img src="https://img.shields.io/docker/pulls/ultralytics/yolov5?logo=docker" alt="Docker Pulls"></a>. *Updated: 21 April 2023*.

|

||||||

|

|

||||||

|

## 1. AWS Console Sign-in

|

||||||

|

|

||||||

|

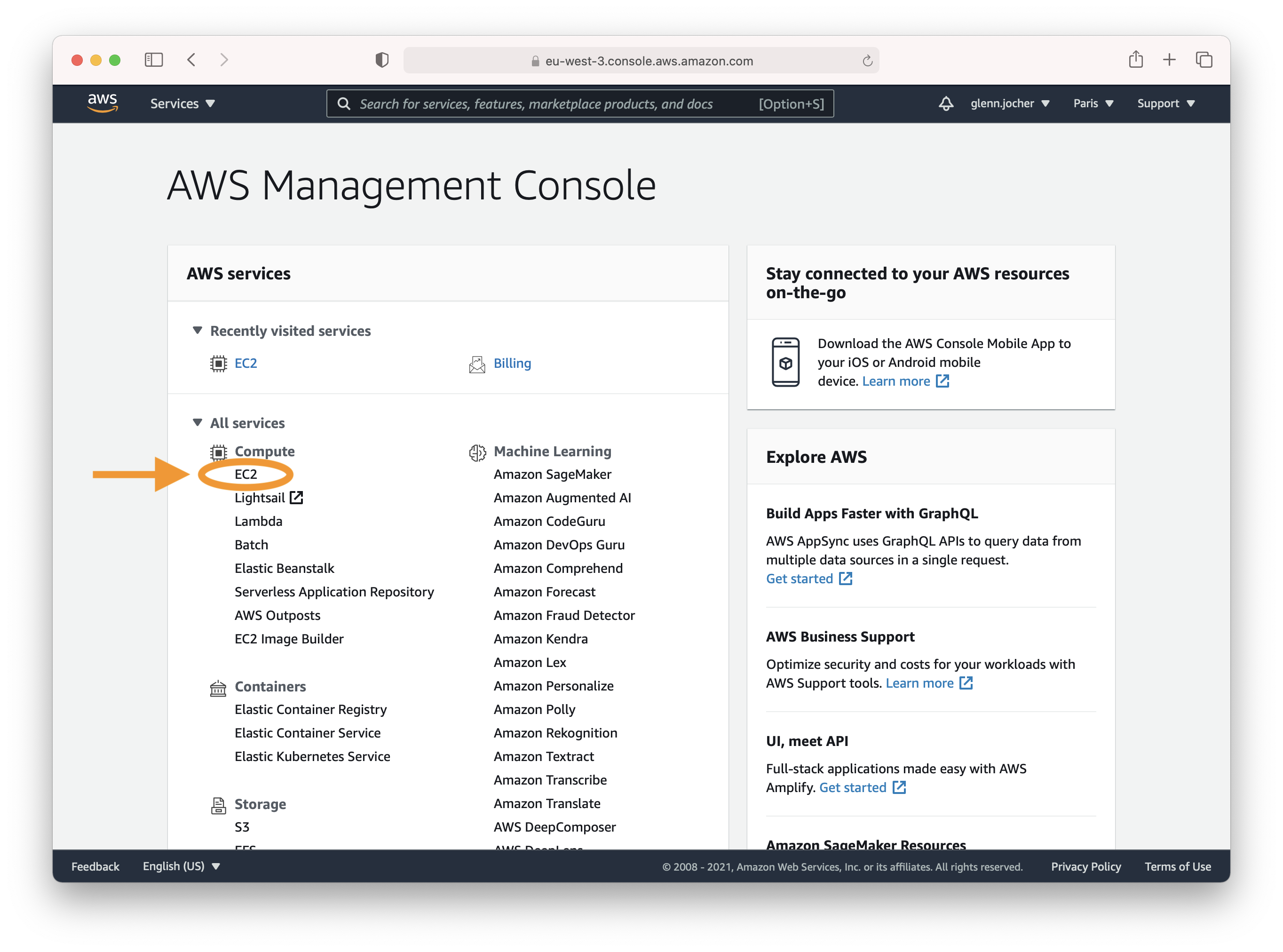

Create an account or sign in to the AWS console at [https://aws.amazon.com/console/](https://aws.amazon.com/console/) and select the **EC2** service.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

## 2. Launch Instance

|

||||||

|

|

||||||

|

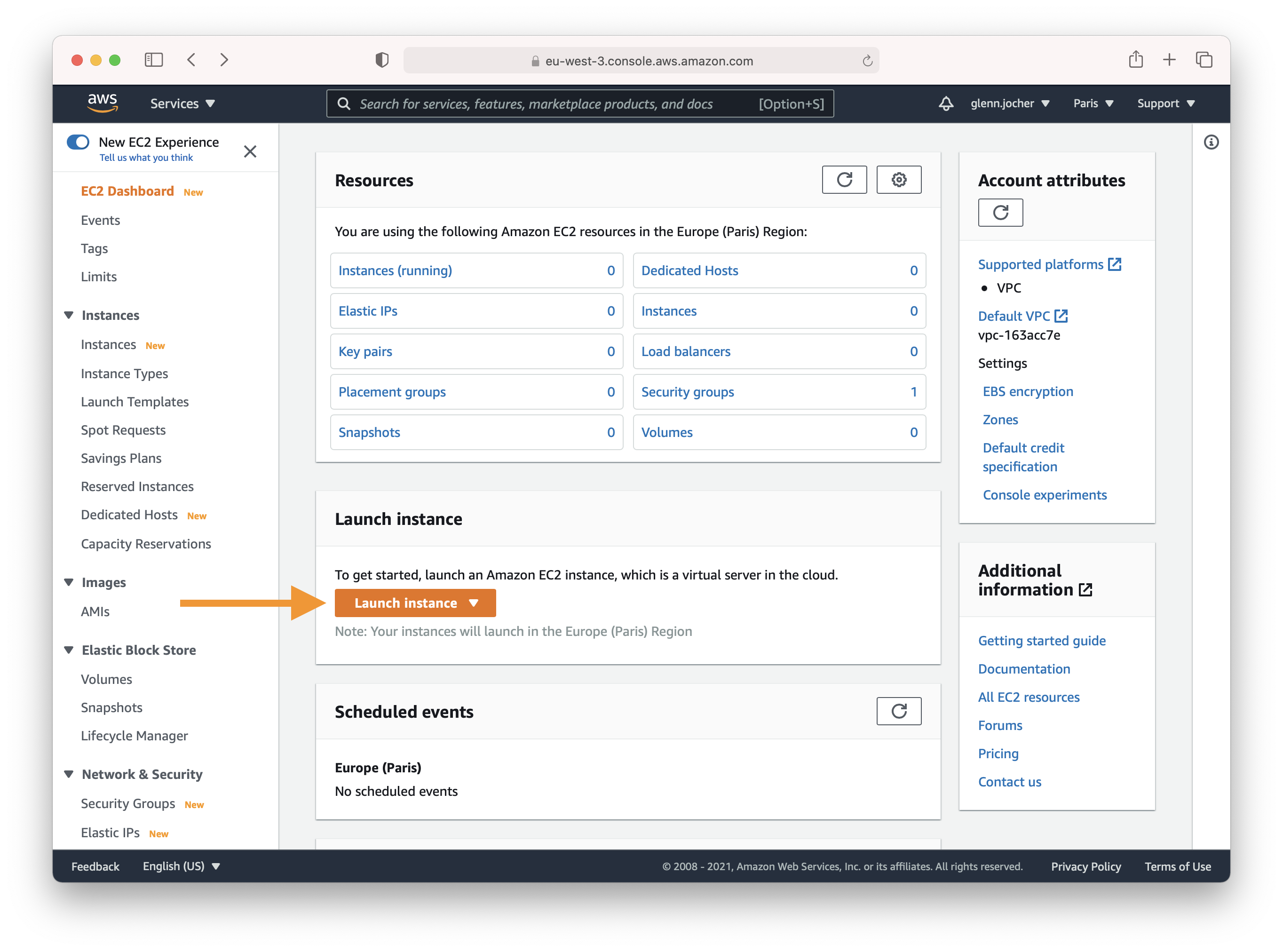

In the EC2 section of the AWS console, click the **Launch instance** button.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

### Choose an Amazon Machine Image (AMI)

|

||||||

|

|

||||||

|

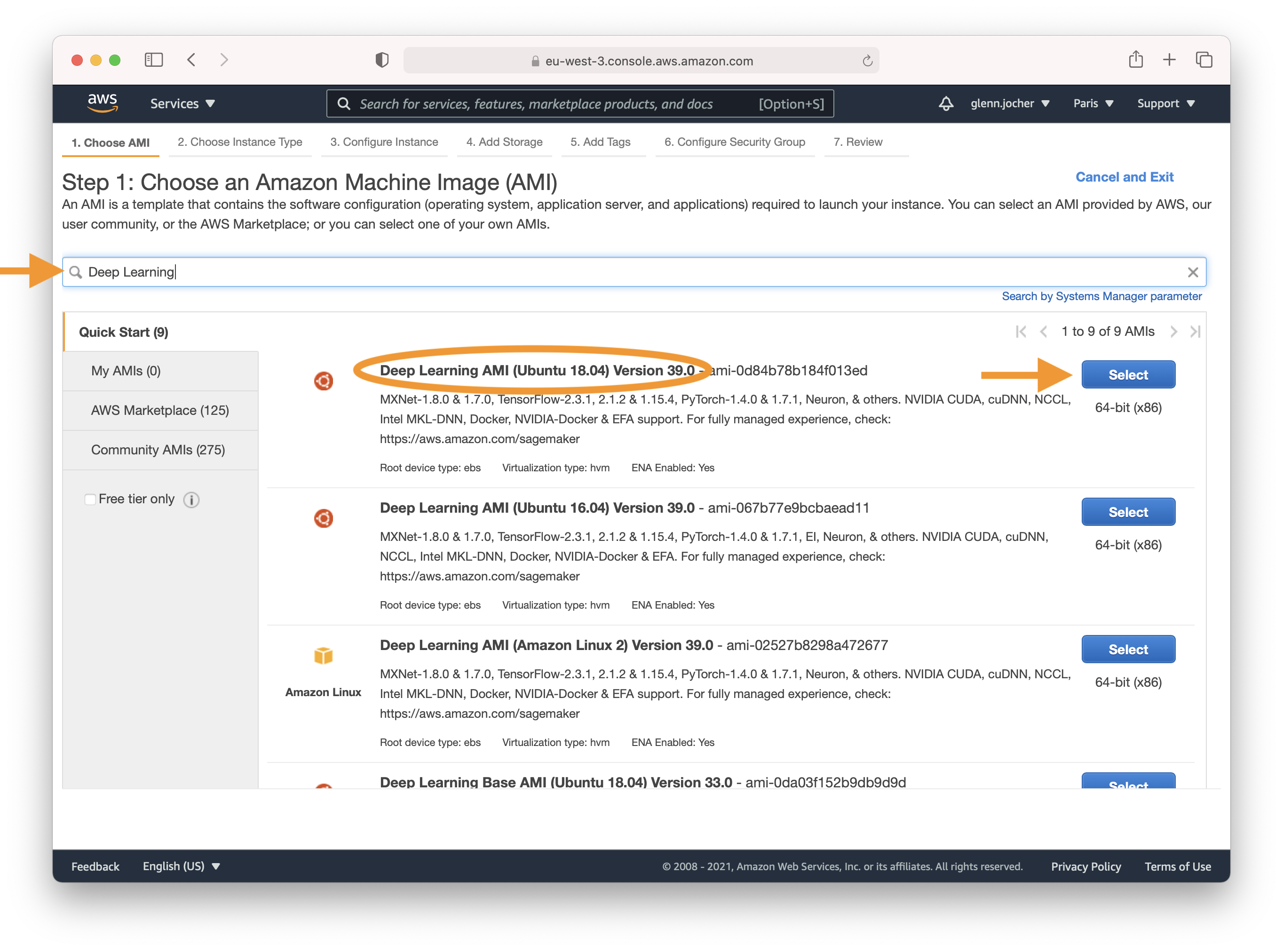

Enter 'Deep Learning' in the search field and select the most recent Ubuntu Deep Learning AMI (recommended), or an alternative Deep Learning AMI. For more information on selecting an AMI, see [Choosing Your DLAMI](https://docs.aws.amazon.com/dlami/latest/devguide/options.html).

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

### Select an Instance Type

|

||||||

|

|

||||||

|

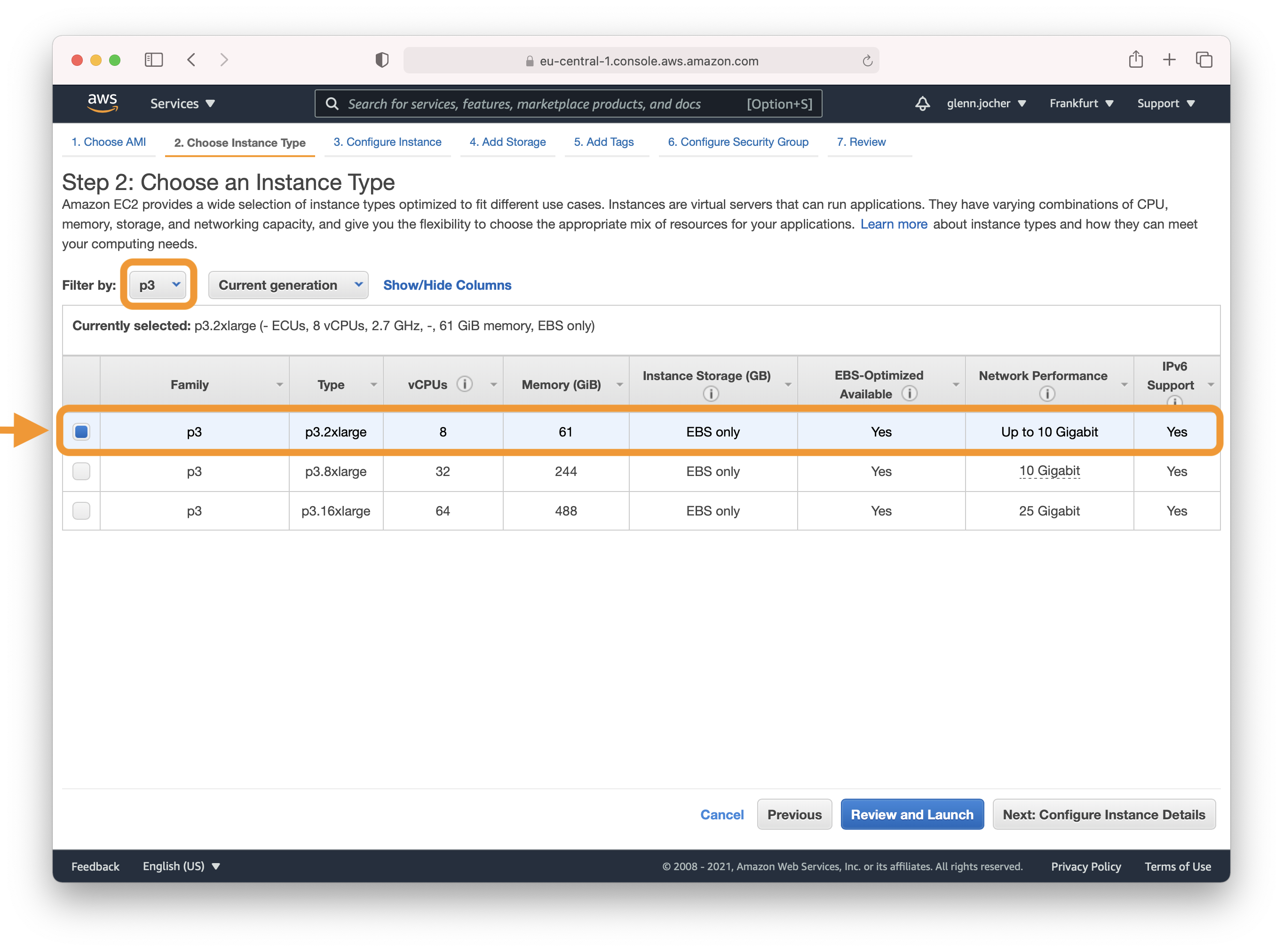

A GPU instance is recommended for most deep learning purposes. Training new models will be faster on a GPU instance than a CPU instance. Multi-GPU instances or distributed training across multiple instances with GPUs can offer sub-linear scaling. To set up distributed training, see [Distributed Training](https://docs.aws.amazon.com/dlami/latest/devguide/distributed-training.html).

|

||||||

|

|

||||||

|

**Note:** The size of your model should be a factor in selecting an instance. If your model exceeds an instance's available RAM, select a different instance type with enough memory for your application.

|

||||||

|

|

||||||

|

Refer to [EC2 Instance Types](https://aws.amazon.com/ec2/instance-types/) and choose Accelerated Computing to see the different GPU instance options.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

For more information on GPU monitoring and optimization, see [GPU Monitoring and Optimization](https://docs.aws.amazon.com/dlami/latest/devguide/tutorial-gpu.html). For pricing, see [On-Demand Pricing](https://aws.amazon.com/ec2/pricing/on-demand/) and [Spot Pricing](https://aws.amazon.com/ec2/spot/pricing/).

|

||||||

|

|

||||||

|

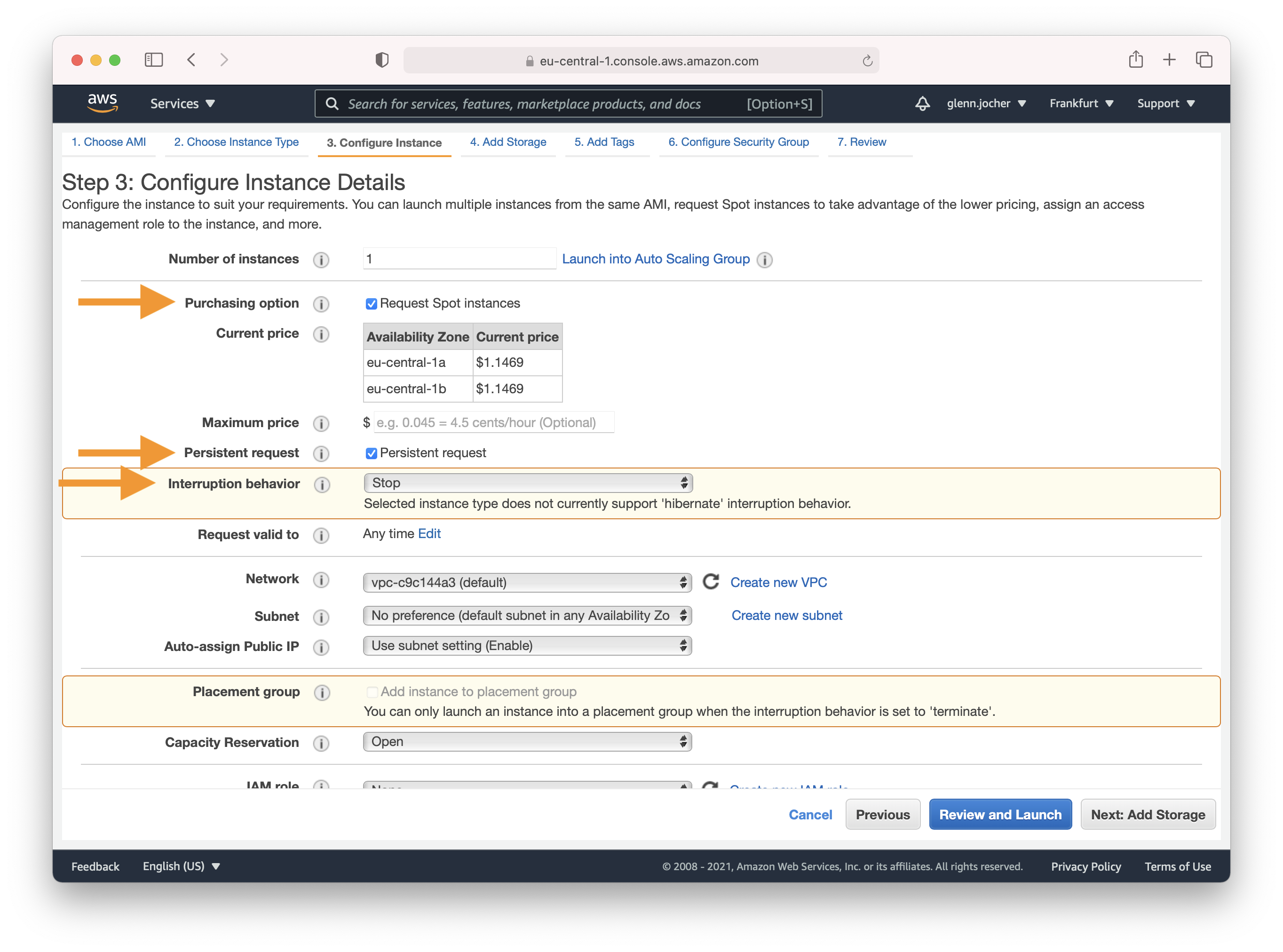

### Configure Instance Details

Amazon EC2 Spot Instances let you take advantage of unused EC2 capacity in the AWS cloud. Spot Instances are available at up to a 70% discount compared to On-Demand prices. We recommend a persistent Spot Instance, which saves your data and restarts automatically when Spot capacity becomes available again after an interruption. For full-price On-Demand instances, leave these settings at their default values.
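
To make the discount concrete, here is a small back-of-the-envelope sketch. The hourly price and the training duration are hypothetical placeholders, not AWS quotes; only the 70% figure comes from the paragraph above, and it is an upper bound rather than a guaranteed rate.

```python
def spot_cost(on_demand_hourly: float, hours: float, discount: float = 0.70) -> float:
    """Estimated cost of running `hours` on a Spot Instance at a given discount."""
    if not 0.0 <= discount <= 1.0:
        raise ValueError("discount must be between 0 and 1")
    return on_demand_hourly * hours * (1.0 - discount)


# Hypothetical numbers for illustration only (not real AWS prices).
on_demand_hourly = 3.00   # USD per hour, assumed
training_hours = 48       # assumed length of a training run

on_demand_total = on_demand_hourly * training_hours
spot_total = spot_cost(on_demand_hourly, training_hours)

print(f"On-Demand: ${on_demand_total:.2f}")
print(f"Spot at 70% off: ${spot_total:.2f}")
```

Actual Spot prices vary by instance type, region, and time, so check the pricing pages linked above before budgeting.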

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

Complete Steps 4-7 to finalize your instance hardware and security settings, and then launch the instance.

|

||||||

|

|

||||||

|

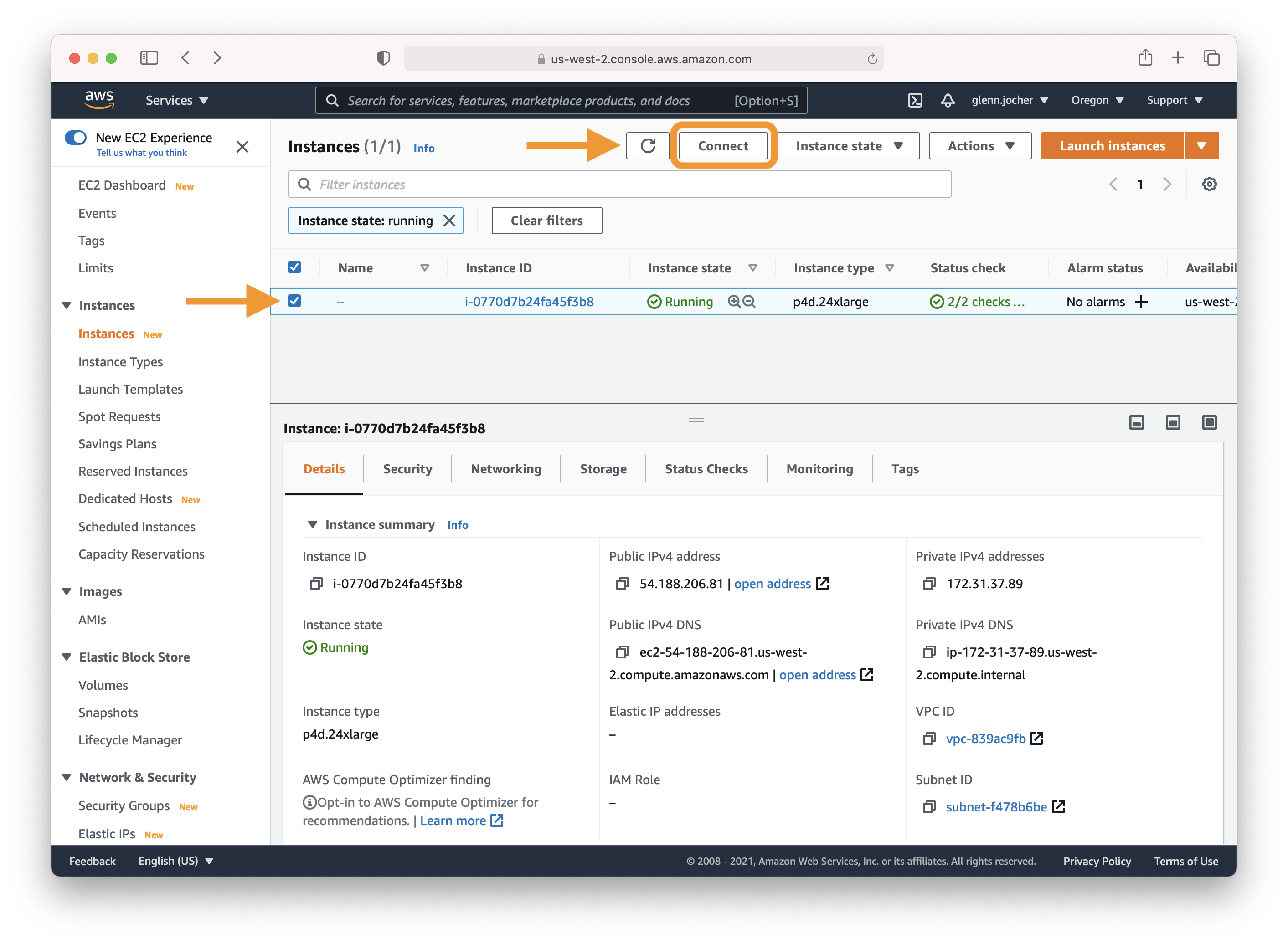

## 3. Connect to Instance

|

||||||

|

|

||||||

|

Select the checkbox next to your running instance, and then click Connect. Copy and paste the SSH terminal command into a terminal of your choice to connect to your instance.

## 4. Run YOLOv5

Once you have logged in to your instance, clone the repository and install the dependencies in a [**Python>=3.7.0**](https://www.python.org/) environment, including [**PyTorch>=1.7**](https://pytorch.org/get-started/locally/). [Models](https://github.com/ultralytics/yolov5/tree/master/models) and [datasets](https://github.com/ultralytics/yolov5/tree/master/data) download automatically from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases).

```bash
git clone https://github.com/ultralytics/yolov5  # clone
cd yolov5
pip install -r requirements.txt  # install
```

Then, start training, testing, detecting, and exporting YOLOv5 models:

```bash
python train.py  # train a model
python val.py --weights yolov5s.pt  # validate a model for Precision, Recall, and mAP
python detect.py --weights yolov5s.pt --source path/to/images  # run inference on images and videos
python export.py --weights yolov5s.pt --include onnx coreml tflite  # export models to other formats
```

## Optional Extras

Add 64GB of swap memory (to `--cache` large datasets):

```bash
sudo fallocate -l 64G /swapfile
sudo chmod 600 /swapfile
sudo mkswap /swapfile
sudo swapon /swapfile
free -h  # check memory
```
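
`free -h` prints a human-readable summary. To check the result from a script instead, one option (a sketch, assuming a Linux host where `/proc/meminfo` is available) is to read the `SwapTotal` field directly. The sample text below is illustrative, not captured from a real instance.

```python
from pathlib import Path


def swap_total_gib(meminfo_text: str) -> float:
    """Return the SwapTotal value from /proc/meminfo-style text, in GiB."""
    for line in meminfo_text.splitlines():
        if line.startswith("SwapTotal:"):
            kib = int(line.split()[1])  # the kernel reports this field in kB (KiB)
            return kib / (1024 * 1024)
    raise ValueError("SwapTotal field not found")


# Illustrative sample resembling the state after `swapon` on a 64G swapfile.
sample = (
    "MemTotal:       62837096 kB\n"
    "SwapTotal:      67108860 kB\n"
    "SwapFree:       67108860 kB\n"
)
print(f"sample swap: {swap_total_gib(sample):.1f} GiB")

# On a real Linux machine, read the live values instead.
meminfo = Path("/proc/meminfo")
if meminfo.exists():
    print(f"this machine: {swap_total_gib(meminfo.read_text()):.1f} GiB")
```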

Now you have successfully set up and run YOLOv5 on an AWS Deep Learning instance. Enjoy training, testing, and deploying your object detection models!

58

docs/yolov5/environments/docker_image_quickstart_tutorial.md

Normal file

@ -0,0 +1,58 @@

# Get Started with YOLOv5 🚀 in Docker

This tutorial will guide you through the process of setting up and running YOLOv5 in a Docker container.


You can also explore other quickstart options for YOLOv5, such as our [Colab Notebook](https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb) <a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a> <a href="https://www.kaggle.com/ultralytics/yolov5"><img src="https://kaggle.com/static/images/open-in-kaggle.svg" alt="Open In Kaggle"></a>, [GCP Deep Learning VM](https://docs.ultralytics.com/yolov5/environments/google_cloud_quickstart_tutorial), and [Amazon AWS](https://docs.ultralytics.com/yolov5/environments/aws_quickstart_tutorial). *Updated: 21 April 2023*.

## Prerequisites

1. **Nvidia Driver**: Version 455.23 or higher. Download from [Nvidia's website](https://www.nvidia.com/Download/index.aspx).
2. **Nvidia-Docker**: Allows Docker to interact with your local GPU. Installation instructions are available on the [Nvidia-Docker GitHub repository](https://github.com/NVIDIA/nvidia-docker).
3. **Docker Engine - CE**: Version 19.03 or higher. Download and installation instructions can be found on the [Docker website](https://docs.docker.com/install/).

## Step 1: Pull the YOLOv5 Docker Image

The Ultralytics YOLOv5 DockerHub repository is available at [https://hub.docker.com/r/ultralytics/yolov5](https://hub.docker.com/r/ultralytics/yolov5). Docker Autobuild ensures that the `ultralytics/yolov5:latest` image is always in sync with the most recent repository commit. To pull the latest image, run the following command:

```bash
sudo docker pull ultralytics/yolov5:latest
```

## Step 2: Run the Docker Container

### Basic container:

Run an interactive instance of the YOLOv5 Docker image (called a "container") using the `-it` flag:

```bash
sudo docker run --ipc=host -it ultralytics/yolov5:latest
```

### Container with local file access:

To run a container with access to local files (e.g., COCO training data in `/datasets`), use the `-v` flag:

```bash
sudo docker run --ipc=host -it -v "$(pwd)"/datasets:/usr/src/datasets ultralytics/yolov5:latest
```

### Container with GPU access:

To run a container with GPU access, use the `--gpus all` flag:

```bash
sudo docker run --ipc=host -it --gpus all ultralytics/yolov5:latest
```
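
The three variants above differ only in which flags are passed, and the flags can be combined. As a sketch, the hypothetical helper `docker_run_command` below (not part of YOLOv5 or Docker) assembles the command from the flags shown in this section. It only builds the argument list and does not start a container.

```python
import shlex
from pathlib import Path
from typing import List, Optional

IMAGE = "ultralytics/yolov5:latest"


def docker_run_command(datasets_dir: Optional[Path] = None, gpus: bool = False) -> List[str]:
    """Build a `docker run` argument list from the flags used in this tutorial."""
    cmd = ["sudo", "docker", "run", "--ipc=host", "-it"]
    if datasets_dir is not None:
        # Mount a local directory at /usr/src/datasets inside the container.
        cmd += ["-v", f"{datasets_dir}:/usr/src/datasets"]
    if gpus:
        cmd += ["--gpus", "all"]
    cmd.append(IMAGE)
    return cmd


# Local file access and GPU access together.
cmd = docker_run_command(datasets_dir=Path.cwd() / "datasets", gpus=True)
print(shlex.join(cmd))
```

The printed line can be pasted into a terminal, or the list can be handed to `subprocess.run(cmd)` on a machine that meets the prerequisites.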

## Step 3: Use YOLOv5 🚀 within the Docker Container

Now you can train, test, detect, and export YOLOv5 models within the running Docker container:

```bash
python train.py  # train a model
python val.py --weights yolov5s.pt  # validate a model for Precision, Recall, and mAP
python detect.py --weights yolov5s.pt --source path/to/images  # run inference on images and videos
python export.py --weights yolov5s.pt --include onnx coreml tflite  # export models to other formats
```

<p align="center"><img width="1000" src="https://user-images.githubusercontent.com/26833433/142224770-6e57caaf-ac01-4719-987f-c37d1b6f401f.png"></p>

43

docs/yolov5/environments/google_cloud_quickstart_tutorial.md

Normal file

@ -0,0 +1,43 @@

# Run YOLOv5 🚀 on Google Cloud Platform (GCP) Deep Learning Virtual Machine (VM) ⭐

This tutorial will guide you through the process of setting up and running YOLOv5 on a GCP Deep Learning VM. New GCP users are eligible for a [$300 free credit offer](https://cloud.google.com/free/docs/gcp-free-tier#free-trial).


You can also explore other quickstart options for YOLOv5, such as our [Colab Notebook](https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb) <a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a> <a href="https://www.kaggle.com/ultralytics/yolov5"><img src="https://kaggle.com/static/images/open-in-kaggle.svg" alt="Open In Kaggle"></a>, [Amazon AWS](https://docs.ultralytics.com/yolov5/environments/aws_quickstart_tutorial) and our Docker image at [Docker Hub](https://hub.docker.com/r/ultralytics/yolov5) <a href="https://hub.docker.com/r/ultralytics/yolov5"><img src="https://img.shields.io/docker/pulls/ultralytics/yolov5?logo=docker" alt="Docker Pulls"></a>. *Updated: 21 April 2023*.

**Last Updated**: 6 May 2022

## Step 1: Create a Deep Learning VM

1. Go to the [GCP marketplace](https://console.cloud.google.com/marketplace/details/click-to-deploy-images/deeplearning) and select a **Deep Learning VM**.
2. Choose an **n1-standard-8** instance (with 8 vCPUs and 30 GB memory).
3. Add a GPU of your choice.
4. Check 'Install NVIDIA GPU driver automatically on first startup?'
5. Select a 300 GB SSD Persistent Disk for sufficient I/O speed.
6. Click 'Deploy'.

The preinstalled [Anaconda](https://docs.anaconda.com/anaconda/packages/pkg-docs/) Python environment includes all dependencies.

<img width="1000" alt="GCP Marketplace" src="https://user-images.githubusercontent.com/26833433/105811495-95863880-5f61-11eb-841d-c2f2a5aa0ffe.png">

## Step 2: Set Up the VM

Clone the YOLOv5 repository and install the [requirements.txt](https://github.com/ultralytics/yolov5/blob/master/requirements.txt) in a [**Python>=3.7.0**](https://www.python.org/) environment, including [**PyTorch>=1.7**](https://pytorch.org/get-started/locally/). [Models](https://github.com/ultralytics/yolov5/tree/master/models) and [datasets](https://github.com/ultralytics/yolov5/tree/master/data) will be downloaded automatically from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases).

```bash
git clone https://github.com/ultralytics/yolov5  # clone
cd yolov5
pip install -r requirements.txt  # install
```

## Step 3: Run YOLOv5 🚀 on the VM

You can now train, test, detect, and export YOLOv5 models on your VM:

```bash
python train.py  # train a model
python val.py --weights yolov5s.pt  # validate a model for Precision, Recall, and mAP
python detect.py --weights yolov5s.pt --source path/to/images  # run inference on images and videos
python export.py --weights yolov5s.pt --include onnx coreml tflite  # export models to other formats
```

<img width="1000" alt="GCP terminal" src="https://user-images.githubusercontent.com/26833433/142223900-275e5c9e-e2b5-43f7-a21c-35c4ca7de87c.png">

@ -24,22 +24,22 @@ This powerful deep learning framework is built on the PyTorch platform and has g

|

|||||||

|

|

||||||

## Tutorials

|

## Tutorials

|

||||||

|

|

||||||

* [Train Custom Data](train_custom_data.md) 🚀 RECOMMENDED

|

* [Train Custom Data](tutorials/train_custom_data.md) 🚀 RECOMMENDED

|

||||||

* [Tips for Best Training Results](tips_for_best_training_results.md) ☘️

|

* [Tips for Best Training Results](tutorials/tips_for_best_training_results.md) ☘️

|

||||||

* [Multi-GPU Training](multi_gpu_training.md)

|

* [Multi-GPU Training](tutorials/multi_gpu_training.md)

|

||||||

* [PyTorch Hub](pytorch_hub.md) 🌟 NEW

|

* [PyTorch Hub](tutorials/pytorch_hub_model_loading.md) 🌟 NEW

|

||||||

* [TFLite, ONNX, CoreML, TensorRT Export](export.md) 🚀

|

* [TFLite, ONNX, CoreML, TensorRT Export](tutorials/model_export.md) 🚀

|

||||||

* [NVIDIA Jetson platform Deployment](jetson_nano.md) 🌟 NEW

|

* [NVIDIA Jetson platform Deployment](tutorials/running_on_jetson_nano.md) 🌟 NEW

|

||||||

* [Test-Time Augmentation (TTA)](tta.md)

|

* [Test-Time Augmentation (TTA)](tutorials/test_time_augmentation.md)

|

||||||

* [Model Ensembling](ensemble.md)

|

* [Model Ensembling](tutorials/model_ensembling.md)

|

||||||

* [Model Pruning/Sparsity](pruning_sparsity.md)

|

* [Model Pruning/Sparsity](tutorials/model_pruning_and_sparsity.md)

|

||||||

* [Hyperparameter Evolution](hyp_evolution.md)

|

* [Hyperparameter Evolution](tutorials/hyperparameter_evolution.md)

|

||||||

* [Transfer Learning with Frozen Layers](transfer_learn_frozen.md)

|

* [Transfer Learning with Frozen Layers](tutorials/transfer_learning_with_frozen_layers.md)

|

||||||

* [Architecture Summary](architecture.md) 🌟 NEW

|

* [Architecture Summary](tutorials/architecture_description.md) 🌟 NEW

|

||||||

* [Roboflow for Datasets, Labeling, and Active Learning](roboflow.md)

|

* [Roboflow for Datasets, Labeling, and Active Learning](tutorials/roboflow_datasets_integration.md)

|

||||||

* [ClearML Logging](clearml.md) 🌟 NEW

|

* [ClearML Logging](tutorials/clearml_logging_integration.md) 🌟 NEW

|

||||||

* [YOLOv5 with Neural Magic's Deepsparse](neural_magic.md) 🌟 NEW

|

* [YOLOv5 with Neural Magic's Deepsparse](tutorials/neural_magic_pruning_quantization.md) 🌟 NEW

|

||||||

* [Comet Logging](comet.md) 🌟 NEW

|

* [Comet Logging](tutorials/comet_logging_integration.md) 🌟 NEW

|

||||||

|

|

||||||

## Environments

|

## Environments

|

||||||

|

|

||||||

@ -50,10 +50,10 @@ and [PyTorch](https://pytorch.org/) preinstalled):

|

|||||||

- **Notebooks** with free

|

- **Notebooks** with free

|

||||||

GPU: <a href="https://bit.ly/yolov5-paperspace-notebook"><img src="https://assets.paperspace.io/img/gradient-badge.svg" alt="Run on Gradient"></a> <a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a> <a href="https://www.kaggle.com/ultralytics/yolov5"><img src="https://kaggle.com/static/images/open-in-kaggle.svg" alt="Open In Kaggle"></a>

|

GPU: <a href="https://bit.ly/yolov5-paperspace-notebook"><img src="https://assets.paperspace.io/img/gradient-badge.svg" alt="Run on Gradient"></a> <a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a> <a href="https://www.kaggle.com/ultralytics/yolov5"><img src="https://kaggle.com/static/images/open-in-kaggle.svg" alt="Open In Kaggle"></a>

|

||||||

- **Google Cloud** Deep Learning VM.

|

- **Google Cloud** Deep Learning VM.

|

||||||

See [GCP Quickstart Guide](https://github.com/ultralytics/yolov5/wiki/GCP-Quickstart)

|

See [GCP Quickstart Guide](environments/google_cloud_quickstart_tutorial.md)

|

||||||

- **Amazon** Deep Learning AMI. See [AWS Quickstart Guide](https://github.com/ultralytics/yolov5/wiki/AWS-Quickstart)

|

- **Amazon** Deep Learning AMI. See [AWS Quickstart Guide](environments/aws_quickstart_tutorial.md)

|

||||||

- **Docker Image**.

|

- **Docker Image**.

|

||||||

See [Docker Quickstart Guide](https://github.com/ultralytics/yolov5/wiki/Docker-Quickstart) <a href="https://hub.docker.com/r/ultralytics/yolov5"><img src="https://img.shields.io/docker/pulls/ultralytics/yolov5?logo=docker" alt="Docker Pulls"></a>

|

See [Docker Quickstart Guide](environments/docker_image_quickstart_tutorial.md) <a href="https://hub.docker.com/r/ultralytics/yolov5"><img src="https://img.shields.io/docker/pulls/ultralytics/yolov5?logo=docker" alt="Docker Pulls"></a>

|

||||||

|

|

||||||

## Status

|

## Status

|

||||||

|

|

||||||

|

|||||||

76

docs/yolov5/quickstart_tutorial.md

Normal file

@ -0,0 +1,76 @@

# YOLOv5 Quickstart

See below for quickstart examples.

## Install

Clone repo and install [requirements.txt](https://github.com/ultralytics/yolov5/blob/master/requirements.txt) in a
[**Python>=3.7.0**](https://www.python.org/) environment, including
[**PyTorch>=1.7**](https://pytorch.org/get-started/locally/).

```bash
git clone https://github.com/ultralytics/yolov5  # clone
cd yolov5
pip install -r requirements.txt  # install
```

## Inference

YOLOv5 [PyTorch Hub](https://github.com/ultralytics/yolov5/issues/36) inference. [Models](https://github.com/ultralytics/yolov5/tree/master/models) download automatically from the latest
YOLOv5 [release](https://github.com/ultralytics/yolov5/releases).

```python
import torch

# Model
model = torch.hub.load("ultralytics/yolov5", "yolov5s")  # or yolov5n - yolov5x6, custom

# Images
img = "https://ultralytics.com/images/zidane.jpg"  # or file, Path, PIL, OpenCV, numpy, list

# Inference
results = model(img)

# Results
results.print()  # or .show(), .save(), .crop(), .pandas(), etc.
```
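
Beyond printing, detections are often post-processed. The sketch below assumes each detection has already been converted to a plain row of `[x1, y1, x2, y2, confidence, class]`, the column order of `results.xyxy[0]` (for example via `results.xyxy[0].tolist()`). The rows here are made-up values so the snippet runs without downloading a model, and the helper names are hypothetical.

```python
from typing import List, Sequence

Row = Sequence[float]  # [x1, y1, x2, y2, confidence, class]


def filter_detections(rows: List[Row], min_conf: float = 0.5) -> List[Row]:
    """Keep rows whose confidence (column 4) is at least `min_conf`."""
    return [row for row in rows if row[4] >= min_conf]


def box_area(row: Row) -> float:
    """Area in pixels of an xyxy box."""
    x1, y1, x2, y2 = row[:4]
    return max(0.0, x2 - x1) * max(0.0, y2 - y1)


# Made-up rows for illustration; real values come from the model.
rows = [
    [100.0, 50.0, 300.0, 450.0, 0.88, 0.0],
    [120.0, 200.0, 180.0, 400.0, 0.31, 27.0],
]

for row in filter_detections(rows):
    print(f"class={int(row[5])} conf={row[4]:.2f} area={box_area(row):.0f}px")
```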

## Inference with detect.py

`detect.py` runs inference on a variety of sources, downloading [models](https://github.com/ultralytics/yolov5/tree/master/models) automatically from
the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases) and saving results to `runs/detect`.

```bash
python detect.py --weights yolov5s.pt --source 0                               # webcam
                                               img.jpg                         # image
                                               vid.mp4                         # video
                                               screen                          # screenshot
                                               path/                           # directory
                                               list.txt                        # list of images
                                               list.streams                    # list of streams
                                               'path/*.jpg'                    # glob
                                               'https://youtu.be/Zgi9g1ksQHc'  # YouTube
                                               'rtsp://example.com/media.mp4'  # RTSP, RTMP, HTTP stream
```
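
The `--source` values above fall into a few categories. The function below is a simplified sketch of how such a string could be classified. It is an illustration written for this guide, not the logic `detect.py` actually uses, and the extension sets are abbreviated assumptions.

```python
from pathlib import Path

IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}   # abbreviated, assumed
VIDEO_EXTS = {".mp4", ".avi", ".mov", ".mkv"}    # abbreviated, assumed
STREAM_PREFIXES = ("rtsp://", "rtmp://", "http://", "https://")


def classify_source(source: str) -> str:
    """Return a rough category for a detect.py-style --source string."""
    s = source.lower()
    if s.isnumeric():
        return "webcam"
    if s.startswith("screen"):
        return "screenshot"
    if s.startswith(STREAM_PREFIXES):
        return "stream"
    if s.endswith(".streams"):
        return "stream list"
    if "*" in s:
        return "glob"
    suffix = Path(s).suffix
    if suffix in IMAGE_EXTS:
        return "image"
    if suffix in VIDEO_EXTS:
        return "video"
    if suffix == ".txt":
        return "image list"
    return "directory"


examples = ["0", "img.jpg", "vid.mp4", "screen", "path/", "list.txt",
            "list.streams", "path/*.jpg", "https://youtu.be/Zgi9g1ksQHc",
            "rtsp://example.com/media.mp4"]
for src in examples:
    print(f"{src:32} -> {classify_source(src)}")
```

The order of the checks matters: the URL and glob tests run before the extension test so that `rtsp://example.com/media.mp4` is treated as a stream and `path/*.jpg` as a glob, not as a single file.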

## Training

The commands below reproduce YOLOv5 [COCO](https://github.com/ultralytics/yolov5/blob/master/data/scripts/get_coco.sh)
results. [Models](https://github.com/ultralytics/yolov5/tree/master/models)
and [datasets](https://github.com/ultralytics/yolov5/tree/master/data) download automatically from the latest
YOLOv5 [release](https://github.com/ultralytics/yolov5/releases). Training times for YOLOv5n/s/m/l/x are
1/2/4/6/8 days on a V100 GPU ([Multi-GPU](https://github.com/ultralytics/yolov5/issues/475) times faster). Use the
largest `--batch-size` possible, or pass `--batch-size -1` for
YOLOv5 [AutoBatch](https://github.com/ultralytics/yolov5/pull/5092). Batch sizes shown for V100-16GB.

```bash
python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5n.yaml --batch-size 128
                                                                 yolov5s                    64
                                                                 yolov5m                    40
                                                                 yolov5l                    24
                                                                 yolov5x                    16
```
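
The compact listing above stands for five separate commands, one per model. A short sketch that expands it, using the batch sizes shown for a V100-16GB (the helper name `train_command` is hypothetical):

```python
import shlex

# Model name -> batch size, as listed above for a V100-16GB.
BATCH_SIZES = {"yolov5n": 128, "yolov5s": 64, "yolov5m": 40, "yolov5l": 24, "yolov5x": 16}


def train_command(model: str, batch_size: int, epochs: int = 300) -> str:
    """Return the train.py command line for training `model` on COCO."""
    args = [
        "python", "train.py",
        "--data", "coco.yaml",
        "--epochs", str(epochs),
        "--weights", "",              # empty string, as in the listing above
        "--cfg", f"{model}.yaml",
        "--batch-size", str(batch_size),
    ]
    return shlex.join(args)


for model, batch in BATCH_SIZES.items():
    print(train_command(model, batch))
```

On a GPU with less memory than a V100-16GB, these batch sizes may not fit; passing `-1` as the batch size selects AutoBatch, as noted above.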

<img width="800" src="https://user-images.githubusercontent.com/26833433/90222759-949d8800-ddc1-11ea-9fa1-1c97eed2b963.png">

@ -191,19 +191,3 @@ Match positive samples:

|

|||||||

- Because the center point offset range is adjusted from (0, 1) to (-0.5, 1.5). GT Box can be assigned to more anchors.

|

- Because the center point offset range is adjusted from (0, 1) to (-0.5, 1.5). GT Box can be assigned to more anchors.

|

||||||

|

|

||||||

<img src="https://user-images.githubusercontent.com/31005897/158508139-9db4e8c2-cf96-47e0-bc80-35d11512f296.png#pic_center" width=70%>

|

<img src="https://user-images.githubusercontent.com/31005897/158508139-9db4e8c2-cf96-47e0-bc80-35d11512f296.png#pic_center" width=70%>

|

||||||

|

|

||||||

## Environments

|

|

||||||

|

|

||||||

YOLOv5 may be run in any of the following up-to-date verified environments (with all dependencies including [CUDA](https://developer.nvidia.com/cuda)/[CUDNN](https://developer.nvidia.com/cudnn), [Python](https://www.python.org/) and [PyTorch](https://pytorch.org/) preinstalled):

|

|

||||||

|

|

||||||

- **Notebooks** with free GPU: <a href="https://bit.ly/yolov5-paperspace-notebook"><img src="https://assets.paperspace.io/img/gradient-badge.svg" alt="Run on Gradient"></a> <a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a> <a href="https://www.kaggle.com/ultralytics/yolov5"><img src="https://kaggle.com/static/images/open-in-kaggle.svg" alt="Open In Kaggle"></a>

|

|

||||||

- **Google Cloud** Deep Learning VM. See [GCP Quickstart Guide](https://github.com/ultralytics/yolov5/wiki/GCP-Quickstart)

|

|

||||||

- **Amazon** Deep Learning AMI. See [AWS Quickstart Guide](https://github.com/ultralytics/yolov5/wiki/AWS-Quickstart)

|

|

||||||

- **Docker Image**. See [Docker Quickstart Guide](https://github.com/ultralytics/yolov5/wiki/Docker-Quickstart) <a href="https://hub.docker.com/r/ultralytics/yolov5"><img src="https://img.shields.io/docker/pulls/ultralytics/yolov5?logo=docker" alt="Docker Pulls"></a>

|

|

||||||

|

|

||||||

|

|

||||||

## Status

|

|

||||||

|

|

||||||

<a href="https://github.com/ultralytics/yolov5/actions/workflows/ci-testing.yml"><img src="https://github.com/ultralytics/yolov5/actions/workflows/ci-testing.yml/badge.svg" alt="YOLOv5 CI"></a>

|

|

||||||

|

|

||||||

If this badge is green, all [YOLOv5 GitHub Actions](https://github.com/ultralytics/yolov5/actions) Continuous Integration (CI) tests are currently passing. CI tests verify correct operation of YOLOv5 [training](https://github.com/ultralytics/yolov5/blob/master/train.py), [validation](https://github.com/ultralytics/yolov5/blob/master/val.py), [inference](https://github.com/ultralytics/yolov5/blob/master/detect.py), [export](https://github.com/ultralytics/yolov5/blob/master/export.py) and [benchmarks](https://github.com/ultralytics/yolov5/blob/master/benchmarks.py) on macOS, Windows, and Ubuntu every 24 hours and on every commit.

|

|

||||||

@ -4,11 +4,12 @@ This repository features a collection of real-world applications and walkthrough

|

|||||||

|

|

||||||

### Ultralytics YOLO Example Applications

|

### Ultralytics YOLO Example Applications

|

||||||

|

|

||||||

| Title | Format | Contributor |

|

| Title | Format | Contributor |

|

||||||

| ------------------------------------------------------------------------ | ------------------ | --------------------------------------------------- |

|

| -------------------------------------------------------------------------------------------------------------- | ------------------ | --------------------------------------------------- |

|

||||||

| [YOLO ONNX Detection Inference with C++](./YOLOv8-CPP-Inference) | C++/ONNX | [Justas Bartnykas](https://github.com/JustasBart) |

|

| [YOLO ONNX Detection Inference with C++](./YOLOv8-CPP-Inference) | C++/ONNX | [Justas Bartnykas](https://github.com/JustasBart) |

|

||||||

| [YOLO OpenCV ONNX Detection Python](./YOLOv8-OpenCV-ONNX-Python) | OpenCV/Python/ONNX | [Farid Inawan](https://github.com/frdteknikelektro) |

|

| [YOLO OpenCV ONNX Detection Python](./YOLOv8-OpenCV-ONNX-Python) | OpenCV/Python/ONNX | [Farid Inawan](https://github.com/frdteknikelektro) |

|

||||||

| [YOLO .Net ONNX Detection C#](https://www.nuget.org/packages/Yolov8.Net) | C# .Net | [Samuel Stainback](https://github.com/sstainba) |

|

| [YOLO .Net ONNX Detection C#](https://www.nuget.org/packages/Yolov8.Net) | C# .Net | [Samuel Stainback](https://github.com/sstainba) |

|

||||||

|

| [YOLOv8 on NVIDIA Jetson(TensorRT and DeepStream)](https://wiki.seeedstudio.com/YOLOv8-DeepStream-TRT-Jetson/) | Python | [Lakshantha](https://github.com/lakshanthad) |

|

||||||

|

|

||||||

### How to Contribute

|

### How to Contribute

|

||||||

|

|

||||||

|

|||||||

39

mkdocs.yml

@ -42,6 +42,7 @@ theme:

|

|||||||

- navigation.top

|

- navigation.top

|

||||||

- navigation.tabs

|

- navigation.tabs

|

||||||

- navigation.tabs.sticky

|

- navigation.tabs.sticky

|

||||||

|

- navigation.expand

|

||||||

- navigation.footer

|

- navigation.footer

|

||||||

- navigation.tracking

|

- navigation.tracking

|

||||||

- navigation.instant

|

- navigation.instant

|

||||||

@ -230,20 +231,26 @@ nav:

|

|||||||

|

|

||||||

- YOLOv5:

|

- YOLOv5:

|

||||||

- yolov5/index.md

|

- yolov5/index.md

|

||||||

- Train Custom Data: yolov5/train_custom_data.md

|

- Quickstart: yolov5/quickstart_tutorial.md

|

||||||

- Tips for Best Training Results: yolov5/tips_for_best_training_results.md

|

- Environments:

|

||||||

- Multi-GPU Training: yolov5/multi_gpu_training.md

|

- Amazon Web Services (AWS): yolov5/environments/aws_quickstart_tutorial.md

|

||||||

- PyTorch Hub: yolov5/pytorch_hub.md

|

- Google Cloud (GCP): yolov5/environments/google_cloud_quickstart_tutorial.md

|

||||||

- TFLite, ONNX, CoreML, TensorRT Export: yolov5/export.md

|

- Docker Image: yolov5/environments/docker_image_quickstart_tutorial.md

|

||||||

- NVIDIA Jetson Nano Deployment: yolov5/jetson_nano.md

|

- Tutorials:

|

||||||

- Test-Time Augmentation (TTA): yolov5/tta.md

|

- Train Custom Data: yolov5/tutorials/train_custom_data.md

|

||||||

- Model Ensembling: yolov5/ensemble.md

|

- Tips for Best Training Results: yolov5/tutorials/tips_for_best_training_results.md

|

||||||

- Pruning/Sparsity Tutorial: yolov5/pruning_sparsity.md

|

- Multi-GPU Training: yolov5/tutorials/multi_gpu_training.md

|

||||||

- Hyperparameter evolution: yolov5/hyp_evolution.md

|

- PyTorch Hub: yolov5/tutorials/pytorch_hub_model_loading.md

|

||||||

- Transfer learning with frozen layers: yolov5/transfer_learn_frozen.md

|

- TFLite, ONNX, CoreML, TensorRT Export: yolov5/tutorials/model_export.md

|

||||||

- Architecture Summary: yolov5/architecture.md

|

- NVIDIA Jetson Nano Deployment: yolov5/tutorials/running_on_jetson_nano.md

|

||||||

- Roboflow Datasets: yolov5/roboflow.md

|

- Test-Time Augmentation (TTA): yolov5/tutorials/test_time_augmentation.md

|

||||||

- Neural Magic's DeepSparse: yolov5/neural_magic.md

|

- Model Ensembling: yolov5/tutorials/model_ensembling.md

|

||||||

- Comet Logging: yolov5/comet.md

|