ultralytics 8.0.106 (#2736)

Signed-off-by: dependabot[bot] <support@github.com> Co-authored-by: vyskocj <whiskey1939@seznam.cz> Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com> Co-authored-by: triple Mu <gpu@163.com> Co-authored-by: Ayush Chaurasia <ayush.chaurarsia@gmail.com> Co-authored-by: pre-commit-ci[bot] <66853113+pre-commit-ci[bot]@users.noreply.github.com> Co-authored-by: Laughing <61612323+Laughing-q@users.noreply.github.com>

This commit is contained in:

@ -1,7 +1,78 @@

|

||||

---

|

||||

comments: true

|

||||

description: Learn about the Caltech-101 dataset, a collection of images for object recognition tasks in machine learning and computer vision algorithms.

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

# Caltech-101 Dataset

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

The [Caltech-101](https://data.caltech.edu/records/mzrjq-6wc02) dataset is a widely used dataset for object recognition tasks, containing around 9,000 images from 101 object categories. The categories were chosen to reflect a variety of real-world objects, and the images themselves were carefully selected and annotated to provide a challenging benchmark for object recognition algorithms.

|

||||

|

||||

## Key Features

|

||||

|

||||

- The Caltech-101 dataset comprises around 9,000 color images divided into 101 categories.

|

||||

- The categories encompass a wide variety of objects, including animals, vehicles, household items, and people.

|

||||

- The number of images per category varies, with about 40 to 800 images in each category.

|

||||

- Images are of variable sizes, with most images being medium resolution.

|

||||

- Caltech-101 is widely used for training and testing in the field of machine learning, particularly for object recognition tasks.

|

||||

|

||||

## Dataset Structure

|

||||

|

||||

Unlike many other datasets, the Caltech-101 dataset is not formally split into training and testing sets. Users typically create their own splits based on their specific needs. However, a common practice is to use a random subset of images for training (e.g., 30 images per category) and the remaining images for testing.

|

||||

|

||||

## Applications

|

||||

|

||||

The Caltech-101 dataset is extensively used for training and evaluating deep learning models in object recognition tasks, such as Convolutional Neural Networks (CNNs), Support Vector Machines (SVMs), and various other machine learning algorithms. Its wide variety of categories and high-quality images make it an excellent dataset for research and development in the field of machine learning and computer vision.

|

||||

|

||||

## Usage

|

||||

|

||||

To train a YOLO model on the Caltech-101 dataset for 100 epochs, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Train Example"

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='caltech101', epochs=100, imgsz=416)

|

||||

```

|

||||

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo detect train data=caltech101 model=yolov8n-cls.pt epochs=100 imgsz=416

|

||||

```

|

||||

|

||||

## Sample Images and Annotations

|

||||

|

||||

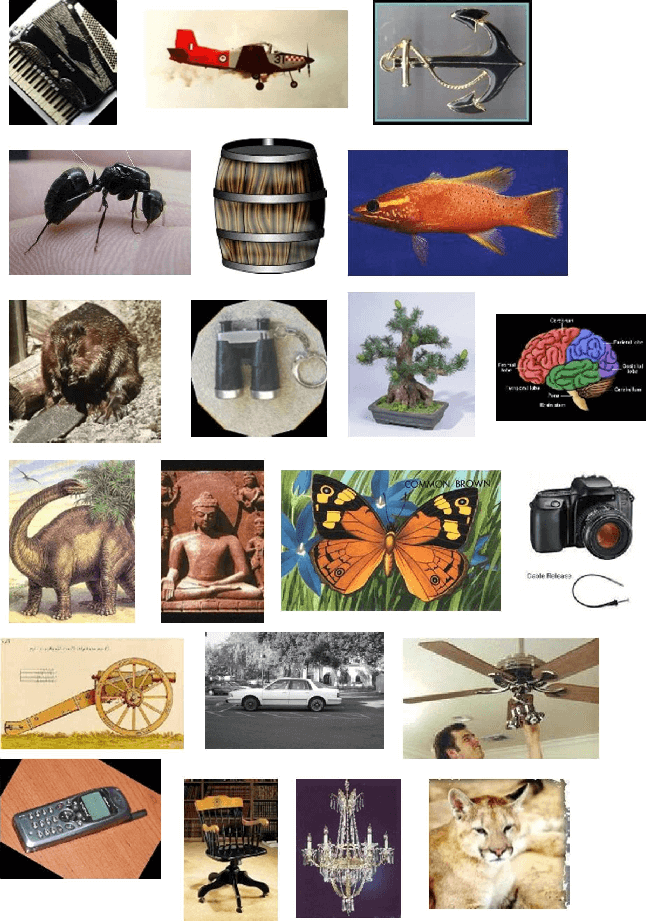

The Caltech-101 dataset contains high-quality color images of various objects, providing a well-structured dataset for object recognition tasks. Here are some examples of images from the dataset:

|

||||

|

||||

|

||||

|

||||

The example showcases the variety and complexity of the objects in the Caltech-101 dataset, emphasizing the significance of a diverse dataset for training robust object recognition models.

|

||||

|

||||

## Citations and Acknowledgments

|

||||

|

||||

If you use the Caltech-101 dataset in your research or development work, please cite the following paper:

|

||||

|

||||

```bibtex

|

||||

@article{fei2007learning,

|

||||

title={Learning generative visual models from few training examples: An incremental Bayesian approach tested on 101 object categories},

|

||||

author={Fei-Fei, Li and Fergus, Rob and Perona, Pietro},

|

||||

journal={Computer vision and Image understanding},

|

||||

volume={106},

|

||||

number={

|

||||

|

||||

1},

|

||||

pages={59--70},

|

||||

year={2007},

|

||||

publisher={Elsevier}

|

||||

}

|

||||

```

|

||||

|

||||

We would like to acknowledge Li Fei-Fei, Rob Fergus, and Pietro Perona for creating and maintaining the Caltech-101 dataset as a valuable resource for the machine learning and computer vision research community. For more information about the Caltech-101 dataset and its creators, visit the [Caltech-101 dataset website](https://data.caltech.edu/records/mzrjq-6wc02).

|

||||

@ -1,7 +1,73 @@

|

||||

---

|

||||

comments: true

|

||||

description: Learn about the Caltech-256 dataset, a broad collection of images used for object classification tasks in machine learning and computer vision algorithms.

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

# Caltech-256 Dataset

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

The [Caltech-256](https://data.caltech.edu/records/nyy15-4j048) dataset is an extensive collection of images used for object classification tasks. It contains around 30,000 images divided into 257 categories (256 object categories and 1 background category). The images are carefully curated and annotated to provide a challenging and diverse benchmark for object recognition algorithms.

|

||||

|

||||

## Key Features

|

||||

|

||||

- The Caltech-256 dataset comprises around 30,000 color images divided into 257 categories.

|

||||

- Each category contains a minimum of 80 images.

|

||||

- The categories encompass a wide variety of real-world objects, including animals, vehicles, household items, and people.

|

||||

- Images are of variable sizes and resolutions.

|

||||

- Caltech-256 is widely used for training and testing in the field of machine learning, particularly for object recognition tasks.

|

||||

|

||||

## Dataset Structure

|

||||

|

||||

Like Caltech-101, the Caltech-256 dataset does not have a formal split between training and testing sets. Users typically create their own splits according to their specific needs. A common practice is to use a random subset of images for training and the remaining images for testing.

|

||||

|

||||

## Applications

|

||||

|

||||

The Caltech-256 dataset is extensively used for training and evaluating deep learning models in object recognition tasks, such as Convolutional Neural Networks (CNNs), Support Vector Machines (SVMs), and various other machine learning algorithms. Its diverse set of categories and high-quality images make it an invaluable dataset for research and development in the field of machine learning and computer vision.

|

||||

|

||||

## Usage

|

||||

|

||||

To train a YOLO model on the Caltech-256 dataset for 100 epochs, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Train Example"

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='caltech256', epochs=100, imgsz=416)

|

||||

```

|

||||

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo detect train data=caltech256 model=yolov8n-cls.pt epochs=100 imgsz=416

|

||||

```

|

||||

|

||||

## Sample Images and Annotations

|

||||

|

||||

The Caltech-256 dataset contains high-quality color images of various objects, providing a comprehensive dataset for object recognition tasks. Here are some examples of images from the dataset ([credit](https://ml4a.github.io/demos/tsne_viewer.html)):

|

||||

|

||||

|

||||

|

||||

The example showcases the diversity and complexity of the objects in the Caltech-256 dataset, emphasizing the importance of a varied dataset for training robust object recognition models.

|

||||

|

||||

## Citations and Acknowledgments

|

||||

|

||||

If you use the Caltech-256 dataset in your research or development work, please cite the following paper:

|

||||

|

||||

```bibtex

|

||||

@article{griffin2007caltech,

|

||||

title={Caltech-256 object category dataset},

|

||||

author={Griffin, Gregory and Holub, Alex and Perona, Pietro},

|

||||

year={2007}

|

||||

}

|

||||

```

|

||||

|

||||

We would like to acknowledge Gregory Griffin, Alex Holub, and Pietro Perona for creating and maintaining the Caltech-256 dataset as a valuable resource for the machine learning and computer vision research community. For more information about the

|

||||

|

||||

Caltech-256 dataset and its creators, visit the [Caltech-256 dataset website](https://data.caltech.edu/records/nyy15-4j048).

|

||||

@ -1,7 +1,75 @@

|

||||

---

|

||||

comments: true

|

||||

description: Learn about the CIFAR-10 dataset, a collection of images that are commonly used to train machine learning and computer vision algorithms.

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

# CIFAR-10 Dataset

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

The [CIFAR-10](https://www.cs.toronto.edu/~kriz/cifar.html) (Canadian Institute For Advanced Research) dataset is a collection of images used widely for machine learning and computer vision algorithms. It was developed by researchers at the CIFAR institute and consists of 60,000 32x32 color images in 10 different classes.

|

||||

|

||||

## Key Features

|

||||

|

||||

- The CIFAR-10 dataset consists of 60,000 images, divided into 10 classes.

|

||||

- Each class contains 6,000 images, split into 5,000 for training and 1,000 for testing.

|

||||

- The images are colored and of size 32x32 pixels.

|

||||

- The 10 different classes represent airplanes, cars, birds, cats, deer, dogs, frogs, horses, ships, and trucks.

|

||||

- CIFAR-10 is commonly used for training and testing in the field of machine learning and computer vision.

|

||||

|

||||

## Dataset Structure

|

||||

|

||||

The CIFAR-10 dataset is split into two subsets:

|

||||

|

||||

1. **Training Set**: This subset contains 50,000 images used for training machine learning models.

|

||||

2. **Testing Set**: This subset consists of 10,000 images used for testing and benchmarking the trained models.

|

||||

|

||||

## Applications

|

||||

|

||||

The CIFAR-10 dataset is widely used for training and evaluating deep learning models in image classification tasks, such as Convolutional Neural Networks (CNNs), Support Vector Machines (SVMs), and various other machine learning algorithms. The diversity of the dataset in terms of classes and the presence of color images make it a well-rounded dataset for research and development in the field of machine learning and computer vision.

|

||||

|

||||

## Usage

|

||||

|

||||

To train a YOLO model on the CIFAR-10 dataset for 100 epochs with an image size of 32x32, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Train Example"

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='cifar10', epochs=100, imgsz=32)

|

||||

```

|

||||

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo detect train data=cifar10 model=yolov8n-cls.pt epochs=100 imgsz=32

|

||||

```

|

||||

|

||||

## Sample Images and Annotations

|

||||

|

||||

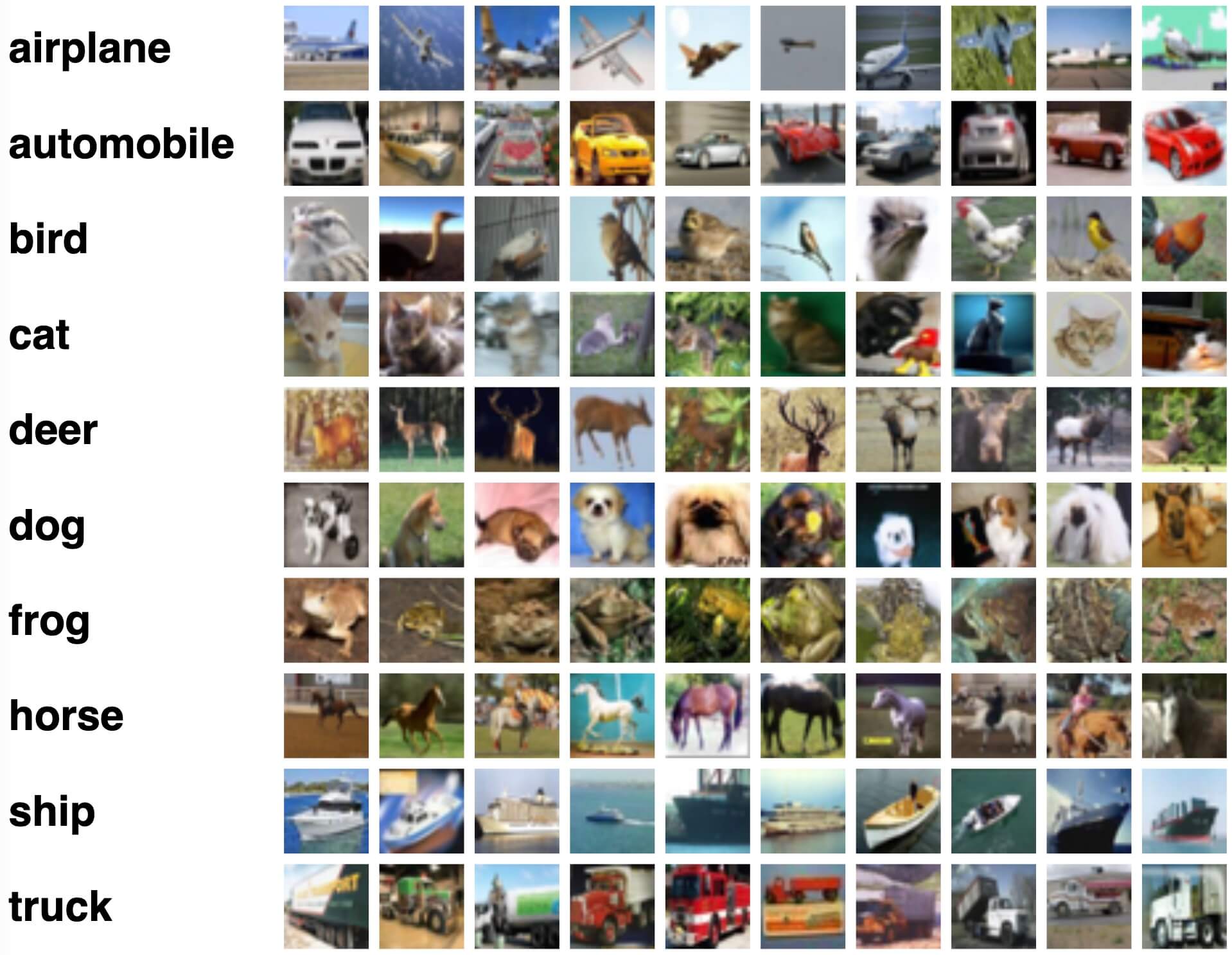

The CIFAR-10 dataset contains color images of various objects, providing a well-structured dataset for image classification tasks. Here are some examples of images from the dataset:

|

||||

|

||||

|

||||

|

||||

The example showcases the variety and complexity of the objects in the CIFAR-10 dataset, highlighting the importance of a diverse dataset for training robust image classification models.

|

||||

|

||||

## Citations and Acknowledgments

|

||||

|

||||

If you use the CIFAR-10 dataset in your research or development work, please cite the following paper:

|

||||

|

||||

```bibtex

|

||||

@TECHREPORT{Krizhevsky09learningmultiple,

|

||||

author = {Alex Krizhevsky},

|

||||

title = {Learning multiple layers of features from tiny images},

|

||||

institution = {},

|

||||

year = {2009}

|

||||

}

|

||||

```

|

||||

|

||||

We would like to acknowledge Alex Krizhevsky for creating and maintaining the CIFAR-10 dataset as a valuable resource for the machine learning and computer vision research community. For more information about the CIFAR-10 dataset and its creator, visit the [CIFAR-10 dataset website](https://www.cs.toronto.edu/~kriz/cifar.html).

|

||||

@ -1,7 +1,75 @@

|

||||

---

|

||||

comments: true

|

||||

description: Learn about the CIFAR-100 dataset, a collection of images that are commonly used to train machine learning and computer vision algorithms.

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

# CIFAR-100 Dataset

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

The [CIFAR-100](https://www.cs.toronto.edu/~kriz/cifar.html) (Canadian Institute For Advanced Research) dataset is a significant extension of the CIFAR-10 dataset, composed of 60,000 32x32 color images in 100 different classes. It was developed by researchers at the CIFAR institute, offering a more challenging dataset for more complex machine learning and computer vision tasks.

|

||||

|

||||

## Key Features

|

||||

|

||||

- The CIFAR-100 dataset consists of 60,000 images, divided into 100 classes.

|

||||

- Each class contains 600 images, split into 500 for training and 100 for testing.

|

||||

- The images are colored and of size 32x32 pixels.

|

||||

- The 100 different classes are grouped into 20 coarse categories for higher level classification.

|

||||

- CIFAR-100 is commonly used for training and testing in the field of machine learning and computer vision.

|

||||

|

||||

## Dataset Structure

|

||||

|

||||

The CIFAR-100 dataset is split into two subsets:

|

||||

|

||||

1. **Training Set**: This subset contains 50,000 images used for training machine learning models.

|

||||

2. **Testing Set**: This subset consists of 10,000 images used for testing and benchmarking the trained models.

|

||||

|

||||

## Applications

|

||||

|

||||

The CIFAR-100 dataset is extensively used for training and evaluating deep learning models in image classification tasks, such as Convolutional Neural Networks (CNNs), Support Vector Machines (SVMs), and various other machine learning algorithms. The diversity of the dataset in terms of classes and the presence of color images make it a more challenging and comprehensive dataset for research and development in the field of machine learning and computer vision.

|

||||

|

||||

## Usage

|

||||

|

||||

To train a YOLO model on the CIFAR-100 dataset for 100 epochs with an image size of 32x32, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Train Example"

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='cifar100', epochs=100, imgsz=32)

|

||||

```

|

||||

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo detect train data=cifar100 model=yolov8n-cls.pt epochs=100 imgsz=32

|

||||

```

|

||||

|

||||

## Sample Images and Annotations

|

||||

|

||||

The CIFAR-100 dataset contains color images of various objects, providing a well-structured dataset for image classification tasks. Here are some examples of images from the dataset:

|

||||

|

||||

|

||||

|

||||

The example showcases the variety and complexity of the objects in the CIFAR-100 dataset, highlighting the importance of a diverse dataset for training robust image classification models.

|

||||

|

||||

## Citations and Acknowledgments

|

||||

|

||||

If you use the CIFAR-100 dataset in your research or development work, please cite the following paper:

|

||||

|

||||

```bibtex

|

||||

@TECHREPORT{Krizhevsky09learningmultiple,

|

||||

author = {Alex Krizhevsky},

|

||||

title = {Learning multiple layers of features from tiny images},

|

||||

institution = {},

|

||||

year = {2009}

|

||||

}

|

||||

```

|

||||

|

||||

We would like to acknowledge Alex Krizhevsky for creating and maintaining the CIFAR-100 dataset as a valuable resource for the machine learning and computer vision research community. For more information about the CIFAR-100 dataset and its creator, visit the [CIFAR-100 dataset website](https://www.cs.toronto.edu/~kriz/cifar.html).

|

||||

@ -1,7 +1,78 @@

|

||||

---

|

||||

comments: true

|

||||

description: Learn about the Fashion-MNIST dataset, a large database of Zalando's article images used for training various image processing systems and machine learning models.

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

# Fashion-MNIST Dataset

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

The [Fashion-MNIST](https://github.com/zalandoresearch/fashion-mnist) dataset is a database of Zalando's article images—consisting of a training set of 60,000 examples and a test set of 10,000 examples. Each example is a 28x28 grayscale image, associated with a label from 10 classes. Fashion-MNIST is intended to serve as a direct drop-in replacement for the original MNIST dataset for benchmarking machine learning algorithms.

|

||||

|

||||

## Key Features

|

||||

|

||||

- Fashion-MNIST contains 60,000 training images and 10,000 testing images of Zalando's article images.

|

||||

- The dataset comprises grayscale images of size 28x28 pixels.

|

||||

- Each pixel has a single pixel-value associated with it, indicating the lightness or darkness of that pixel, with higher numbers meaning darker. This pixel-value is an integer between 0 and 255.

|

||||

- Fashion-MNIST is widely used for training and testing in the field of machine learning, especially for image classification tasks.

|

||||

|

||||

## Dataset Structure

|

||||

|

||||

The Fashion-MNIST dataset is split into two subsets:

|

||||

|

||||

1. **Training Set**: This subset contains 60,000 images used for training machine learning models.

|

||||

2. **Testing Set**: This subset consists of 10,000 images used for testing and benchmarking the trained models.

|

||||

|

||||

## Labels

|

||||

|

||||

Each training and test example is assigned to one of the following labels:

|

||||

|

||||

0. T-shirt/top

|

||||

1. Trouser

|

||||

2. Pullover

|

||||

3. Dress

|

||||

4. Coat

|

||||

5. Sandal

|

||||

6. Shirt

|

||||

7. Sneaker

|

||||

8. Bag

|

||||

9. Ankle boot

|

||||

|

||||

## Applications

|

||||

|

||||

The Fashion-MNIST dataset is widely used for training and evaluating deep learning models in image classification tasks, such as Convolutional Neural Networks (CNNs), Support Vector Machines (SVMs), and various other machine learning algorithms. The dataset's simple and well-structured format makes it an essential resource for researchers and practitioners in the field of machine learning and computer vision.

|

||||

|

||||

## Usage

|

||||

|

||||

To train a CNN model on the Fashion-MNIST dataset for 100 epochs with an image size of 28x28, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Train Example"

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='fashion-mnist', epochs=100, imgsz=28)

|

||||

```

|

||||

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo detect train data=fashion-mnist model=yolov8n-cls.pt epochs=100 imgsz=28

|

||||

```

|

||||

|

||||

## Sample Images and Annotations

|

||||

|

||||

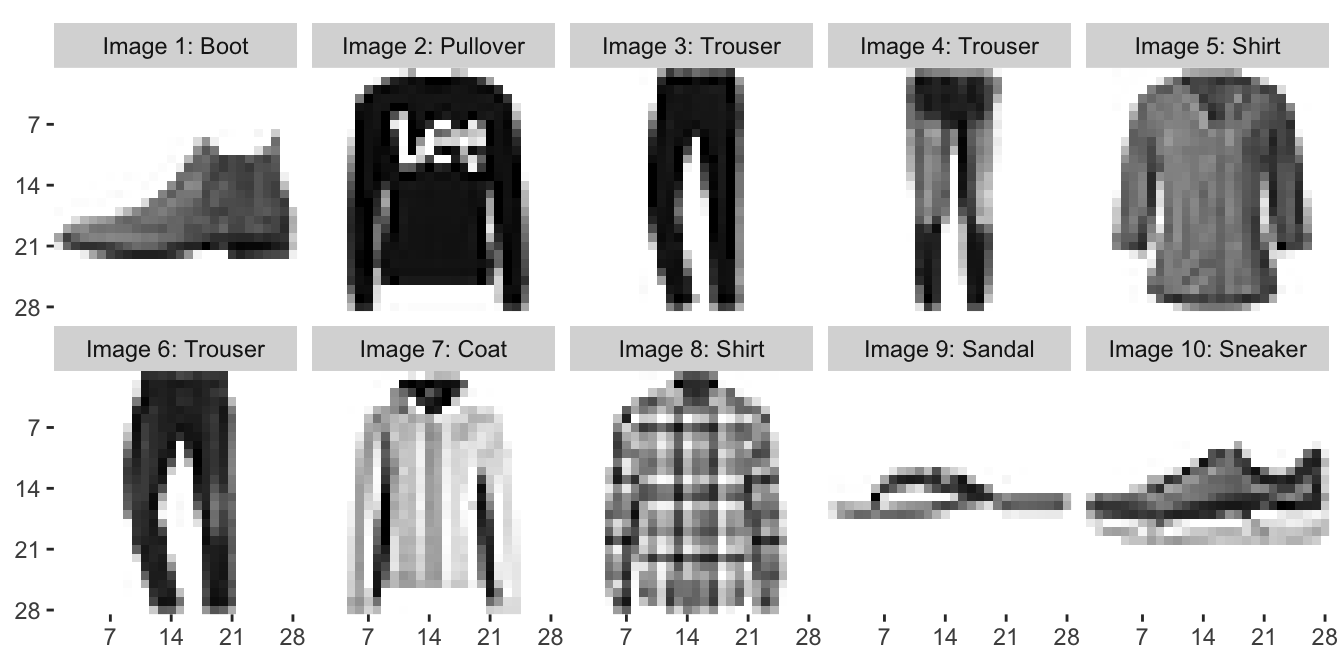

The Fashion-MNIST dataset contains grayscale images of Zalando's article images, providing a well-structured dataset for image classification tasks. Here are some examples of images from the dataset:

|

||||

|

||||

|

||||

|

||||

The example showcases the variety and complexity of the images in the Fashion-MNIST dataset, highlighting the importance of a diverse dataset for training robust image classification models.

|

||||

|

||||

## Acknowledgments

|

||||

|

||||

If you use the Fashion-MNIST dataset in your research or development work, please acknowledge the dataset by linking to the [GitHub repository](https://github.com/zalandoresearch/fashion-mnist). This dataset was made available by Zalando Research.

|

||||

|

||||

@ -1,7 +1,80 @@

|

||||

---

|

||||

comments: true

|

||||

description: Learn about the ImageNet dataset, a large-scale database of annotated images commonly used for training deep learning models in computer vision tasks.

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

# ImageNet Dataset

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

[ImageNet](https://www.image-net.org/) is a large-scale database of annotated images designed for use in visual object recognition research. It contains over 14 million images, with each image annotated using WordNet synsets, making it one of the most extensive resources available for training deep learning models in computer vision tasks.

|

||||

|

||||

## Key Features

|

||||

|

||||

- ImageNet contains over 14 million high-resolution images spanning thousands of object categories.

|

||||

- The dataset is organized according to the WordNet hierarchy, with each synset representing a category.

|

||||

- ImageNet is widely used for training and benchmarking in the field of computer vision, particularly for image classification and object detection tasks.

|

||||

- The annual ImageNet Large Scale Visual Recognition Challenge (ILSVRC) has been instrumental in advancing computer vision research.

|

||||

|

||||

## Dataset Structure

|

||||

|

||||

The ImageNet dataset is organized using the WordNet hierarchy. Each node in the hierarchy represents a category, and each category is described by a synset (a collection of synonymous terms). The images in ImageNet are annotated with one or more synsets, providing a rich resource for training models to recognize various objects and their relationships.

|

||||

|

||||

## ImageNet Large Scale Visual Recognition Challenge (ILSVRC)

|

||||

|

||||

The annual [ImageNet Large Scale Visual Recognition Challenge (ILSVRC)](http://image-net.org/challenges/LSVRC/) has been an important event in the field of computer vision. It has provided a platform for researchers and developers to evaluate their algorithms and models on a large-scale dataset with standardized evaluation metrics. The ILSVRC has led to significant advancements in the development of deep learning models for image classification, object detection, and other computer vision tasks.

|

||||

|

||||

## Applications

|

||||

|

||||

The ImageNet dataset is widely used for training and evaluating deep learning models in various computer vision tasks, such as image classification, object detection, and object localization. Some popular deep learning architectures, such as AlexNet, VGG, and ResNet, were developed and benchmarked using the ImageNet dataset.

|

||||

|

||||

## Usage

|

||||

|

||||

To train a deep learning model on the ImageNet dataset for 100 epochs with an image size of 224x224, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Train Example"

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='imagenet', epochs=100, imgsz=224)

|

||||

```

|

||||

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo train data=imagenet model=yolov8n-cls.pt epochs=100 imgsz=224

|

||||

```

|

||||

|

||||

## Sample Images and Annotations

|

||||

|

||||

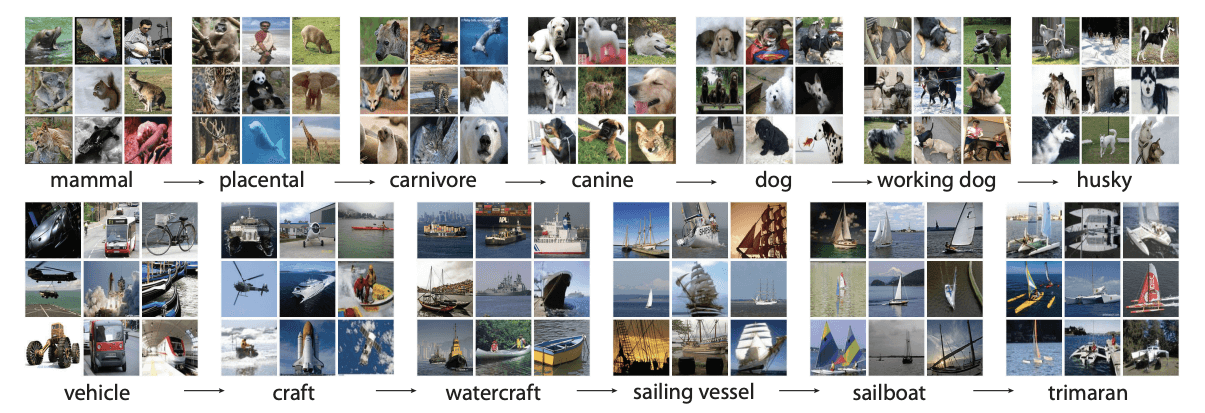

The ImageNet dataset contains high-resolution images spanning thousands of object categories, providing a diverse and extensive dataset for training and evaluating computer vision models. Here are some examples of images from the dataset:

|

||||

|

||||

|

||||

|

||||

The example showcases the variety and complexity of the images in the ImageNet dataset, highlighting the importance of a diverse dataset for training robust computer vision models.

|

||||

|

||||

## Citations and Acknowledgments

|

||||

|

||||

If you use the ImageNet dataset in your research or development work, please cite the following paper:

|

||||

|

||||

```bibtex

|

||||

@article{ILSVRC15,

|

||||

Author = {Olga Russakovsky

|

||||

|

||||

and Jia Deng and Hao Su and Jonathan Krause and Sanjeev Satheesh and Sean Ma and Zhiheng Huang and Andrej Karpathy and Aditya Khosla and Michael Bernstein and Alexander C. Berg and Li Fei-Fei},

|

||||

Title = { {ImageNet Large Scale Visual Recognition Challenge}},

|

||||

Year = {2015},

|

||||

journal = {International Journal of Computer Vision (IJCV)},

|

||||

volume={115},

|

||||

number={3},

|

||||

pages={211-252}

|

||||

}

|

||||

```

|

||||

|

||||

We would like to acknowledge the ImageNet team, led by Olga Russakovsky, Jia Deng, and Li Fei-Fei, for creating and maintaining the ImageNet dataset as a valuable resource for the machine learning and computer vision research community. For more information about the ImageNet dataset and its creators, visit the [ImageNet website](https://www.image-net.org/).

|

||||

@ -1,7 +1,77 @@

|

||||

---

|

||||

comments: true

|

||||

description: Learn about the ImageNet10 dataset, a compact subset of the original ImageNet dataset designed for quick testing, CI tests, and sanity checks.

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

# ImageNet10 Dataset

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

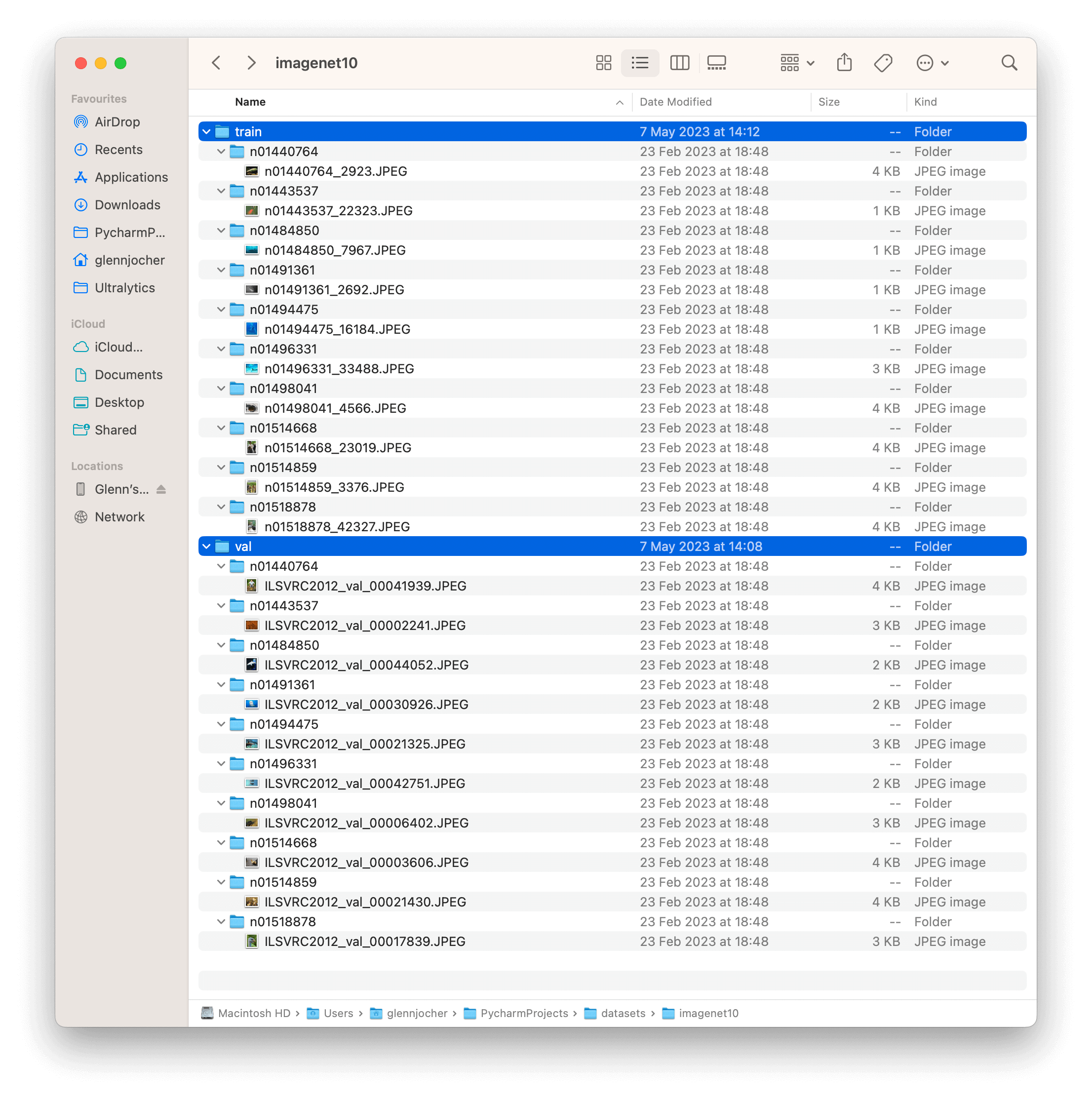

The [ImageNet10](https://github.com/ultralytics/yolov5/releases/download/v1.0/imagenet10.zip) dataset is a small-scale subset of the [ImageNet](https://www.image-net.org/) database, developed by [Ultralytics](https://ultralytics.com) and designed for CI tests, sanity checks, and fast testing of training pipelines. This dataset is composed of the first image in the training set and the first image from the validation set of the first 10 classes in ImageNet. Although significantly smaller, it retains the structure and diversity of the original ImageNet dataset.

|

||||

|

||||

## Key Features

|

||||

|

||||

- ImageNet10 is a compact version of ImageNet, with 20 images representing the first 10 classes of the original dataset.

|

||||

- The dataset is organized according to the WordNet hierarchy, mirroring the structure of the full ImageNet dataset.

|

||||

- It is ideally suited for CI tests, sanity checks, and rapid testing of training pipelines in computer vision tasks.

|

||||

- Although not designed for model benchmarking, it can provide a quick indication of a model's basic functionality and correctness.

|

||||

|

||||

## Dataset Structure

|

||||

|

||||

The ImageNet10 dataset, like the original ImageNet, is organized using the WordNet hierarchy. Each of the 10 classes in ImageNet10 is described by a synset (a collection of synonymous terms). The images in ImageNet10 are annotated with one or more synsets, providing a compact resource for testing models to recognize various objects and their relationships.

|

||||

|

||||

## Applications

|

||||

|

||||

The ImageNet10 dataset is useful for quickly testing and debugging computer vision models and pipelines. Its small size allows for rapid iteration, making it ideal for continuous integration tests and sanity checks. It can also be used for fast preliminary testing of new models or changes to existing models before moving on to full-scale testing with the complete ImageNet dataset.

|

||||

|

||||

## Usage

|

||||

|

||||

To test a deep learning model on the ImageNet10 dataset with an image size of 224x224, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Test Example"

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='imagenet10', epochs=5, imgsz=224)

|

||||

```

|

||||

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo train data=imagenet10 model=yolov8n-cls.pt epochs=5 imgsz=224

|

||||

```

|

||||

|

||||

## Sample Images and Annotations

|

||||

|

||||

The ImageNet10 dataset contains a subset of images from the original ImageNet dataset. These images are chosen to represent the first 10 classes in the dataset, providing a diverse yet compact dataset for quick testing and evaluation.

|

||||

|

||||

|

||||

The example showcases the variety and complexity of the images in the ImageNet10 dataset, highlighting its usefulness for sanity checks and quick testing of computer vision models.

|

||||

|

||||

## Citations and Acknowledgments

|

||||

|

||||

If you use the ImageNet10 dataset in your research or development work, please cite the original ImageNet paper:

|

||||

|

||||

```bibtex

|

||||

@article{ILSVRC15,

|

||||

Author = {Olga Russakovsky

|

||||

|

||||

and Jia Deng and Hao Su and Jonathan Krause and Sanjeev Satheesh and Sean Ma and Zhiheng Huang and Andrej Karpathy and Aditya Khosla and Michael Bernstein and Alexander C. Berg and Li Fei-Fei},

|

||||

Title = { {ImageNet Large

|

||||

|

||||

Scale Visual Recognition Challenge}},

|

||||

Year = {2015},

|

||||

journal = {International Journal of Computer Vision (IJCV)},

|

||||

volume={115},

|

||||

number={3},

|

||||

pages={211-252}

|

||||

}

|

||||

```

|

||||

|

||||

We would like to acknowledge the ImageNet team, led by Olga Russakovsky, Jia Deng, and Li Fei-Fei, for creating and maintaining the ImageNet dataset. The ImageNet10 dataset, while a compact subset, is a valuable resource for quick testing and debugging in the machine learning and computer vision research community. For more information about the ImageNet dataset and its creators, visit the [ImageNet website](https://www.image-net.org/).

|

||||

@ -1,7 +1,112 @@

|

||||

---

|

||||

comments: true

|

||||

description: Learn about the ImageNette dataset, a subset of 10 easily classified classes from the Imagenet dataset commonly used for training various image processing systems and machine learning models.

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

# ImageNette Dataset

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

The [ImageNette](https://github.com/fastai/imagenette) dataset is a subset of the larger [Imagenet](http://www.image-net.org/) dataset, but it only includes 10 easily distinguishable classes. It was created to provide a quicker, easier-to-use version of Imagenet for software development and education.

|

||||

|

||||

## Key Features

|

||||

|

||||

- ImageNette contains images from 10 different classes such as tench, English springer, cassette player, chain saw, church, French horn, garbage truck, gas pump, golf ball, parachute.

|

||||

- The dataset comprises colored images of varying dimensions.

|

||||

- ImageNette is widely used for training and testing in the field of machine learning, especially for image classification tasks.

|

||||

|

||||

## Dataset Structure

|

||||

|

||||

The ImageNette dataset is split into two subsets:

|

||||

|

||||

1. **Training Set**: This subset contains several thousands of images used for training machine learning models. The exact number varies per class.

|

||||

2. **Validation Set**: This subset consists of several hundreds of images used for validating and benchmarking the trained models. Again, the exact number varies per class.

|

||||

|

||||

## Applications

|

||||

|

||||

The ImageNette dataset is widely used for training and evaluating deep learning models in image classification tasks, such as Convolutional Neural Networks (CNNs), and various other machine learning algorithms. The dataset's straightforward format and well-chosen classes make it a handy resource for both beginner and experienced practitioners in the field of machine learning and computer vision.

|

||||

|

||||

## Usage

|

||||

|

||||

To train a model on the ImageNette dataset for 100 epochs with a standard image size of 224x224, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Train Example"

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='imagenette', epochs=100, imgsz=224)

|

||||

```

|

||||

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo detect train data=imagenette model=yolov8n-cls.pt epochs=100 imgsz=224

|

||||

```

|

||||

|

||||

## Sample Images and Annotations

|

||||

|

||||

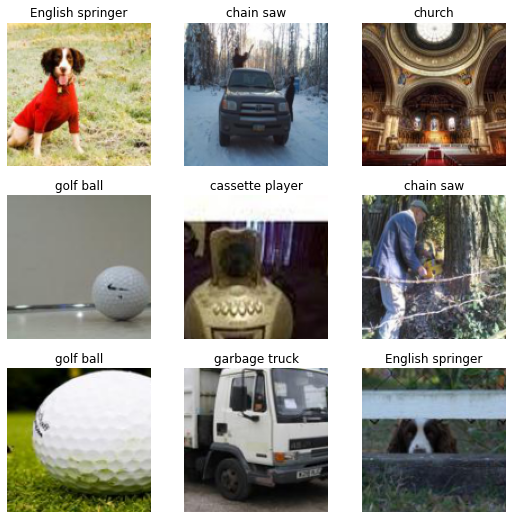

The ImageNette dataset contains colored images of various objects and scenes, providing a diverse dataset for image classification tasks. Here are some examples of images from the dataset:

|

||||

|

||||

|

||||

|

||||

The example showcases the variety and complexity of the images in the ImageNette dataset, highlighting the importance of a diverse dataset for training robust image classification models.

|

||||

|

||||

## ImageNette160 and ImageNette320

|

||||

|

||||

For faster prototyping and training, the ImageNette dataset is also available in two reduced sizes: ImageNette160 and ImageNette320. These datasets maintain the same classes and structure as the full ImageNette dataset, but the images are resized to a smaller dimension. As such, these versions of the dataset are particularly useful for preliminary model testing, or when computational resources are limited.

|

||||

|

||||

To use these datasets, simply replace 'imagenette' with 'imagenette160' or 'imagenette320' in the training command. The following code snippets illustrate this:

|

||||

|

||||

!!! example "Train Example with ImageNette160"

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model with ImageNette160

|

||||

model.train(data='imagenette160', epochs=100, imgsz=160)

|

||||

```

|

||||

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model with ImageNette160

|

||||

yolo detect train data=imagenette160 model=yolov8n-cls.pt epochs=100 imgsz=160

|

||||

```

|

||||

|

||||

!!! example "Train Example with ImageNette320"

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model with ImageNette320

|

||||

model.train(data='imagenette320', epochs=100, imgsz=320)

|

||||

```

|

||||

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model with ImageNette320

|

||||

yolo detect train data=imagenette320 model=yolov8n-cls.pt epochs=100 imgsz=320

|

||||

```

|

||||

|

||||

These smaller versions of the dataset allow for rapid iterations during the development process while still providing valuable and realistic image classification tasks.

|

||||

|

||||

## Citations and Acknowledgments

|

||||

|

||||

If you use the ImageNette dataset in your research or development work, please acknowledge it appropriately. For more information about the ImageNette dataset, visit the [ImageNette dataset GitHub page](https://github.com/fastai/imagenette).

|

||||

@ -1,7 +1,83 @@

|

||||

---

|

||||

comments: true

|

||||

description: Learn about the ImageWoof dataset, a subset of the ImageNet consisting of 10 challenging-to-classify dog breed classes.

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

# ImageWoof Dataset

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

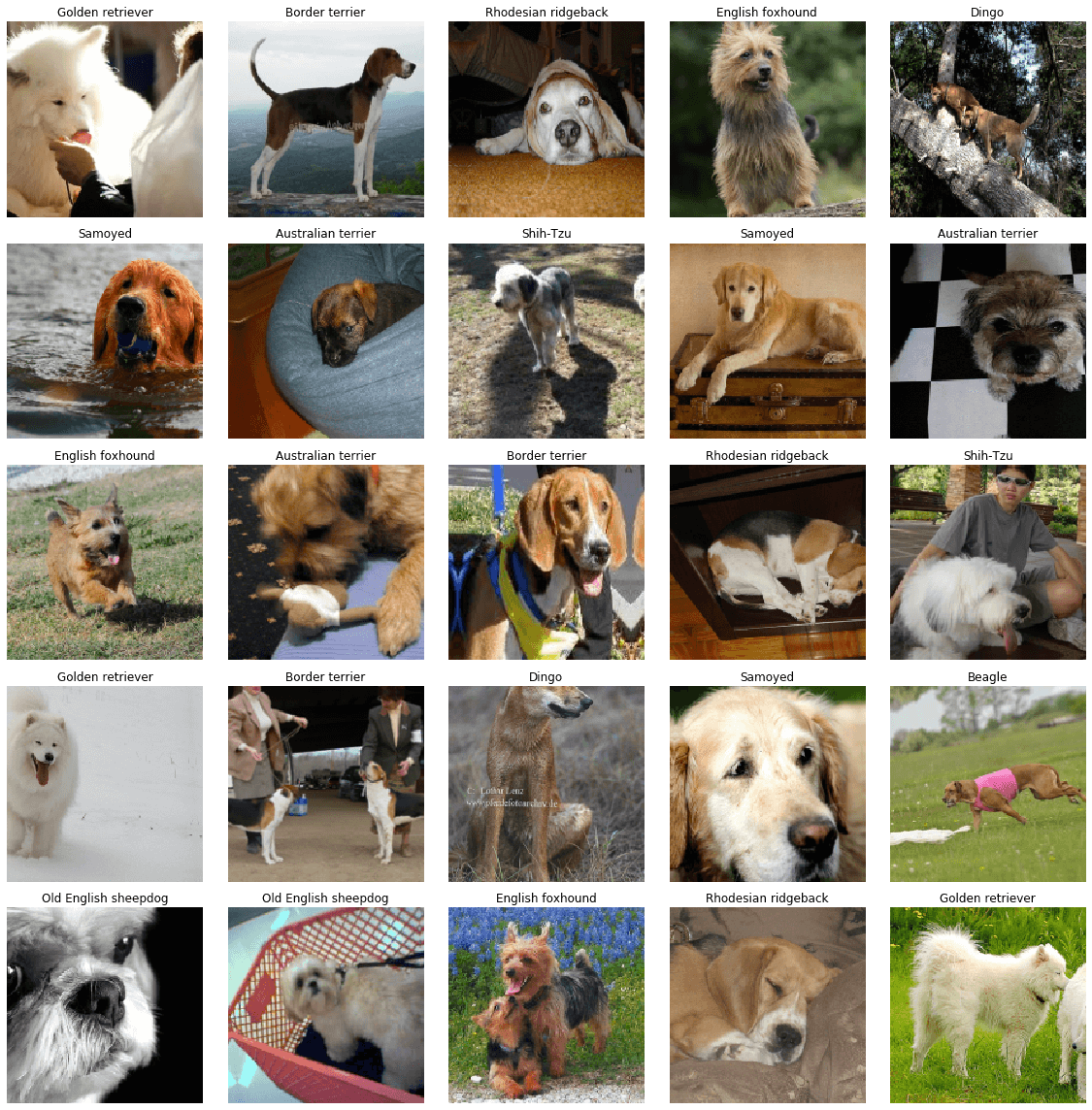

The [ImageWoof](https://github.com/fastai/imagenette) dataset is a subset of the ImageNet consisting of 10 classes that are challenging to classify, since they're all dog breeds. It was created as a more difficult task for image classification algorithms to solve, aiming at encouraging development of more advanced models.

|

||||

|

||||

## Key Features

|

||||

|

||||

- ImageWoof contains images of 10 different dog breeds: Australian terrier, Border terrier, Samoyed, Beagle, Shih-Tzu, English foxhound, Rhodesian ridgeback, Dingo, Golden retriever, and Old English sheepdog.

|

||||

- The dataset provides images at various resolutions (full size, 320px, 160px), accommodating for different computational capabilities and research needs.

|

||||

- It also includes a version with noisy labels, providing a more realistic scenario where labels might not always be reliable.

|

||||

|

||||

## Dataset Structure

|

||||

|

||||

The ImageWoof dataset structure is based on the dog breed classes, with each breed having its own directory of images.

|

||||

|

||||

## Applications

|

||||

|

||||

The ImageWoof dataset is widely used for training and evaluating deep learning models in image classification tasks, especially when it comes to more complex and similar classes. The dataset's challenge lies in the subtle differences between the dog breeds, pushing the limits of model's performance and generalization.

|

||||

|

||||

## Usage

|

||||

|

||||

To train a CNN model on the ImageWoof dataset for 100 epochs with an image size of 224x224, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Train Example"

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='imagewoof', epochs=100, imgsz=224)

|

||||

```

|

||||

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo detect train data=imagewoof model=yolov8n-cls.pt epochs=100 imgsz=224

|

||||

```

|

||||

|

||||

## Dataset Variants

|

||||

|

||||

ImageWoof dataset comes in three different sizes to accommodate various research needs and computational capabilities:

|

||||

|

||||

1. **Full Size (imagewoof)**: This is the original version of the ImageWoof dataset. It contains full-sized images and is ideal for final training and performance benchmarking.

|

||||

|

||||

2. **Medium Size (imagewoof320)**: This version contains images resized to have a maximum edge length of 320 pixels. It's suitable for faster training without significantly sacrificing model performance.

|

||||

|

||||

3. **Small Size (imagewoof160)**: This version contains images resized to have a maximum edge length of 160 pixels. It's designed for rapid prototyping and experimentation where training speed is a priority.

|

||||

|

||||

To use these variants in your training, simply replace 'imagewoof' in the dataset argument with 'imagewoof320' or 'imagewoof160'. For example:

|

||||

|

||||

```python

|

||||

# For medium-sized dataset

|

||||

model.train(data='imagewoof320', epochs=100, imgsz=224)

|

||||

|

||||

# For small-sized dataset

|

||||

model.train(data='imagewoof160', epochs=100, imgsz=224)

|

||||

```

|

||||

|

||||

It's important to note that using smaller images will likely yield lower performance in terms of classification accuracy. However, it's an excellent way to iterate quickly in the early stages of model development and prototyping.

|

||||

|

||||

## Sample Images and Annotations

|

||||

|

||||

The ImageWoof dataset contains colorful images of various dog breeds, providing a challenging dataset for image classification tasks. Here are some examples of images from the dataset:

|

||||

|

||||

|

||||

|

||||

The example showcases the subtle differences and similarities among the different dog breeds in the ImageWoof dataset, highlighting the complexity and difficulty of the classification task.

|

||||

|

||||

## Citations and Acknowledgments

|

||||

|

||||

If you use the ImageWoof dataset in your research or development work, please make sure to acknowledge the creators of the dataset by linking to the [official dataset repository](https://github.com/fastai/imagenette). As of my knowledge cutoff in September 2021, there is no official publication specifically about ImageWoof for citation.

|

||||

|

||||

We would like to acknowledge the FastAI team for creating and maintaining the ImageWoof dataset as a valuable resource for the machine learning and computer vision research community. For more information about the ImageWoof dataset, visit the [ImageWoof dataset repository](https://github.com/fastai/imagenette).

|

||||

@ -51,7 +51,7 @@ To train a CNN model on the MNIST dataset for 100 epochs with an image size of 3

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model

|

||||

cnn detect train data=MNIST.yaml model=cnn_mnist.pt epochs=100 imgsz=28

|

||||

cnn detect train data=mnist model=yolov8n-cls.pt epochs=100 imgsz=28

|

||||

```

|

||||

|

||||

## Sample Images and Annotations

|

||||

|

||||

@ -1,7 +1,90 @@

|

||||

---

|

||||

comments: true

|

||||

description: Learn about the COCO-Pose dataset, designed to encourage research on pose estimation tasks with standardized evaluation metrics.

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

# COCO-Pose Dataset

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

The [COCO-Pose](https://cocodataset.org/#keypoints-2017) dataset is a specialized version of the COCO (Common Objects in Context) dataset, designed for pose estimation tasks. It leverages the COCO Keypoints 2017 images and labels to enable the training of models like YOLO for pose estimation tasks.

|

||||

|

||||

|

||||

|

||||

## Key Features

|

||||

|

||||

- COCO-Pose builds upon the COCO Keypoints 2017 dataset which contains 200K images labeled with keypoints for pose estimation tasks.

|

||||

- The dataset supports 17 keypoints for human figures, facilitating detailed pose estimation.

|

||||

- Like COCO, it provides standardized evaluation metrics, including Object Keypoint Similarity (OKS) for pose estimation tasks, making it suitable for comparing model performance.

|

||||

|

||||

## Dataset Structure

|

||||

|

||||

The COCO-Pose dataset is split into three subsets:

|

||||

|

||||

1. **Train2017**: This subset contains a portion of the 118K images from the COCO dataset, annotated for training pose estimation models.

|

||||

2. **Val2017**: This subset has a selection of images used for validation purposes during model training.

|

||||

3. **Test2017**: This subset consists of images used for testing and benchmarking the trained models. Ground truth annotations for this subset are not publicly available, and the results are submitted to the [COCO evaluation server](https://competitions.codalab.org/competitions/5181) for performance evaluation.

|

||||

|

||||

## Applications

|

||||

|

||||

The COCO-Pose dataset is specifically used for training and evaluating deep learning models in keypoint detection and pose estimation tasks, such as OpenPose. The dataset's large number of annotated images and standardized evaluation metrics make it an essential resource for computer vision researchers and practitioners focused on pose estimation.

|

||||

|

||||

## Dataset YAML

|

||||

|

||||

A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the COCO-Pose dataset, the `coco-pose.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco-pose.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco-pose.yaml).

|

||||

|

||||

!!! example "ultralytics/datasets/coco-pose.yaml"

|

||||

|

||||

```yaml

|

||||

--8<-- "ultralytics/datasets/coco-pose.yaml"

|

||||

```

|

||||

|

||||

## Usage

|

||||

|

||||

To train a YOLOv8n-pose model on the COCO-Pose dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Train Example"

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n-pose.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='coco-pose.yaml', epochs=100, imgsz=640)

|

||||

```

|

||||

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo detect train data=coco-pose.yaml model=yolov8n.pt epochs=100 imgsz=640

|

||||

```

|

||||

|

||||

## Sample Images and Annotations

|

||||

|

||||

The COCO-Pose dataset contains a diverse set of images with human figures annotated with keypoints. Here are some examples of images from the dataset, along with their corresponding annotations:

|

||||

|

||||

|

||||

|

||||

- **Mosaiced Image**: This image demonstrates a training batch composed of mosaiced dataset images. Mosaicing is a technique used during training that combines multiple images into a single image to increase the variety of objects and scenes within each training batch. This helps improve the model's ability to generalize to different object sizes, aspect ratios, and contexts.

|

||||

|

||||

The example showcases the variety and complexity of the images in the COCO-Pose dataset and the benefits of using mosaicing during the training process.

|

||||

|

||||

## Citations and Acknowledgments

|

||||

|

||||

If you use the COCO-Pose dataset in your research or development work, please cite the following paper:

|

||||

|

||||

```bibtex

|

||||

@misc{lin2015microsoft,

|

||||

title={Microsoft COCO: Common Objects in Context},

|

||||

author={Tsung-Yi Lin and Michael Maire and Serge Belongie and Lubomir Bourdev and Ross Girshick and James Hays and Pietro Perona and Deva Ramanan and C. Lawrence Zitnick and Piotr Dollár},

|

||||

year={2015},

|

||||

eprint={1405.0312},

|

||||

archivePrefix={arXiv},

|

||||

primaryClass={cs.CV}

|

||||

}

|

||||

```

|

||||

|

||||

We would like to acknowledge the COCO Consortium for creating and maintaining this valuable resource for the computer vision community. For more information about the COCO-Pose dataset and its creators, visit the [COCO dataset website](https://cocodataset.org/#home).

|

||||

@ -28,7 +28,7 @@ A YAML (Yet Another Markup Language) file is used to define the dataset configur

|

||||

|

||||

## Usage

|

||||

|

||||

To train a YOLOv8n model on the COCO8-Pose dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

To train a YOLOv8n-pose model on the COCO8-Pose dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Train Example"

|

||||

|

||||

@ -38,7 +38,7 @@ To train a YOLOv8n model on the COCO8-Pose dataset for 100 epochs with an image

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

|

||||

model = YOLO('yolov8n-pose.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='coco8-pose.yaml', epochs=100, imgsz=640)

|

||||

|

||||

@ -1,7 +1,89 @@

|

||||

---

|

||||

comments: true

|

||||

description: Learn about the COCO-Seg dataset, designed for simple training of YOLO models on instance segmentation tasks.

|

||||

---

|

||||

|

||||

# 🚧 Page Under Construction ⚒

|

||||

# COCO-Seg Dataset

|

||||

|

||||

This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

|

||||

The [COCO-Seg](https://cocodataset.org/#home) dataset, an extension of the COCO (Common Objects in Context) dataset, is specially designed to aid research in object instance segmentation. It uses the same images as COCO but introduces more detailed segmentation annotations. This dataset is a crucial resource for researchers and developers working on instance segmentation tasks, especially for training YOLO models.

|

||||

|

||||

## Key Features

|

||||

|

||||

- COCO-Seg retains the original 330K images from COCO.

|

||||

- The dataset consists of the same 80 object categories found in the original COCO dataset.

|

||||

- Annotations now include more detailed instance segmentation masks for each object in the images.

|

||||

- COCO-Seg provides standardized evaluation metrics like mean Average Precision (mAP) for object detection, and mean Average Recall (mAR) for instance segmentation tasks, enabling effective comparison of model performance.

|

||||

|

||||

## Dataset Structure

|

||||

|

||||

The COCO-Seg dataset is partitioned into three subsets:

|

||||

|

||||

1. **Train2017**: This subset contains 118K images for training instance segmentation models.

|

||||

2. **Val2017**: This subset includes 5K images used for validation purposes during model training.

|

||||

3. **Test2017**: This subset encompasses 20K images used for testing and benchmarking the trained models. Ground truth annotations for this subset are not publicly available, and the results are submitted to the [COCO evaluation server](https://competitions.codalab.org/competitions/5181) for performance evaluation.

|

||||

|

||||

## Applications

|

||||

|

||||

COCO-Seg is widely used for training and evaluating deep learning models in instance segmentation, such as the YOLO models. The large number of annotated images, the diversity of object categories, and the standardized evaluation metrics make it an indispensable resource for computer vision researchers and practitioners.

|

||||

|

||||

## Dataset YAML

|

||||

|

||||

A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the COCO-Seg dataset, the `coco.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco.yaml).

|

||||

|

||||

!!! example "ultralytics/datasets/coco.yaml"

|

||||

|

||||

```yaml

|

||||

--8<-- "ultralytics/datasets/coco.yaml"

|

||||

```

|

||||

|

||||

## Usage

|

||||

|

||||

To train a YOLOv8n-seg model on the COCO-Seg dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Train Example"

|

||||

|

||||

=== "Python"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n-seg.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='coco-seg.yaml', epochs=100, imgsz=640)

|

||||

```

|

||||

|

||||

=== "CLI"

|

||||

|

||||

```bash

|

||||

# Start training from a pretrained *.pt model

|

||||

yolo detect train data=coco-seg.yaml model=yolov8n.pt epochs=100 imgsz=640

|

||||

```

|

||||

|

||||

## Sample Images and Annotations

|

||||

|

||||

COCO-Seg, like its predecessor COCO, contains a diverse set of images with various object categories and complex scenes. However, COCO-Seg introduces more detailed instance segmentation masks for each object in the images. Here are some examples of images from the dataset, along with their corresponding instance segmentation masks:

|

||||

|

||||

|

||||

|

||||

- **Mosaiced Image**: This image demonstrates a training batch composed of mosaiced dataset images. Mosaicing is a technique used during training that combines multiple images into a single image to increase the variety of objects and scenes within each training batch. This aids the model's ability to generalize to different object sizes, aspect ratios, and contexts.

|

||||

|

||||

The example showcases the variety and complexity of the images in the COCO-Seg dataset and the benefits of using mosaicing during the training process.

|

||||

|

||||

## Citations and Acknowledgments

|

||||

|

||||

If you use the COCO-Seg dataset in your research or development work, please cite the original COCO paper and acknowledge the extension to COCO-Seg:

|

||||

|

||||

```bibtex

|

||||

@misc{lin2015microsoft,

|

||||

title={Microsoft COCO: Common Objects in Context},

|

||||

author={Tsung-Yi Lin and Michael Maire and Serge Belongie and Lubomir Bourdev and Ross Girshick and James Hays and Pietro Perona and Deva Ramanan and C. Lawrence Zitnick and Piotr Dollár},

|

||||

year={2015},

|

||||

eprint={1405.0312},

|

||||

archivePrefix={arXiv},

|

||||

primaryClass={cs.CV}

|

||||

}

|

||||

```

|

||||

|

||||

We extend our thanks to the COCO Consortium for creating and maintaining this invaluable resource for the computer vision community. For more information about the COCO dataset and its creators, visit the [COCO dataset website](https://cocodataset.org/#home).

|

||||

@ -28,7 +28,7 @@ A YAML (Yet Another Markup Language) file is used to define the dataset configur

|

||||

|

||||

## Usage

|

||||

|

||||

To train a YOLOv8n model on the COCO8-Seg dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

To train a YOLOv8n-seg model on the COCO8-Seg dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Train Example"

|

||||

|

||||

@ -38,7 +38,7 @@ To train a YOLOv8n model on the COCO8-Seg dataset for 100 epochs with an image s

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a model

|

||||

model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

|

||||

model = YOLO('yolov8n-seg.pt') # load a pretrained model (recommended for training)

|

||||

|

||||

# Train the model

|

||||

model.train(data='coco8-seg.yaml', epochs=100, imgsz=640)

|

||||

|

||||

Reference in New Issue

Block a user