Docs updates for HUB, YOLOv4, YOLOv7, NAS (#3174)

Co-authored-by: Glenn Jocher <glenn.jocher@ultralytics.com> Co-authored-by: pre-commit-ci[bot] <66853113+pre-commit-ci[bot]@users.noreply.github.com>

---
comments: true
description: Learn about the supported models and architectures, such as YOLOv3, YOLOv5, and YOLOv8, and how to contribute your own model to Ultralytics.
keywords: Ultralytics YOLO, YOLOv3, YOLOv4, YOLOv5, YOLOv6, YOLOv7, YOLOv8, SAM, YOLO-NAS, RT-DETR, object detection, instance segmentation, detection transformers, real-time detection, computer vision, CLI, Python
---

# Models

Ultralytics supports many models and architectures with more to come in the future.

In this documentation, we provide information on the following models:

1. [YOLOv3](./yolov3.md): The third iteration of the YOLO model family, originally by Joseph Redmon, known for its efficient real-time object detection capabilities.
2. [YOLOv4](./yolov4.md): A darknet-native update to YOLOv3, released by Alexey Bochkovskiy in 2020.
3. [YOLOv5](./yolov5.md): An improved version of the YOLO architecture by Ultralytics, offering better performance and speed trade-offs compared to previous versions.
4. [YOLOv6](./yolov6.md): Released by [Meituan](https://about.meituan.com/) in 2022 and used in many of the company's autonomous delivery robots.
5. [YOLOv7](./yolov7.md): Updated YOLO models released in 2022 by the authors of YOLOv4.
6. [YOLOv8](./yolov8.md): The latest version of the YOLO family, featuring enhanced capabilities such as instance segmentation, pose/keypoints estimation, and classification.
7. [Segment Anything Model (SAM)](./sam.md): Meta's Segment Anything Model (SAM), a promptable image segmentation model.
8. [YOLO-NAS](./yolo-nas.md): Deci AI's YOLO models designed with Neural Architecture Search (NAS).
9. [Realtime Detection Transformers (RT-DETR)](./rtdetr.md): Baidu's PaddlePaddle Realtime Detection Transformer (RT-DETR) models.

You can use these models directly in the Command Line Interface (CLI) or in a Python environment. Below are examples of how to use the models with CLI and Python:

```python
model.info()  # display model information
model.train(data="coco128.yaml", epochs=100)  # train the model
```

For more details on each model, their supported tasks, modes, and performance, please visit their respective documentation pages linked above.

---
comments: true
description: Dive into Baidu's RT-DETR, a revolutionary real-time object detection model built on the foundation of Vision Transformers (ViT). Learn how to use pre-trained PaddlePaddle RT-DETR models with the Ultralytics Python API for various tasks.
keywords: RT-DETR, Transformer, ViT, Vision Transformers, Baidu RT-DETR, PaddlePaddle, Paddle Paddle RT-DETR, real-time object detection, Vision Transformers-based object detection, pre-trained PaddlePaddle RT-DETR models, Baidu's RT-DETR usage, Ultralytics Python API, object detector
---

# Baidu's RT-DETR: A Vision Transformer-Based Real-Time Object Detector

---
comments: true
description: Discover the Segment Anything Model (SAM), a revolutionary promptable image segmentation model, and delve into the details of its advanced architecture and the large-scale SA-1B dataset.
keywords: Segment Anything, Segment Anything Model, SAM, Meta SAM, image segmentation, promptable segmentation, zero-shot performance, SA-1B dataset, advanced architecture, auto-annotation, Ultralytics, pre-trained models, SAM base, SAM large, instance segmentation, computer vision, AI, artificial intelligence, machine learning, data annotation, segmentation masks, detection model, YOLO detection model, bibtex, Meta AI
---

# Segment Anything Model (SAM)

We would like to express our gratitude to Meta AI for creating and maintaining this valuable resource for the computer vision community.

*keywords: Segment Anything, Segment Anything Model, SAM, Meta SAM, image segmentation, promptable segmentation, zero-shot performance, SA-1B dataset, advanced architecture, auto-annotation, Ultralytics, pre-trained models, SAM base, SAM large, instance segmentation, computer vision, AI, artificial intelligence, machine learning, data annotation, segmentation masks, detection model, YOLO detection model, bibtex, Meta AI.*

---
comments: true
description: Dive into YOLO-NAS, Deci's next-generation object detection model, offering breakthroughs in speed and accuracy. Learn how to utilize pre-trained models using the Ultralytics Python API for various tasks.
keywords: YOLO-NAS, Deci AI, Ultralytics, object detection, deep learning, neural architecture search, Python API, pre-trained models, quantization
---

# YOLO-NAS

---
comments: true
description: YOLOv3, YOLOv3-Ultralytics and YOLOv3u by Ultralytics explained. Learn the evolution of these models and their specifications.
keywords: YOLOv3, Ultralytics YOLOv3, YOLO v3, YOLOv3 models, object detection, models, machine learning, AI, image recognition, object recognition
---

# YOLOv3, YOLOv3-Ultralytics, and YOLOv3u

docs/models/yolov4.md

---
comments: true
description: Explore YOLOv4, a state-of-the-art, real-time object detector. Learn about its architecture, features, and performance.
keywords: YOLOv4, object detection, real-time, CNN, GPU, Ultralytics, documentation, YOLOv4 architecture, YOLOv4 features, YOLOv4 performance
---

# YOLOv4: High-Speed and Precise Object Detection

Welcome to the Ultralytics documentation page for YOLOv4, a state-of-the-art, real-time object detector launched in 2020 by Alexey Bochkovskiy at [https://github.com/AlexeyAB/darknet](https://github.com/AlexeyAB/darknet). YOLOv4 is designed to provide the optimal balance between speed and accuracy, making it an excellent choice for many applications.

|

||||

|

||||

|

||||

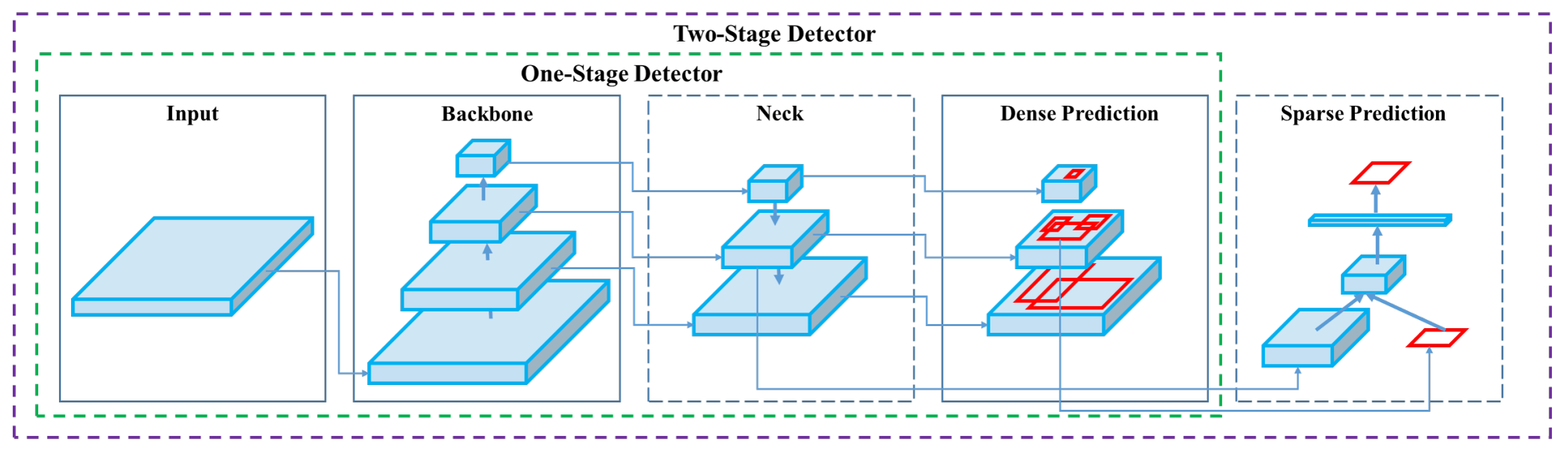

**YOLOv4 architecture diagram**. Showcasing the intricate network design of YOLOv4, including the backbone, neck, and head components, and their interconnected layers for optimal real-time object detection.

## Introduction

YOLOv4 stands for You Only Look Once version 4. It is a real-time object detection model developed to address the limitations of previous YOLO versions such as [YOLOv3](./yolov3.md) and other object detection models. Most convolutional neural network (CNN) based object detectors are largely applicable only to recommendation systems; YOLOv4 is intended to be fast and accurate enough for standalone process management and human input reduction as well. Because it runs on conventional graphics processing units (GPUs), it can be used widely at an affordable price: it is designed to work in real time on a conventional GPU and requires only one such GPU for training.

## Architecture

YOLOv4 makes use of several innovative features that work together to optimize its performance. These include Weighted-Residual-Connections (WRC), Cross-Stage-Partial-connections (CSP), Cross mini-Batch Normalization (CmBN), Self-adversarial-training (SAT), Mish-activation, Mosaic data augmentation, DropBlock regularization, and CIoU loss. These features are combined to achieve state-of-the-art results.
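
Two of the components listed above, the Mish activation and the CIoU loss, have compact closed forms. The sketch below implements Mish as $x \cdot \tanh(\mathrm{softplus}(x))$ and the standard Complete-IoU loss, $1 - \mathrm{IoU} + \rho^2/c^2 + \alpha v$, for two axis-aligned boxes in `(x1, y1, x2, y2)` format. It is a plain-Python illustration under those assumptions, not the Darknet implementation, and the function names `mish` and `ciou_loss` are hypothetical.

```python
import math


def mish(x: float) -> float:
    """Mish activation: x * tanh(softplus(x)), where softplus(x) = ln(1 + e^x)."""
    # exp(x) overflows for large x; softplus(x) is numerically equal to x there.
    softplus = x if x > 20 else math.log1p(math.exp(x))
    return x * math.tanh(softplus)


def ciou_loss(box, target, eps=1e-9):
    """Complete-IoU loss between a predicted box and a target box, both (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    tx1, ty1, tx2, ty2 = target
    w, h = x2 - x1, y2 - y1
    tw, th = tx2 - tx1, ty2 - ty1

    # Overlap term: intersection over union.
    iw = max(0.0, min(x2, tx2) - max(x1, tx1))
    ih = max(0.0, min(y2, ty2) - max(y1, ty1))
    inter = iw * ih
    union = w * h + tw * th - inter
    iou = inter / (union + eps)

    # Distance term: squared distance between box centres (rho^2) ...
    rho2 = ((x1 + x2 - tx1 - tx2) ** 2 + (y1 + y2 - ty1 - ty2) ** 2) / 4
    # ... divided by the squared diagonal of the smallest enclosing box (c^2).
    cw = max(x2, tx2) - min(x1, tx1)
    ch = max(y2, ty2) - min(y1, ty1)
    c2 = cw ** 2 + ch ** 2 + eps

    # Aspect-ratio term v and its trade-off weight alpha.
    v = (4 / math.pi ** 2) * (math.atan(tw / (th + eps)) - math.atan(w / (h + eps))) ** 2
    alpha = v / ((1 - iou) + v + eps)

    return 1 - iou + rho2 / c2 + alpha * v
```

Unlike plain IoU, the distance term keeps the loss informative for non-overlapping boxes: for `(0, 0, 1, 1)` and `(2, 0, 3, 1)` the IoU is zero, but the centre-distance penalty still distinguishes near misses from far ones.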

A typical object detector is composed of several parts: the input, the backbone, the neck, and the head. The backbone, which in YOLOv4 is pre-trained on ImageNet, extracts features from the input image; in object detectors generally, it could come from models such as VGG, ResNet, ResNeXt, or DenseNet. The neck collects feature maps from different stages and usually includes several bottom-up and top-down paths. The head uses these features to predict the classes and bounding boxes of objects, producing the final detections.

## Bag of Freebies

YOLOv4 also makes use of methods known as "bag of freebies," which are techniques that improve the accuracy of the model during training without increasing the cost of inference. Data augmentation is a common bag of freebies technique used in object detection, which increases the variability of the input images to improve the robustness of the model. Some examples of data augmentation include photometric distortions (adjusting the brightness, contrast, hue, saturation, and noise of an image) and geometric distortions (adding random scaling, cropping, flipping, and rotating). These techniques help the model to generalize better to different types of images.
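
The two families of distortions differ in one practical respect: photometric changes alter only pixel values and leave bounding boxes untouched, whereas geometric changes must transform the boxes along with the pixels. The plain-Python sketch below illustrates this with a horizontal flip and a brightness change on a grayscale image stored as nested lists. The function names, the 50% flip probability, and the 0.7 to 1.3 brightness range are illustrative assumptions, not YOLOv4's actual settings.

```python
import random


def hflip(image, boxes):
    """Geometric distortion: mirror the image left-to-right and update its boxes.

    `image` is a list of rows of grayscale values; `boxes` are (x1, y1, x2, y2)
    tuples in pixel coordinates.
    """
    width = len(image[0])
    flipped = [list(reversed(row)) for row in image]
    # An x-extent [x1, x2] maps to [width - x2, width - x1]; y is unchanged.
    new_boxes = [(width - x2, y1, width - x1, y2) for x1, y1, x2, y2 in boxes]
    return flipped, new_boxes


def adjust_brightness(image, factor):
    """Photometric distortion: scale intensities and clamp them to [0, 255].

    Boxes need no update because only pixel values change.
    """
    return [[min(255.0, max(0.0, p * factor)) for p in row] for row in image]


def augment(image, boxes, rng=random):
    """Randomly apply a flip and a brightness change; used at training time only."""
    if rng.random() < 0.5:
        image, boxes = hflip(image, boxes)
    image = adjust_brightness(image, rng.uniform(0.7, 1.3))
    return image, boxes
```

Because `augment` runs only while training, the model sees more varied inputs at no extra inference cost, which is what makes such techniques a "bag of freebies".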

## Features and Performance

YOLOv4 is designed for optimal speed and accuracy in object detection. Its architecture uses CSPDarknet53 as the backbone, PANet (together with an SPP block) as the neck, and the YOLOv3 head for detection. This design lets YOLOv4 run fast enough for real-time applications while remaining accurate: the authors report 43.5% AP (65.7% AP50) on the MS COCO dataset at roughly 65 FPS on a Tesla V100 GPU.
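
The YOLOv3-style head predicts boxes at three scales, and the size of its output follows from simple arithmetic. As a worked example, the sketch below assumes a 608×608 input, output strides of 8, 16, and 32, three anchors per grid cell, and the 80 COCO classes; these are common YOLOv3/YOLOv4 configuration values assumed here rather than stated above. Each anchor predicts 4 box coordinates, 1 objectness score, and 80 class scores, so every scale outputs 3 × (4 + 1 + 80) = 255 channels. The helper `head_shapes` is hypothetical.

```python
def head_shapes(img_size=608, strides=(8, 16, 32), anchors_per_cell=3, num_classes=80):
    """Return per-scale output shapes and the total number of predicted boxes."""
    # Per anchor: 4 box coordinates + 1 objectness score + class scores.
    channels = anchors_per_cell * (4 + 1 + num_classes)
    shapes = []
    total_boxes = 0
    for stride in strides:
        grid = img_size // stride  # grid cells along each side at this scale
        shapes.append((grid, grid, channels))
        total_boxes += grid * grid * anchors_per_cell
    return shapes, total_boxes


shapes, total = head_shapes()
for shape in shapes:
    print(shape)  # (76, 76, 255), then (38, 38, 255), then (19, 19, 255)
print(total)      # 22743 candidate boxes before non-maximum suppression
```

The stride-8 grid handles small objects and the stride-32 grid large ones, which is why the neck must supply feature maps from several backbone stages.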

## Usage Examples

At the time of writing, Ultralytics does not support YOLOv4 models. Users interested in YOLOv4 should therefore refer directly to the YOLOv4 GitHub repository for installation and usage instructions.

Here is a brief overview of the typical steps you might take to use YOLOv4:

1. Visit the YOLOv4 GitHub repository: [https://github.com/AlexeyAB/darknet](https://github.com/AlexeyAB/darknet).

2. Follow the instructions provided in the README file for installation. This typically involves cloning the repository, installing necessary dependencies, and setting up any necessary environment variables.

3. Once installation is complete, you can train and use the model as per the usage instructions provided in the repository. This usually involves preparing your dataset, configuring the model parameters, training the model, and then using the trained model to perform object detection.

Note that the exact steps may vary depending on your use case and the current state of the YOLOv4 repository, so we strongly recommend following the instructions in the YOLOv4 GitHub repository directly.

We regret any inconvenience this may cause and will strive to update this document with usage examples for Ultralytics once support for YOLOv4 is implemented.

## Conclusion

YOLOv4 is a powerful and efficient object detection model that strikes a balance between speed and accuracy. Its use of unique features and bag of freebies techniques during training allows it to perform excellently in real-time object detection tasks. YOLOv4 can be trained and used by anyone with a conventional GPU, making it accessible and practical for a wide range of applications.

## Citations and Acknowledgements

We would like to acknowledge the YOLOv4 authors for their significant contributions in the field of real-time object detection:

```bibtex
@misc{bochkovskiy2020yolov4,
      title={YOLOv4: Optimal Speed and Accuracy of Object Detection},
      author={Alexey Bochkovskiy and Chien-Yao Wang and Hong-Yuan Mark Liao},
      year={2020},
      eprint={2004.10934},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}
```

The original YOLOv4 paper can be found on [arXiv](https://arxiv.org/pdf/2004.10934.pdf). The authors have made their work publicly available, and the codebase can be accessed on [GitHub](https://github.com/AlexeyAB/darknet). We appreciate their efforts in advancing the field and making their work accessible to the broader community.

---
comments: true
description: YOLOv5 by Ultralytics explained. Discover the evolution of this model and its key specifications. Experience faster and more accurate object detection.
keywords: YOLOv5, Ultralytics YOLOv5, YOLO v5, YOLOv5 models, YOLO, object detection, model, neural network, accuracy, speed, pre-trained weights, inference, validation, training
---

# YOLOv5

---
comments: true
description: Discover Meituan YOLOv6, a robust real-time object detector. Learn how to utilize pre-trained models with Ultralytics Python API for a variety of tasks.
keywords: Meituan, YOLOv6, object detection, Bi-directional Concatenation (BiC), anchor-aided training (AAT), pre-trained models, high-resolution input, real-time, ultra-fast computations
---

# Meituan YOLOv6

}

```

The original YOLOv6 paper can be found on [arXiv](https://arxiv.org/abs/2301.05586). The authors have made their work publicly available, and the codebase can be accessed on [GitHub](https://github.com/meituan/YOLOv6). We appreciate their efforts in advancing the field and making their work accessible to the broader community.

docs/models/yolov7.md

---
comments: true
description: Discover YOLOv7, a cutting-edge real-time object detector that surpasses competitors in speed and accuracy. Explore its unique trainable bag-of-freebies.
keywords: object detection, real-time object detector, YOLOv7, MS COCO, computer vision, neural networks, AI, deep learning, deep neural networks, real-time, GPU, GitHub, arXiv
---

# YOLOv7: Trainable Bag-of-Freebies

According to its authors, YOLOv7 is a state-of-the-art real-time object detector that surpasses all known object detectors in both speed and accuracy in the range from 5 FPS to 160 FPS. It has the highest accuracy (56.8% AP) among all known real-time object detectors running at 30 FPS or higher on a V100 GPU. YOLOv7 also outperforms other object detectors such as YOLOR, YOLOX, Scaled-YOLOv4, and YOLOv5 in speed and accuracy. The model is trained on the MS COCO dataset from scratch, without using any other datasets or pre-trained weights. Source code for YOLOv7 is available on [GitHub](https://github.com/WongKinYiu/yolov7).

**Comparison of state-of-the-art object detectors.** According to Table 2 of the YOLOv7 paper, the proposed method has the best overall speed-accuracy trade-off. Compared with YOLOv5-N (r6.1), YOLOv7-tiny-SiLU is 127 fps faster and 10.7% more accurate on AP. YOLOv7 reaches 51.4% AP at 161 fps, while PPYOLOE-L reaches the same AP at only 78 fps, and YOLOv7 uses 41% fewer parameters than PPYOLOE-L. Compared with YOLOv5-L (r6.1) at 99 fps, YOLOv7-X at 114 fps improves AP by 3.9%. Compared with YOLOv5-X (r6.1) of similar scale, YOLOv7-X runs 31 fps faster, uses 22% fewer parameters and 8% less computation, and improves AP by 2.2% ([Source](https://arxiv.org/pdf/2207.02696.pdf)).

## Overview

Real-time object detection is an important component of many computer vision systems, including multi-object tracking, autonomous driving, robotics, and medical image analysis. In recent years, real-time object detection research has focused on designing efficient architectures and improving inference speed on CPUs, GPUs, and neural processing units (NPUs). YOLOv7 supports both mobile GPUs and GPU devices, from the edge to the cloud.

Unlike traditional real-time object detectors that focus on architecture optimization, YOLOv7 also focuses on optimizing the training process. This includes modules and optimization methods that improve object detection accuracy without increasing inference cost, a concept known as the "trainable bag-of-freebies".

## Key Features

YOLOv7 introduces several key features:

1. **Model Re-parameterization**: YOLOv7 proposes a planned re-parameterized model, using the concept of gradient propagation paths to determine which re-parameterization strategies suit the layers of different networks.

2. **Dynamic Label Assignment**: The training of the model with multiple output layers presents a new issue: "How to assign dynamic targets for the outputs of different branches?" To solve this problem, YOLOv7 introduces a new label assignment method called coarse-to-fine lead guided label assignment.

3. **Extended and Compound Scaling**: YOLOv7 proposes "extend" and "compound scaling" methods for the real-time object detector that can effectively utilize parameters and computation.

4. **Efficiency**: According to the authors, the proposed methods reduce the parameters of state-of-the-art real-time object detectors by about 40% and their computation by about 50%, while achieving faster inference and higher detection accuracy.
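
Model re-parameterization trains a network with several parallel branches and, before deployment, folds them into a single layer that produces identical outputs, so the extra branches cost nothing at inference. The sketch below illustrates the generic idea, in the style of RepVGG-type blocks, on a single-channel image: a 3×3 convolution, a 1×1 convolution, and an identity shortcut are merged into one 3×3 kernel, because the 1×1 kernel and the identity both act only on the centre tap. It is a simplified illustration under those assumptions, not YOLOv7's planned re-parameterization, which additionally decides per layer which branches are appropriate; batch-norm folding and multiple channels are omitted, and all function names are hypothetical.

```python
def conv2d(x, k, bias=0.0):
    """Zero-padded 'same' 2D cross-correlation of a single-channel image with an odd-sized kernel."""
    n, m = len(x), len(x[0])
    r = len(k) // 2
    out = [[bias] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            for di in range(-r, r + 1):
                for dj in range(-r, r + 1):
                    ii, jj = i + di, j + dj
                    if 0 <= ii < n and 0 <= jj < m:
                        out[i][j] += k[di + r][dj + r] * x[ii][jj]
    return out


def multi_branch(x, k3, b3, k1, b1):
    """Training-time block: 3x3 branch + 1x1 branch (scalar weight k1) + identity, summed."""
    y3 = conv2d(x, k3, b3)
    return [[y3[i][j] + k1 * x[i][j] + b1 + x[i][j] for j in range(len(x[0]))]
            for i in range(len(x))]


def fuse_branches(k3, b3, k1, b1):
    """Deployment-time fold: merge the three branches into one 3x3 kernel and bias."""
    fused = [row[:] for row in k3]  # copy so the training kernel is not mutated
    fused[1][1] += k1 + 1.0         # the 1x1 weight and the identity both hit the centre tap
    return fused, b3 + b1


x = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
k3 = [[0.1, 0.2, 0.0], [0.0, -0.5, 0.3], [0.2, 0.0, 0.1]]
train_out = multi_branch(x, k3, 0.5, 2.0, -0.25)
kernel, bias = fuse_branches(k3, 0.5, 2.0, -0.25)
deploy_out = conv2d(x, kernel, bias)
print(max(abs(a - b) for ra, rb in zip(train_out, deploy_out) for a, b in zip(ra, rb)))
```

The printed maximum difference is zero up to floating-point rounding: the single fused convolution reproduces the three-branch output exactly while doing a third of the work.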

## Usage Examples

At the time of writing, Ultralytics does not support YOLOv7 models. Users interested in YOLOv7 should therefore refer directly to the YOLOv7 GitHub repository for installation and usage instructions.

Here is a brief overview of the typical steps you might take to use YOLOv7:

1. Visit the YOLOv7 GitHub repository: [https://github.com/WongKinYiu/yolov7](https://github.com/WongKinYiu/yolov7).

2. Follow the instructions provided in the README file for installation. This typically involves cloning the repository, installing necessary dependencies, and setting up any necessary environment variables.

3. Once installation is complete, you can train and use the model as per the usage instructions provided in the repository. This usually involves preparing your dataset, configuring the model parameters, training the model, and then using the trained model to perform object detection.

Note that the exact steps may vary depending on your use case and the current state of the YOLOv7 repository, so we strongly recommend following the instructions in the YOLOv7 GitHub repository directly.

We regret any inconvenience this may cause and will strive to update this document with usage examples for Ultralytics once support for YOLOv7 is implemented.

## Citations and Acknowledgements

We would like to acknowledge the YOLOv7 authors for their significant contributions in the field of real-time object detection:

```bibtex
@article{wang2022yolov7,
  title={{YOLOv7}: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors},
  author={Wang, Chien-Yao and Bochkovskiy, Alexey and Liao, Hong-Yuan Mark},
  journal={arXiv preprint arXiv:2207.02696},
  year={2022}
}
```

The original YOLOv7 paper can be found on [arXiv](https://arxiv.org/pdf/2207.02696.pdf). The authors have made their work publicly available, and the codebase can be accessed on [GitHub](https://github.com/WongKinYiu/yolov7). We appreciate their efforts in advancing the field and making their work accessible to the broader community.

---
comments: true
description: Learn about YOLOv8's pre-trained weights supporting detection, instance segmentation, pose, and classification tasks. Get performance details.
keywords: YOLOv8, real-time object detection, object detection, deep learning, machine learning
---

# YOLOv8