diff --git a/README.md b/README.md

index 4ea5393..ccf8aa3 100644

--- a/README.md

+++ b/README.md

@@ -102,9 +102,11 @@ path = model.export(format="onnx") # export the model to ONNX format

## Models

-All YOLOv8 pretrained models are available here. Detect, Segment and Pose models are pretrained on the [COCO](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco.yaml) dataset, while Classify models are pretrained on the [ImageNet](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/ImageNet.yaml) dataset.

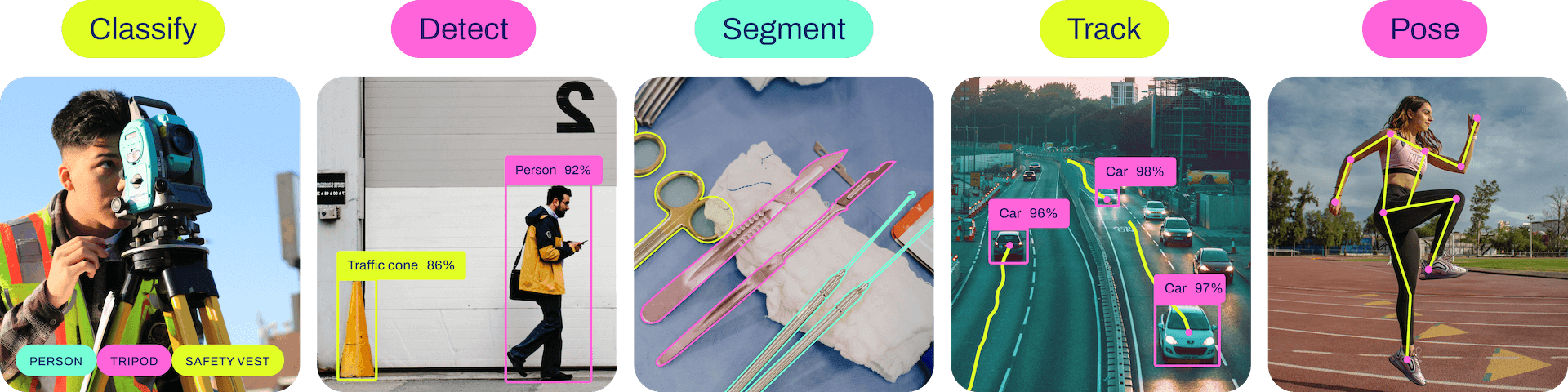

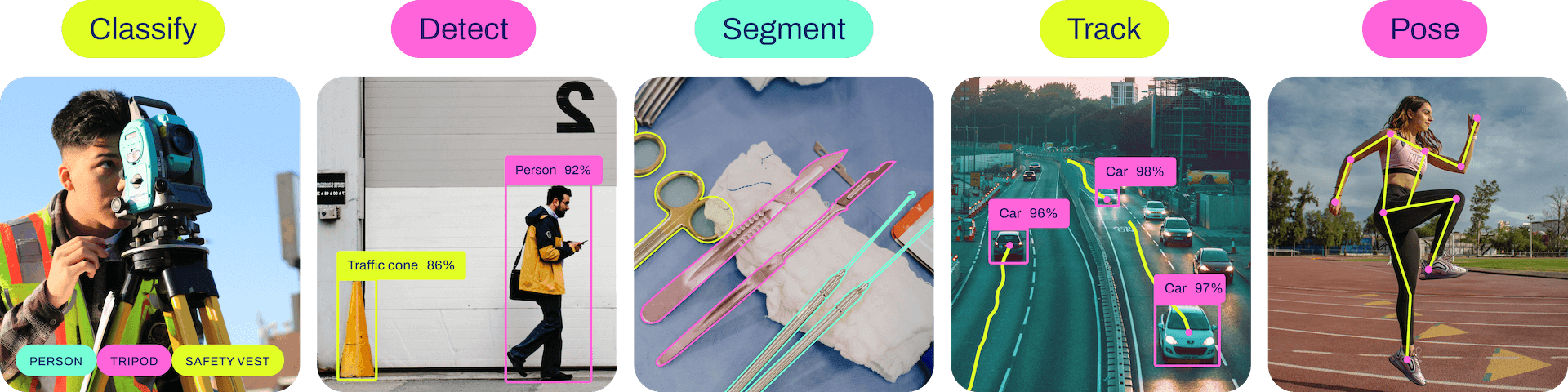

+YOLOv8 [Detect](https://docs.ultralytics.com/tasks/detect), [Segment](https://docs.ultralytics.com/tasks/segment) and [Pose](https://docs.ultralytics.com/tasks/pose) models pretrained on the [COCO](https://docs.ultralytics.com/datasets/detect/coco) dataset are available here, as well as YOLOv8 [Classify](https://docs.ultralytics.com/modes/classify) models pretrained on the [ImageNet](https://docs.ultralytics.com/datasets/classify/imagenet) dataset. [Track](https://docs.ultralytics.com/modes/track) mode is available for all Detect, Segment and Pose models.

-[Models](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/models) download automatically from the latest Ultralytics [release](https://github.com/ultralytics/assets/releases) on first use.

+

+All [Models](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/models) download automatically from the latest Ultralytics [release](https://github.com/ultralytics/assets/releases) on first use.

+

Detection

diff --git a/README.zh-CN.md b/README.zh-CN.md

index b08e4e4..3cde911 100644

--- a/README.zh-CN.md

+++ b/README.zh-CN.md

@@ -102,9 +102,11 @@ success = model.export(format="onnx") # export the model to ONNX format

## Models

-All YOLOv8 pretrained models can be found here. Detection, segmentation and pose models are pretrained on the [COCO](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco.yaml) dataset, while classification models are pretrained on the [ImageNet](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/ImageNet.yaml) dataset.

+YOLOv8 [detection](https://docs.ultralytics.com/tasks/detect), [segmentation](https://docs.ultralytics.com/tasks/segment) and [pose](https://docs.ultralytics.com/tasks/pose) models pretrained on the [COCO](https://docs.ultralytics.com/datasets/detect/coco) dataset can be found here, along with YOLOv8 [classification](https://docs.ultralytics.com/modes/classify) models pretrained on the [ImageNet](https://docs.ultralytics.com/datasets/classify/imagenet) dataset. All detection, segmentation and pose models support [tracking](https://docs.ultralytics.com/modes/track) mode.

-On first use, [models](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/models) are automatically downloaded from the latest Ultralytics [release](https://github.com/ultralytics/assets/releases).

+

+All [models](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/models) are automatically downloaded from the latest Ultralytics [release](https://github.com/ultralytics/assets/releases) on first use.

+

Detection

diff --git a/docs/models/index.md b/docs/models/index.md

index 3eb6d4e..051bfb5 100644

--- a/docs/models/index.md

+++ b/docs/models/index.md

@@ -12,9 +12,10 @@ In this documentation, we provide information on four major models:

1. [YOLOv3](./yolov3.md): The third iteration of the YOLO model family, known for its efficient real-time object detection capabilities.

2. [YOLOv5](./yolov5.md): An improved version of the YOLO architecture, offering better performance and speed tradeoffs compared to previous versions.

3. [YOLOv6](./yolov6.md): Released by [Meituan](https://about.meituan.com/) in 2022 and used in many of the company's autonomous delivery robots.

-3. [YOLOv8](./yolov8.md): The latest version of the YOLO family, featuring enhanced capabilities such as instance segmentation, pose/keypoints estimation, and classification.

-4. [Segment Anything Model (SAM)](./sam.md): Meta's Segment Anything Model (SAM).

-5. [Realtime Detection Transformers (RT-DETR)](./rtdetr.md): Baidu's RT-DETR model.

+4. [YOLOv8](./yolov8.md): The latest version of the YOLO family, featuring enhanced capabilities such as instance segmentation, pose/keypoints estimation, and classification.

+5. [Segment Anything Model (SAM)](./sam.md): Meta's Segment Anything Model (SAM).

+6. [YOLO-NAS](./yolo-nas.md): Deci AI's YOLO Neural Architecture Search (NAS) models.

+7. [Realtime Detection Transformers (RT-DETR)](./rtdetr.md): Baidu's PaddlePaddle Realtime Detection Transformer (RT-DETR) models.

You can use these models directly in the Command Line Interface (CLI) or in a Python environment. Below are examples of how to use the models with CLI and Python:

@@ -35,4 +36,4 @@ model.info() # display model information

model.train(data="coco128.yaml", epochs=100) # train the model

```

-For more details on each model, their supported tasks, modes, and performance, please visit their respective documentation pages linked above.

\ No newline at end of file

+For more details on each model, their supported tasks, modes, and performance, please visit their respective documentation pages linked above.

diff --git a/docs/models/rtdetr.md b/docs/models/rtdetr.md

index aa1ea63..a38acbb 100644

--- a/docs/models/rtdetr.md

+++ b/docs/models/rtdetr.md

@@ -1,29 +1,26 @@

---

comments: true

-description: Explore RT-DETR, a high-performance real-time object detector. Learn how to use pre-trained models with Ultralytics Python API for various tasks.

+description: Dive into Baidu's RT-DETR, a revolutionary real-time object detection model built on the foundation of Vision Transformers (ViT). Learn how to use pre-trained PaddlePaddle RT-DETR models with the Ultralytics Python API for various tasks.

---

-# RT-DETR

+# Baidu's RT-DETR: A Vision Transformer-Based Real-Time Object Detector

## Overview

-Real-Time Detection Transformer (RT-DETR) is an end-to-end object detector that provides real-time performance while maintaining high accuracy. It efficiently processes multi-scale features by decoupling intra-scale interaction and cross-scale fusion, and supports flexible adjustment of inference speed using different decoder layers without retraining. RT-DETR outperforms many real-time object detectors on accelerated backends like CUDA with TensorRT.

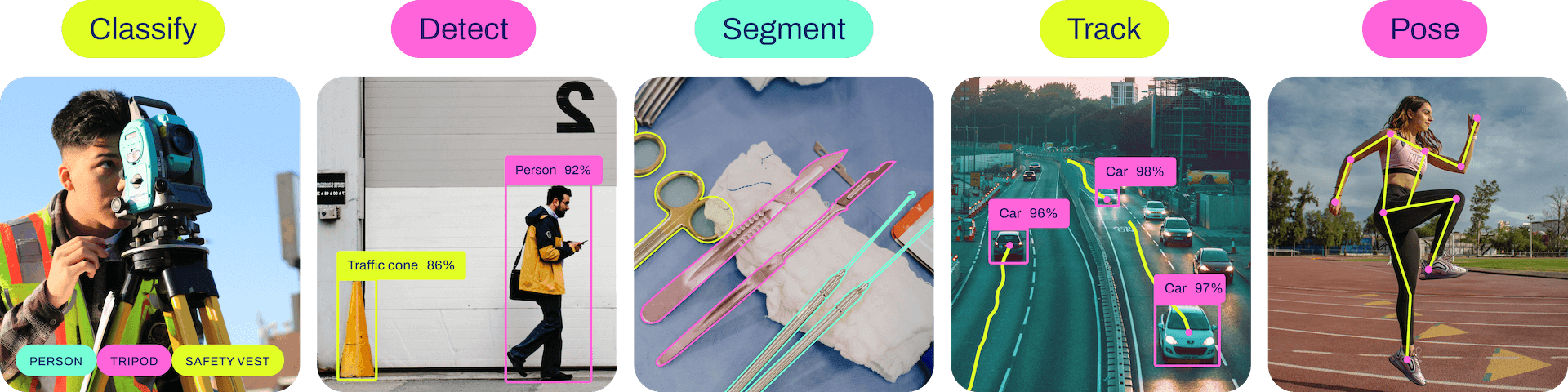

+Real-Time Detection Transformer (RT-DETR), developed by Baidu, is a cutting-edge end-to-end object detector that provides real-time performance while maintaining high accuracy. It leverages the power of Vision Transformers (ViT) to efficiently process multiscale features by decoupling intra-scale interaction and cross-scale fusion. RT-DETR is highly adaptable, supporting flexible adjustment of inference speed using different decoder layers without retraining. The model excels on accelerated backends like CUDA with TensorRT, outperforming many other real-time object detectors.

-**Overview of RT-DETR.** Model architecture diagram showing the last three stages of the backbone {S3, S4, S5} as the input

-to the encoder. The efficient hybrid encoder transforms multiscale features into a sequence of image features through intrascale feature interaction (AIFI) and cross-scale feature-fusion module (CCFM). The IoU-aware query selection is employed

-to select a fixed number of image features to serve as initial object queries for the decoder. Finally, the decoder with auxiliary

-prediction heads iteratively optimizes object queries to generate boxes and confidence scores ([source](https://arxiv.org/pdf/2304.08069.pdf)).

+**Overview of Baidu's RT-DETR.** The RT-DETR model architecture diagram shows the last three stages of the backbone {S3, S4, S5} as the input to the encoder. The efficient hybrid encoder transforms multiscale features into a sequence of image features through intra-scale feature interaction (AIFI) and a cross-scale feature-fusion module (CCFM). IoU-aware query selection then picks a fixed number of image features to serve as initial object queries for the decoder. Finally, the decoder, with its auxiliary prediction heads, iteratively refines the object queries to generate boxes and confidence scores ([source](https://arxiv.org/pdf/2304.08069.pdf)).
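The IoU-aware query selection step in the caption can be sketched in plain Python. This is a simplified illustration under stated assumptions, not RT-DETR or Ultralytics code: each encoder feature is assumed to carry a confidence score, `box_iou` and `select_initial_queries` are hypothetical helpers, and the "IoU-aware" part is reduced to the training target that pushes each feature's score toward the IoU between its predicted box and its matched ground-truth box. At inference, the top-scoring features simply become the initial queries.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) corners


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def select_initial_queries(scores: Sequence[float], features: Sequence, k: int) -> List:
    """Hypothetical helper: keep the k highest-scoring encoder features as decoder queries.

    Assumption: the scores come from a head trained so that each score tracks
    the IoU of the feature's predicted box with its ground-truth box (see box_iou).
    """
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return [features[i] for i in ranked[:k]]


# Training-time target for one feature: its score should approach this IoU.
target = box_iou((0, 0, 2, 2), (1, 1, 3, 3))  # 1 / 7
queries = select_initial_queries([0.1, 0.9, 0.5, 0.7], ["f0", "f1", "f2", "f3"], k=2)
print(round(target, 4), queries)  # 0.1429 ['f1', 'f3']
```

The design point the sketch tries to capture is that selection itself is a plain top-K; the IoU awareness lives in how the scores are trained, so well-localized features are the ones that rank highly.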

### Key Features

-- **Efficient Hybrid Encoder:** RT-DETR uses an efficient hybrid encoder that processes multi-scale features by decoupling intra-scale interaction and cross-scale fusion. This design reduces computational costs and allows for real-time object detection.

-- **IoU-aware Query Selection:** RT-DETR improves object query initialization by utilizing IoU-aware query selection. This allows the model to focus on the most relevant objects in the scene.

-- **Adaptable Inference Speed:** RT-DETR supports flexible adjustments of inference speed by using different decoder layers without the need for retraining. This adaptability facilitates practical application in various real-time object detection scenarios.

+- **Efficient Hybrid Encoder:** Baidu's RT-DETR uses an efficient hybrid encoder that processes multi-scale features by decoupling intra-scale interaction and cross-scale fusion. This Vision Transformer-based design reduces computational costs and allows for real-time object detection.

+- **IoU-aware Query Selection:** Baidu's RT-DETR improves object query initialization by utilizing IoU-aware query selection. This allows the model to focus on the most relevant objects in the scene, enhancing the detection accuracy.

+- **Adaptable Inference Speed:** Baidu's RT-DETR supports flexible adjustment of inference speed by using different decoder layers without the need for retraining. This adaptability facilitates practical application in various real-time object detection scenarios.
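Because the decoder has an auxiliary prediction head at each layer, inference can stop after fewer layers and still produce predictions, with no weights changed. The sketch below shows only that control flow, using plain callables; `decode`, `toy_layer`, and `toy_head` are hypothetical stand-ins, not RT-DETR or Ultralytics code, and the six-layer setting is just a toy value.

```python
from typing import Callable, List, Sequence

Queries = List[float]
Step = Callable[[Queries], Queries]


def decode(queries: Queries, layers: Sequence[Step], heads: Sequence[Step], num_layers: int) -> Queries:
    """Run only the first `num_layers` decoder layers, then the auxiliary head of the last one used.

    Hypothetical sketch: fewer layers means less compute per image, and no weights change.
    """
    if not 1 <= num_layers <= len(layers):
        raise ValueError("num_layers must be between 1 and len(layers)")
    for layer in layers[:num_layers]:
        queries = layer(queries)  # one iterative refinement step
    return heads[num_layers - 1](queries)  # this layer's auxiliary prediction head


def toy_layer(q: Queries) -> Queries:
    """Stand-in refinement step: adds 1 to every query value."""
    return [v + 1 for v in q]


def toy_head(q: Queries) -> Queries:
    """Stand-in prediction head: returns the queries unchanged."""
    return list(q)


layers = [toy_layer] * 6
heads = [toy_head] * 6
print(decode([0.0, 0.5], layers, heads, num_layers=6))  # [6.0, 6.5]
print(decode([0.0, 0.5], layers, heads, num_layers=3))  # [3.0, 3.5]
```

Here `num_layers` is purely an inference-time argument, which is the property the feature describes: the speed/accuracy trade-off is chosen at deployment rather than baked in during training.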

## Pre-trained Models

-Ultralytics RT-DETR provides several pre-trained models with different scales:

+The Ultralytics Python API provides pre-trained PaddlePaddle RT-DETR models with different scales:

- RT-DETR-L: 53.0% AP on COCO val2017, 114 FPS on T4 GPU

- RT-DETR-X: 54.8% AP on COCO val2017, 74 FPS on T4 GPU
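To compare these throughput figures with latencies quoted in milliseconds, frames per second convert to average time per frame as 1000 / FPS. This is plain arithmetic on the two figures above; `fps_to_ms` is a hypothetical helper, and the conversion assumes nothing about the benchmark settings behind the FPS numbers.

```python
def fps_to_ms(fps: float) -> float:
    """Average time per frame, in milliseconds, for a given throughput."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Figures from the list above (COCO val2017 AP, FPS on a T4 GPU).
for name, ap, fps in [("RT-DETR-L", 53.0, 114), ("RT-DETR-X", 54.8, 74)]:
    print(f"{name}: {ap}% AP, {fps_to_ms(fps):.2f} ms per frame")
# RT-DETR-L: 53.0% AP, 8.77 ms per frame
# RT-DETR-X: 54.8% AP, 13.51 ms per frame
```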

@@ -57,7 +54,7 @@ model.predict("path/to/image.jpg") # predict

# Citations and Acknowledgements

-If you use RT-DETR in your research or development work, please cite the [original paper](https://arxiv.org/abs/2304.08069):

+If you use Baidu's RT-DETR in your research or development work, please cite the [original paper](https://arxiv.org/abs/2304.08069):

```bibtex

@misc{lv2023detrs,

@@ -70,4 +67,6 @@ If you use RT-DETR in your research or development work, please cite the [origin

}

```

-We would like to acknowledge Baidu's [PaddlePaddle](https://github.com/PaddlePaddle/PaddleDetection) team for creating and maintaining this valuable resource for the computer vision community.

+We would like to acknowledge Baidu and the [PaddlePaddle](https://github.com/PaddlePaddle/PaddleDetection) team for creating and maintaining this valuable resource for the computer vision community. We greatly appreciate their contribution to the field through the development of RT-DETR, a Vision Transformer-based real-time object detector.

+

+*Keywords: RT-DETR, Transformer, ViT, Vision Transformers, Baidu RT-DETR, PaddlePaddle, Paddle Paddle RT-DETR, real-time object detection, Vision Transformers-based object detection, pre-trained PaddlePaddle RT-DETR models, Baidu's RT-DETR usage, Ultralytics Python API*

\ No newline at end of file

diff --git a/docs/models/sam.md b/docs/models/sam.md

index 1378780..12dd115 100644

--- a/docs/models/sam.md

+++ b/docs/models/sam.md

@@ -1,29 +1,33 @@

---

comments: true

-description: Learn about the Segment Anything Model (SAM) and how it provides promptable image segmentation through an advanced architecture and the SA-1B dataset.

+description: Discover the Segment Anything Model (SAM), a revolutionary promptable image segmentation model, and delve into the details of its advanced architecture and the large-scale SA-1B dataset.

---

# Segment Anything Model (SAM)

-## Overview

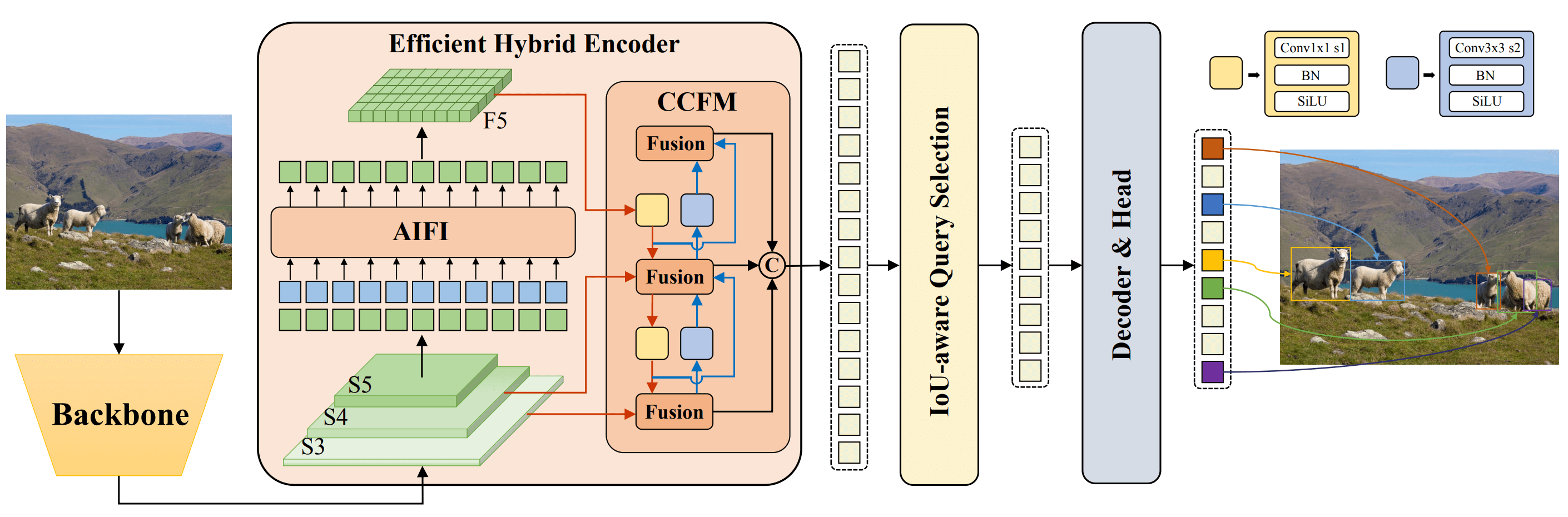

+Welcome to the frontier of image segmentation with the Segment Anything Model, or SAM. This revolutionary model has changed the game by introducing promptable image segmentation with real-time performance, setting new standards in the field.

-The Segment Anything Model (SAM) is a groundbreaking image segmentation model that enables promptable segmentation with real-time performance. It forms the foundation for the Segment Anything project, which introduces a new task, model, and dataset for image segmentation. SAM is designed to be promptable, allowing it to transfer zero-shot to new image distributions and tasks. The model is trained on the [SA-1B dataset](https://ai.facebook.com/datasets/segment-anything/), which contains over 1 billion masks on 11 million licensed and privacy-respecting images. SAM has demonstrated impressive zero-shot performance, often surpassing prior fully supervised results.

+## Introduction to SAM: The Segment Anything Model

+

+The Segment Anything Model, or SAM, is a cutting-edge image segmentation model that allows for promptable segmentation, providing unparalleled versatility in image analysis tasks. SAM forms the heart of the Segment Anything initiative, a groundbreaking project that introduces a novel model, task, and dataset for image segmentation.

+

+SAM's advanced design allows it to adapt to new image distributions and tasks without prior knowledge, a feature known as zero-shot transfer. Trained on the expansive [SA-1B dataset](https://ai.facebook.com/datasets/segment-anything/), which contains more than 1 billion masks spread over 11 million carefully curated images, SAM has displayed impressive zero-shot performance, surpassing previous fully supervised results in many cases.

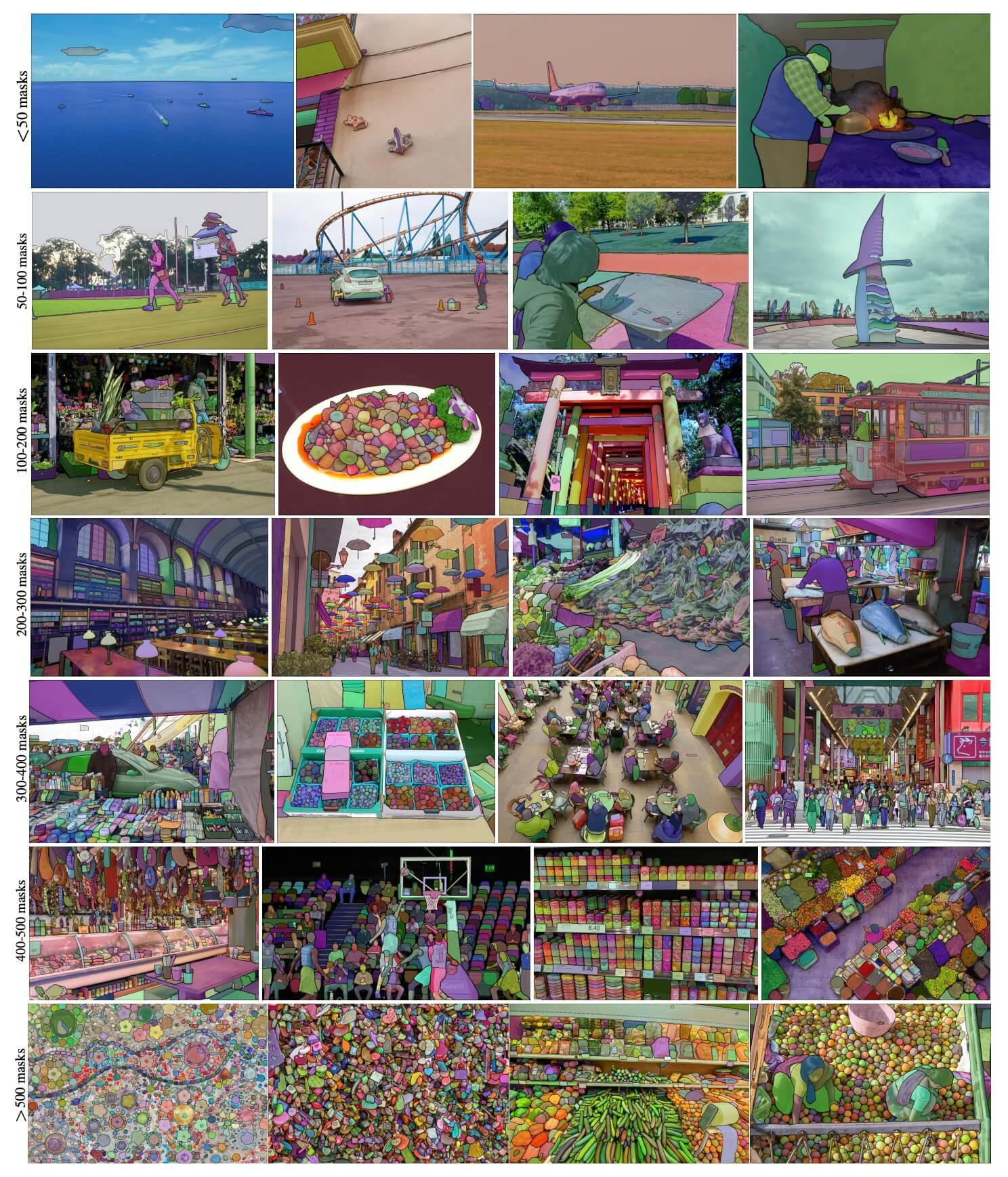

Example images with overlaid masks from the newly introduced SA-1B dataset. SA-1B contains 11M diverse, high-resolution, licensed, and privacy-protecting images and 1.1B high-quality segmentation masks. These masks were annotated fully automatically by SAM and, as verified by human ratings and numerous experiments, are of high quality and diversity. Images are grouped by number of masks per image for visualization (there are ∼100 masks per image on average).

-## Key Features

+## Key Features of the Segment Anything Model (SAM)

-- **Promptable Segmentation Task:** SAM is designed for a promptable segmentation task, enabling it to return a valid segmentation mask given any segmentation prompt, such as spatial or text information identifying an object.

-- **Advanced Architecture:** SAM utilizes a powerful image encoder, a prompt encoder, and a lightweight mask decoder. This architecture enables flexible prompting, real-time mask computation, and ambiguity awareness in segmentation.

-- **SA-1B Dataset:** The Segment Anything project introduces the SA-1B dataset, which contains over 1 billion masks on 11 million images. This dataset is the largest segmentation dataset to date, providing SAM with a diverse and large-scale source of data for training.

-- **Zero-Shot Performance:** SAM demonstrates remarkable zero-shot performance across a range of segmentation tasks, allowing it to be used out-of-the-box with prompt engineering for various applications.

+- **Promptable Segmentation Task:** SAM was designed with a promptable segmentation task in mind, allowing it to generate valid segmentation masks from any given prompt, such as spatial or text clues identifying an object.

+- **Advanced Architecture:** The Segment Anything Model employs a powerful image encoder, a prompt encoder, and a lightweight mask decoder. This unique architecture enables flexible prompting, real-time mask computation, and ambiguity awareness in segmentation tasks.

+- **The SA-1B Dataset:** Introduced by the Segment Anything project, the SA-1B dataset features over 1 billion masks on 11 million images. As the largest segmentation dataset to date, it provides SAM with a diverse and large-scale training data source.

+- **Zero-Shot Performance:** SAM displays outstanding zero-shot performance across various segmentation tasks, so it can be used out of the box, through prompt engineering, for diverse applications.

-For more information about the Segment Anything Model and the SA-1B dataset, please refer to the [Segment Anything website](https://segment-anything.com) and the research paper [Segment Anything](https://arxiv.org/abs/2304.02643).

+For an in-depth look at the Segment Anything Model and the SA-1B dataset, please visit the [Segment Anything website](https://segment-anything.com) and check out the research paper [Segment Anything](https://arxiv.org/abs/2304.02643).

-## Usage

+## How to Use SAM: Versatility and Power in Image Segmentation

-SAM can be used for a variety of downstream tasks involving object and image distributions beyond its training data. Examples include edge detection, object proposal generation, instance segmentation, and preliminary text-to-mask prediction. By employing prompt engineering, SAM can adapt to new tasks and data distributions in a zero-shot manner, making it a versatile and powerful tool for image segmentation tasks.

+The Segment Anything Model can be employed for a multitude of downstream tasks that go beyond its training data. This includes edge detection, object proposal generation, instance segmentation, and preliminary text-to-mask prediction. With prompt engineering, SAM can swiftly adapt to new tasks and data distributions in a zero-shot manner, establishing it as a versatile and potent tool for all your image segmentation needs.

```python

from ultralytics import SAM

@@ -33,14 +37,14 @@ model.info() # display model information

model.predict('path/to/image.jpg') # predict

```

-## Supported Tasks

+## Available Models and Supported Tasks

| Model Type | Pre-trained Weights | Tasks Supported |

|------------|---------------------|-----------------------|

-| sam base | `sam_b.pt` | Instance Segmentation |

-| sam large | `sam_l.pt` | Instance Segmentation |

+| SAM base | `sam_b.pt` | Instance Segmentation |

+| SAM large | `sam_l.pt` | Instance Segmentation |

-## Supported Modes

+## Operating Modes

| Mode | Supported |

|------------|--------------------|

@@ -48,13 +52,13 @@ model.predict('path/to/image.jpg') # predict

| Validation | :x: |

| Training | :x: |

-## Auto-Annotation

+## Auto-Annotation: A Quick Path to Segmentation Datasets

-Auto-annotation is an essential feature that allows you to generate a [segmentation dataset](https://docs.ultralytics.com/datasets/segment) using a pre-trained detection model. It enables you to quickly and accurately annotate a large number of images without the need for manual labeling, saving time and effort.

+Auto-annotation is a key feature that pairs SAM with a pre-trained detection model to generate a [segmentation dataset](https://docs.ultralytics.com/datasets/segment). This feature enables rapid and accurate annotation of a large number of images, bypassing the need for time-consuming manual labeling.

-### Generate Segmentation Dataset Using a Detection Model

+### Generate Your Segmentation Dataset Using a Detection Model

-To auto-annotate your dataset using the Ultralytics framework, you can use the `auto_annotate` function as shown below:

+To auto-annotate your dataset with the Ultralytics framework, use the `auto_annotate` function as shown below:

```python

from ultralytics.yolo.data.annotator import auto_annotate

@@ -70,13 +74,13 @@ auto_annotate(data="path/to/images", det_model="yolov8x.pt", sam_model='sam_b.pt

| device | str, optional | Device to run the models on. Defaults to an empty string (CPU or GPU, if available). | |

| output_dir | str, None, optional | Directory to save the annotated results. Defaults to a 'labels' folder in the same directory as 'data'. | None |

-The `auto_annotate` function takes the path to your images, along with optional arguments for specifying the pre-trained detection and SAM segmentation models, the device to run the models on, and the output directory for saving the annotated results.

+The `auto_annotate` function takes the path to your images, with optional arguments for specifying the pre-trained detection and SAM segmentation models, the device to run the models on, and the output directory for saving the annotated results.

-By leveraging the power of pre-trained models, auto-annotation can significantly reduce the time and effort required for creating high-quality segmentation datasets. This feature is particularly useful for researchers and developers working with large image collections, as it allows them to focus on model development and evaluation rather than manual annotation.

+Auto-annotation with pre-trained models can dramatically cut down the time and effort required for creating high-quality segmentation datasets. This feature is especially beneficial for researchers and developers dealing with large image collections, as it allows them to focus on model development and evaluation rather than manual annotation.
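Assuming the annotated results follow the Ultralytics segmentation label format linked above (one text line per object: a class index followed by the object's polygon as x y pairs normalized to the image size), a single label line can be illustrated as below. `polygon_to_label_line` is a hypothetical helper for illustration only; it is not part of `auto_annotate`, and the number formatting in files written by `auto_annotate` may differ.

```python
from typing import Sequence, Tuple


def polygon_to_label_line(class_id: int, polygon: Sequence[Tuple[float, float]], img_w: int, img_h: int) -> str:
    """Hypothetical helper: format one object as '<class> x1 y1 x2 y2 ...' normalized to [0, 1]."""
    if len(polygon) < 3:
        raise ValueError("a polygon needs at least 3 points")
    coords = []
    for x, y in polygon:
        coords.append(f"{x / img_w:.6f}")  # x normalized by image width
        coords.append(f"{y / img_h:.6f}")  # y normalized by image height
    return f"{class_id} " + " ".join(coords)


# A triangle in a 100x200 (width x height) image, labelled as class 0.
print(polygon_to_label_line(0, [(10, 20), (30, 20), (30, 40)], img_w=100, img_h=200))
# 0 0.100000 0.100000 0.300000 0.100000 0.300000 0.200000
```

Normalizing by width and height keeps labels independent of the image resolution, so the same label file stays valid if images are resized during training.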

## Citations and Acknowledgements

-If you use SAM in your research or development work, please cite the following paper:

+If you find SAM useful in your research or development work, please consider citing the following paper:

```bibtex

@misc{kirillov2023segment,

@@ -89,4 +93,6 @@ If you use SAM in your research or development work, please cite the following p

}

```

-We would like to acknowledge Meta AI for creating and maintaining this valuable resource for the computer vision community.

\ No newline at end of file

+We would like to express our gratitude to Meta AI for creating and maintaining this valuable resource for the computer vision community.

+

+*Keywords: Segment Anything, Segment Anything Model, SAM, Meta SAM, image segmentation, promptable segmentation, zero-shot performance, SA-1B dataset, advanced architecture, auto-annotation, Ultralytics, pre-trained models, SAM base, SAM large, instance segmentation, computer vision, AI, artificial intelligence, machine learning, data annotation, segmentation masks, detection model, YOLO detection model, bibtex, Meta AI.*

diff --git a/docs/models/yolo-nas.md b/docs/models/yolo-nas.md

new file mode 100644

index 0000000..a39cec2

--- /dev/null

+++ b/docs/models/yolo-nas.md

@@ -0,0 +1,108 @@

+---

+comments: true

+description: Dive into YOLO-NAS, Deci's next-generation object detection model, offering breakthroughs in speed and accuracy. Learn how to utilize pre-trained models using the Ultralytics Python API for various tasks.

+---

+

+# Deci's YOLO-NAS

+

+## Overview

+

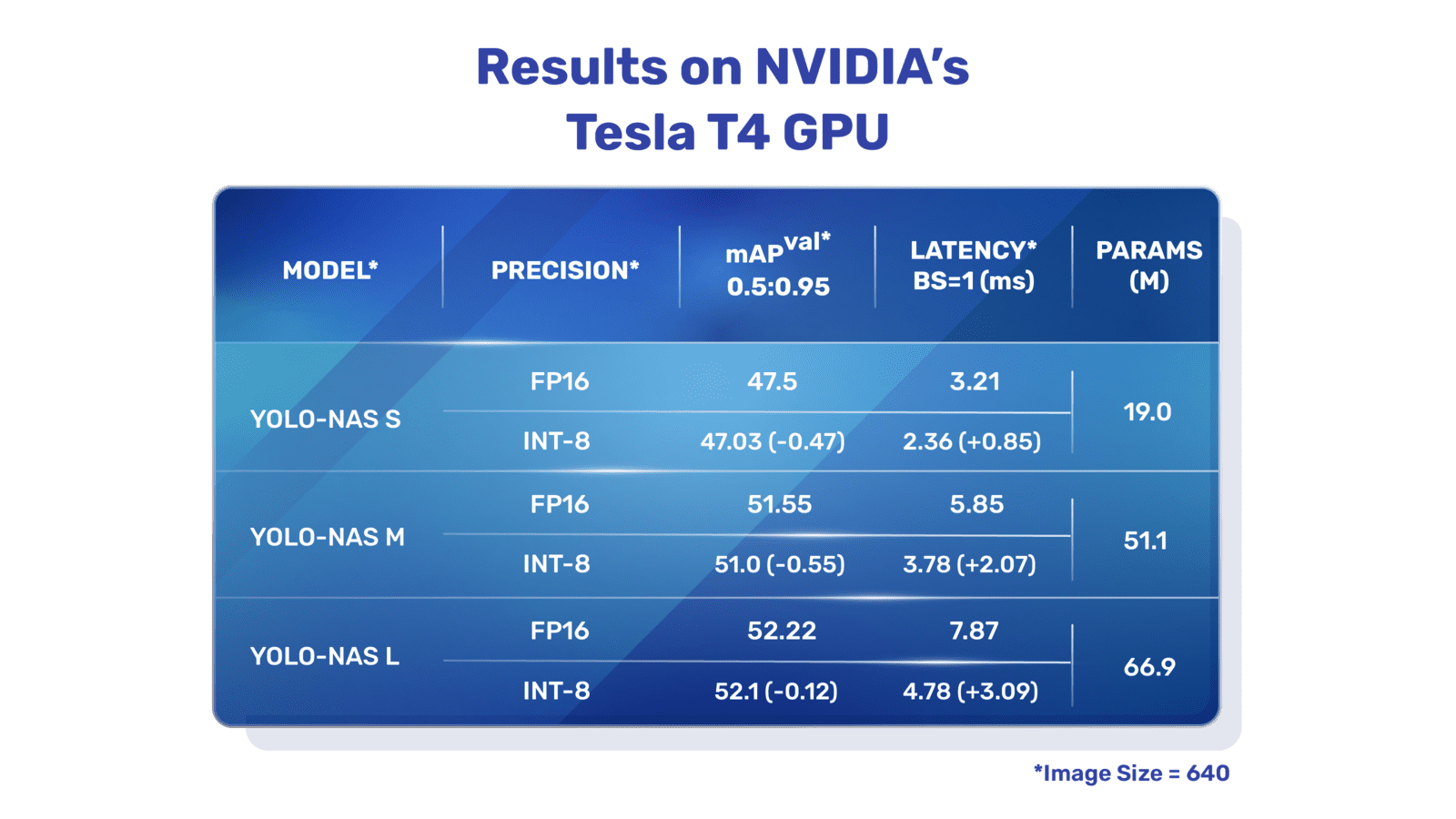

+Developed by Deci AI, YOLO-NAS is a groundbreaking foundation model for object detection. It is the product of advanced Neural Architecture Search technology and is designed to address the limitations of previous YOLO models. With significant improvements in quantization support and accuracy-latency trade-offs, YOLO-NAS represents a major leap in object detection.

+

+

+**Overview of YOLO-NAS.** YOLO-NAS employs quantization-aware blocks and selective quantization for optimal performance. When converted to its INT8 quantized version, the model experiences only a minimal precision drop (for example, from 47.5 to 47.03 mAP for YOLO-NAS S), a significant improvement over other models. These advancements culminate in a superior architecture with strong object detection capabilities and performance.

+

+### Key Features

+

+- **Quantization-Friendly Basic Block:** YOLO-NAS introduces a new quantization-friendly basic block, addressing one of the significant limitations of previous YOLO models.

+- **Sophisticated Training and Quantization:** YOLO-NAS leverages advanced training schemes and post-training quantization to enhance performance.

+- **AutoNAC Optimization and Pre-training:** YOLO-NAS utilizes AutoNAC optimization and is pre-trained on prominent datasets such as COCO, Objects365, and Roboflow 100. This pre-training makes it well suited for downstream object detection tasks in production environments.
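Post-training quantization, mentioned above, maps trained floating-point weights to 8-bit integers. The sketch below shows generic symmetric per-tensor INT8 quantization in plain Python to illustrate where the small precision loss comes from. It is a textbook scheme chosen for illustration, not the quantization-aware blocks or selective quantization that YOLO-NAS actually uses, and `quantize_int8` and `dequantize` are hypothetical names.

```python
from typing import List, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Generic symmetric per-tensor scheme (not YOLO-NAS's): w is approximated by q * scale, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    """Map integers back to floats; the gap to the originals is the rounding error."""
    return [v * scale for v in q]


weights = [0.5, -1.0, 0.25, 0.013]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"max error {max_err:.5f} (bound {scale / 2:.5f})")
```

Each weight's rounding error is bounded by half a quantization step (`scale / 2`); how much accuracy those errors cost depends on the architecture, which is what a quantization-friendly block is meant to address.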

+

+## Pre-trained Models

+

+Experience the power of next-generation object detection with the pre-trained YOLO-NAS models provided by Ultralytics. These models are designed to deliver strong performance in both speed and accuracy. Choose from a variety of options tailored to your specific needs:

+

+| Model | mAP | Latency (ms) |

+|------------------|-------|--------------|

+| YOLO-NAS S | 47.5 | 3.21 |

+| YOLO-NAS M | 51.55 | 5.85 |

+| YOLO-NAS L | 52.22 | 7.87 |

+| YOLO-NAS S INT-8 | 47.03 | 2.36 |

+| YOLO-NAS M INT-8 | 51.0 | 3.78 |

+| YOLO-NAS L INT-8 | 52.1 | 4.78 |

+

+Each model variant is designed to offer a balance between Mean Average Precision (mAP) and latency, helping you optimize your object detection tasks for both performance and speed.
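The table makes the INT8 trade-off easy to quantify. The snippet below is plain arithmetic on the table's own numbers, computing how much mAP each INT8 variant gives up and how many times lower its latency is; `int8_tradeoff` is a hypothetical helper, and no benchmark settings beyond those behind the table are assumed.

```python
# (mAP, latency in ms) pairs copied from the table above: (full precision, INT8).
variants = {
    "S": ((47.5, 3.21), (47.03, 2.36)),
    "M": ((51.55, 5.85), (51.0, 3.78)),
    "L": ((52.22, 7.87), (52.1, 4.78)),
}


def int8_tradeoff(fp, int8):
    """Return (mAP lost, latency speedup) when switching from the full-precision model to INT8."""
    (fp_map, fp_ms), (q_map, q_ms) = fp, int8
    return round(fp_map - q_map, 2), round(fp_ms / q_ms, 2)


for size, (fp, int8) in variants.items():
    drop, speedup = int8_tradeoff(fp, int8)
    print(f"YOLO-NAS {size}: -{drop} mAP, {speedup}x faster")
# YOLO-NAS S: -0.47 mAP, 1.36x faster
# YOLO-NAS M: -0.55 mAP, 1.55x faster
# YOLO-NAS L: -0.12 mAP, 1.65x faster
```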

+

+## Usage

+

+### Python API

+

+The YOLO-NAS models are easy to integrate into your Python applications. Ultralytics provides a user-friendly Python API to streamline the process.

+

+#### Predict Usage

+

+To perform object detection on an image, use the `predict` method as shown below:

+

+```python

+from ultralytics import NAS

+

+model = NAS('yolo_nas_s')

+results = model.predict('ultralytics/assets/bus.jpg')

+```

+

+This snippet demonstrates the simplicity of loading a pre-trained model and running a prediction on an image.

+

+#### Val Usage

+

+Validation of the model on a dataset can be done as follows:

+

+```python

+from ultralytics import NAS

+

+model = NAS('yolo_nas_s')

+results = model.val(data='coco8.yaml')

+```

+

+In this example, the model is validated against the dataset specified in the 'coco8.yaml' file.

+

+### Supported Tasks

+

+The YOLO-NAS models are primarily designed for object detection tasks. You can download the pre-trained weights for each variant of the model as follows:

+

+| Model Type | Pre-trained Weights | Tasks Supported |

+|------------|-----------------------------------------------------------------------------------------------|------------------|

+| YOLO-NAS-s | [yolo_nas_s.pt](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolo_nas_s.pt) | Object Detection |

+| YOLO-NAS-m | [yolo_nas_m.pt](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolo_nas_m.pt) | Object Detection |

+| YOLO-NAS-l | [yolo_nas_l.pt](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolo_nas_l.pt) | Object Detection |

+

+### Supported Modes

+

+The YOLO-NAS models support both inference and validation modes, allowing you to predict and validate results with ease. Training mode, however, is currently not supported.

+

+| Mode | Supported |

+|------------|--------------------|

+| Inference | :heavy_check_mark: |

+| Validation | :heavy_check_mark: |

+| Training | :x: |

+

+Harness the power of the YOLO-NAS models to drive your object detection tasks to new heights of performance and speed.

+

+## Acknowledgements and Citations

+

+If you employ YOLO-NAS in your research or development work, please cite SuperGradients:

+

+```bibtex

+@misc{supergradients,

+ doi = {10.5281/ZENODO.7789328},

+ url = {https://zenodo.org/record/7789328},

+ author = {Aharon, Shay and {Louis-Dupont} and {Ofri Masad} and Yurkova, Kate and {Lotem Fridman} and {Lkdci} and Khvedchenya, Eugene and Rubin, Ran and Bagrov, Natan and Tymchenko, Borys and Keren, Tomer and Zhilko, Alexander and {Eran-Deci}},

+ title = {Super-Gradients},

+ publisher = {GitHub},

+ journal = {GitHub repository},

+ year = {2021},

+}

+```

+

+We express our gratitude to Deci AI's [SuperGradients](https://github.com/Deci-AI/super-gradients/) team for their efforts in creating and maintaining this valuable resource for the computer vision community. We believe YOLO-NAS, with its innovative architecture and superior object detection capabilities, will become a critical tool for developers and researchers alike.

+

+*Keywords: YOLO-NAS, Deci AI, object detection, deep learning, neural architecture search, Ultralytics Python API, YOLO model, SuperGradients, pre-trained models, quantization-friendly basic block, advanced training schemes, post-training quantization, AutoNAC optimization, COCO, Objects365, Roboflow 100*

\ No newline at end of file

diff --git a/docs/models/yolov3.md b/docs/models/yolov3.md

index 0f02445..0ca49ee 100644

--- a/docs/models/yolov3.md

+++ b/docs/models/yolov3.md

@@ -15,6 +15,8 @@ This document presents an overview of three closely related object detection mod

3. **YOLOv3u:** This is an updated version of YOLOv3-Ultralytics that incorporates the anchor-free, objectness-free split head used in YOLOv8 models. YOLOv3u maintains the same backbone and neck architecture as YOLOv3 but with the updated detection head from YOLOv8.

+

+

## Key Features

- **YOLOv3:** Introduced detection at three different scales, using output grids of 13x13, 26x26, and 52x52 for a 416x416 input. This significantly improved detection accuracy for objects of different sizes. Additionally, YOLOv3 added features such as multi-label predictions for each bounding box and a better feature extractor network.
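The three sizes 13x13, 26x26, and 52x52 are output grid sizes that follow from the network's downsampling strides. Assuming the common 416x416 input, strides of 32, 16, and 8, and three anchor boxes per grid cell at each scale (as in the original anchor-based YOLOv3, not the anchor-free YOLOv3u), the grid sizes and the total number of predicted boxes work out as below.

```python
INPUT_SIZE = 416        # assumed square input resolution
STRIDES = (32, 16, 8)   # downsampling factor of each detection scale
ANCHORS_PER_CELL = 3    # anchor boxes predicted per grid cell at each scale

grids = [INPUT_SIZE // s for s in STRIDES]
total_boxes = sum(g * g * ANCHORS_PER_CELL for g in grids)
print(grids, total_boxes)  # [13, 26, 52] 10647
```

The coarse 13x13 grid covers large objects with few cells, while the fine 52x52 grid contributes most of the candidate boxes and helps with small objects.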

diff --git a/docs/models/yolov5.md b/docs/models/yolov5.md

index 1f40631..ac0d075 100644

--- a/docs/models/yolov5.md

+++ b/docs/models/yolov5.md

@@ -1,14 +1,16 @@

---

comments: true

-description: YOLOv5u by Ultralytics explained. Discover the evolution of this model and its key specifications. Experience faster and more accurate object detection.

+description: YOLOv5 by Ultralytics explained. Discover the evolution of this model and its key specifications. Experience faster and more accurate object detection.

---

-# YOLOv5u

+# YOLOv5

## Overview

YOLOv5u is an enhanced version of the [YOLOv5](https://github.com/ultralytics/yolov5) object detection model from Ultralytics. This iteration incorporates the anchor-free, objectness-free split head that is featured in the [YOLOv8](./yolov8.md) models. Although it maintains the same backbone and neck architecture as YOLOv5, YOLOv5u provides an improved accuracy-speed tradeoff for object detection tasks, making it a robust choice for numerous applications.

+

+

## Key Features

- **Anchor-free Split Ultralytics Head:** YOLOv5u replaces the conventional anchor-based detection head with an anchor-free split Ultralytics head, boosting performance in object detection tasks.

diff --git a/docs/models/yolov8.md b/docs/models/yolov8.md

index 159ed56..e1a0a5c 100644

--- a/docs/models/yolov8.md

+++ b/docs/models/yolov8.md

@@ -9,6 +9,8 @@ description: Learn about YOLOv8's pre-trained weights supporting detection, inst

YOLOv8 is the latest iteration in the YOLO series of real-time object detectors, offering cutting-edge performance in terms of accuracy and speed. Building upon the advancements of previous YOLO versions, YOLOv8 introduces new features and optimizations that make it an ideal choice for various object detection tasks in a wide range of applications.

+

+

## Key Features

- **Advanced Backbone and Neck Architectures:** YOLOv8 employs state-of-the-art backbone and neck architectures, resulting in improved feature extraction and object detection performance.

@@ -76,7 +78,6 @@ YOLOv8 is the latest iteration in the YOLO series of real-time object detectors,

| [YOLOv8x-pose](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8x-pose.pt) | 640 | 69.2 | 90.2 | 1607.1 | 3.73 | 69.4 | 263.2 |

| [YOLOv8x-pose-p6](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8x-pose-p6.pt) | 1280 | 71.6 | 91.2 | 4088.7 | 10.04 | 99.1 | 1066.4 |

-

## Usage

You can use YOLOv8 for object detection tasks using the Ultralytics pip package. The following is a sample code snippet showing how to use YOLOv8 models for inference:

@@ -94,7 +95,6 @@ results = model('image.jpg')

results.print()

```

-

## Citation

If you use the YOLOv8 model or any other software from this repository in your work, please cite it using the following format:

diff --git a/docs/modes/index.md b/docs/modes/index.md

index 8e729ba..c9ae14a 100644

--- a/docs/modes/index.md

+++ b/docs/modes/index.md

@@ -9,12 +9,12 @@ description: Use Ultralytics YOLOv8 Modes (Train, Val, Predict, Export, Track, B

Ultralytics YOLOv8 supports several **modes** that can be used to perform different tasks. These modes are:

-**Train**: For training a YOLOv8 model on a custom dataset.

-**Val**: For validating a YOLOv8 model after it has been trained.

-**Predict**: For making predictions using a trained YOLOv8 model on new images or videos.

-**Export**: For exporting a YOLOv8 model to a format that can be used for deployment.

-**Track**: For tracking objects in real-time using a YOLOv8 model.

-**Benchmark**: For benchmarking YOLOv8 exports (ONNX, TensorRT, etc.) speed and accuracy.

+- **Train**: For training a YOLOv8 model on a custom dataset.

+- **Val**: For validating a YOLOv8 model after it has been trained.

+- **Predict**: For making predictions using a trained YOLOv8 model on new images or videos.

+- **Export**: For exporting a YOLOv8 model to a format that can be used for deployment.

+- **Track**: For tracking objects in real-time using a YOLOv8 model.

+- **Benchmark**: For benchmarking YOLOv8 exports (ONNX, TensorRT, etc.) speed and accuracy.

## [Train](train.md)

diff --git a/mkdocs.yml b/mkdocs.yml

index 57d8809..c1f2049 100644

--- a/mkdocs.yml

+++ b/mkdocs.yml

@@ -166,6 +166,7 @@ nav:

- YOLOv6: models/yolov6.md

- YOLOv8: models/yolov8.md

- SAM (Segment Anything Model): models/sam.md

+ - YOLO-NAS (Neural Architecture Search): models/yolo-nas.md

- RT-DETR (Realtime Detection Transformer): models/rtdetr.md

- Datasets:

- datasets/index.md

diff --git a/ultralytics/__init__.py b/ultralytics/__init__.py

index 83ca7d8..cd12c76 100644

--- a/ultralytics/__init__.py

+++ b/ultralytics/__init__.py

@@ -6,6 +6,7 @@ from ultralytics.hub import start

from ultralytics.vit.rtdetr import RTDETR

from ultralytics.vit.sam import SAM

from ultralytics.yolo.engine.model import YOLO

+from ultralytics.yolo.nas import NAS

from ultralytics.yolo.utils.checks import check_yolo as checks

-__all__ = '__version__', 'YOLO', 'SAM', 'RTDETR', 'checks', 'start' # allow simpler import

+__all__ = '__version__', 'YOLO', 'NAS', 'SAM', 'RTDETR', 'checks', 'start' # allow simpler import
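The `from ultralytics.yolo.nas import NAS` line is what makes `from ultralytics import NAS` work. The `__all__` tuple additionally controls which names `from ultralytics import *` exports. The following self-contained sketch shows that effect on a throwaway module. The module name `fakepkg` and its contents are hypothetical.

```python
import sys
import types

# Build a throwaway stand-in for a package __init__ (hypothetical module name 'fakepkg')
fakepkg = types.ModuleType('fakepkg')
exec(
    "class YOLO: pass\n"
    "class NAS: pass\n"
    "_helper = object()\n"
    "__all__ = 'YOLO', 'NAS'  # a tuple works as well as a list\n",
    fakepkg.__dict__,
)
sys.modules['fakepkg'] = fakepkg

# Star-import into a fresh namespace: only names listed in __all__ are copied
namespace = {}
exec('from fakepkg import *', namespace)
exported = sorted(k for k in namespace if k != '__builtins__')
print(exported)  # ['NAS', 'YOLO']
```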

diff --git a/ultralytics/vit/rtdetr/model.py b/ultralytics/vit/rtdetr/model.py

index 10c7d72..1297e15 100644

--- a/ultralytics/vit/rtdetr/model.py

+++ b/ultralytics/vit/rtdetr/model.py

@@ -110,3 +110,12 @@ class RTDETR:

         if args.batch == DEFAULT_CFG.batch:
             args.batch = 1  # default to 1 if not modified
         return Exporter(overrides=args)(model=self.model)
+
+    def __call__(self, source=None, stream=False, **kwargs):
+        """Calls the 'predict' function with given arguments to perform object detection."""
+        return self.predict(source, stream, **kwargs)
+
+    def __getattr__(self, attr):
+        """Raises error if object has no requested attribute."""
+        name = self.__class__.__name__
+        raise AttributeError(f"'{name}' object has no attribute '{attr}'. See valid attributes below.\n{self.__doc__}")
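The two methods added above make the model object callable and replace Python's default `AttributeError` message with one that also prints the class docstring. The following self-contained sketch applies the same pattern to a hypothetical `DemoModel` class, which is not part of Ultralytics. Note that `__getattr__` only runs after normal attribute lookup has failed, so existing methods are unaffected.

```python
class DemoModel:
    """Hypothetical demo wrapper. Valid attributes: predict()."""

    def predict(self, source=None, stream=False, **kwargs):
        # Stand-in for real inference: just echo what was requested
        return {'source': source, 'stream': stream, **kwargs}

    def __call__(self, source=None, stream=False, **kwargs):
        """Alias for predict(), so model(source) behaves like model.predict(source)."""
        return self.predict(source, stream, **kwargs)

    def __getattr__(self, attr):
        """Only reached when normal attribute lookup fails."""
        name = self.__class__.__name__
        raise AttributeError(f"'{name}' object has no attribute '{attr}'. See valid attributes below.\n{self.__doc__}")


model = DemoModel()
print(model('bus.jpg', conf=0.5) == model.predict('bus.jpg', conf=0.5))  # True
print(hasattr(model, 'trian'))  # False: the typo falls through to __getattr__
try:
    model.trian
except AttributeError as err:
    message = str(err)
print(message.splitlines()[0])
```

Reading `self.__doc__` inside `__getattr__` is safe because `__doc__` is always found on the class. Any attribute that could be missing would instead recurse back into `__getattr__`.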

diff --git a/ultralytics/vit/sam/model.py b/ultralytics/vit/sam/model.py

index d65433c..a60c8cf 100644

--- a/ultralytics/vit/sam/model.py

+++ b/ultralytics/vit/sam/model.py

@@ -35,6 +35,15 @@ class SAM:

         """Run validation given dataset."""
         raise NotImplementedError("SAM models don't support validation")
 
+    def __call__(self, source=None, stream=False, **kwargs):
+        """Calls the 'predict' function with given arguments to perform segmentation."""
+        return self.predict(source, stream, **kwargs)
+
+    def __getattr__(self, attr):
+        """Raises error if object has no requested attribute."""
+        name = self.__class__.__name__
+        raise AttributeError(f"'{name}' object has no attribute '{attr}'. See valid attributes below.\n{self.__doc__}")
+
     def info(self, detailed=False, verbose=True):
         """
         Logs model info.

diff --git a/ultralytics/yolo/nas/__init__.py b/ultralytics/yolo/nas/__init__.py

new file mode 100644

index 0000000..eec3837

--- /dev/null

+++ b/ultralytics/yolo/nas/__init__.py

@@ -0,0 +1,7 @@

+# Ultralytics YOLO 🚀, AGPL-3.0 license

+

+from .model import NAS

+from .predict import NASPredictor

+from .val import NASValidator

+

+__all__ = 'NASPredictor', 'NASValidator', 'NAS'

diff --git a/ultralytics/yolo/nas/model.py b/ultralytics/yolo/nas/model.py

new file mode 100644

index 0000000..f375dac

--- /dev/null

+++ b/ultralytics/yolo/nas/model.py

@@ -0,0 +1,125 @@

+# Ultralytics YOLO 🚀, AGPL-3.0 license
+"""
+# NAS model interface
+"""
+
+from pathlib import Path
+
+import torch
+
+from ultralytics.yolo.cfg import get_cfg
+from ultralytics.yolo.engine.exporter import Exporter
+from ultralytics.yolo.utils import DEFAULT_CFG, DEFAULT_CFG_DICT, LOGGER, ROOT, is_git_dir
+from ultralytics.yolo.utils.checks import check_imgsz
+from ultralytics.yolo.utils.torch_utils import model_info, smart_inference_mode
+
+from .predict import NASPredictor
+from .val import NASValidator
+
+
+class NAS:
+    """YOLO-NAS model interface: wraps a super-gradients detection model for prediction, validation and export."""
+    def __init__(self, model='yolo_nas_s.pt') -> None:
+        """Load a pretrained YOLO-NAS model from a local '.pt' file or by super-gradients name, e.g. 'yolo_nas_s'."""
+        import super_gradients
+
+        self.predictor = None
+        suffix = Path(model).suffix
+        if suffix == '.pt':
+            self._load(model)
+        elif suffix == '':
+            self.model = super_gradients.training.models.get(model, pretrained_weights='coco')
+        self.task = 'detect'
+        self.model.args = DEFAULT_CFG_DICT  # attach args to model
+
+        # Standardize model
+        self.model.fuse = lambda verbose=True: self.model
+        self.model.stride = torch.tensor([32])
+        self.model.names = dict(enumerate(self.model._class_names))
+        self.model.is_fused = lambda: False  # for info()
+        self.model.yaml = {}  # for info()
+        self.info()
+
+    @smart_inference_mode()
+    def _load(self, weights: str):
+        self.model = torch.load(weights)
+
+    @smart_inference_mode()
+    def predict(self, source=None, stream=False, **kwargs):
+        """
+        Perform prediction using the YOLO-NAS model.
+
+        Args:
+            source (str | int | PIL | np.ndarray): The source of the image to make predictions on.
+                Accepts all source types accepted by the YOLO model.
+            stream (bool): Whether to stream the predictions or not. Defaults to False.
+            **kwargs : Additional keyword arguments passed to the predictor.
+                Check the 'configuration' section in the documentation for all available options.
+
+        Returns:
+            (List[ultralytics.yolo.engine.results.Results]): The prediction results.
+        """
+        if source is None:
+            source = ROOT / 'assets' if is_git_dir() else 'https://ultralytics.com/images/bus.jpg'
+            LOGGER.warning(f"WARNING ⚠️ 'source' is missing. Using 'source={source}'.")
+        overrides = dict(conf=0.25, task='detect', mode='predict')
+        overrides.update(kwargs)  # prefer kwargs
+        if not self.predictor:
+            self.predictor = NASPredictor(overrides=overrides)
+            self.predictor.setup_model(model=self.model)
+        else:  # only update args if predictor is already setup
+            self.predictor.args = get_cfg(self.predictor.args, overrides)
+        return self.predictor(source, stream=stream)
+
+    def train(self, **kwargs):
+        """Raise an error, as NAS models do not support training."""
+        raise NotImplementedError("NAS models don't support training")
+
+    def val(self, **kwargs):
+        """Run validation given dataset."""
+        overrides = dict(task='detect', mode='val')
+        overrides.update(kwargs)  # prefer kwargs
+        args = get_cfg(cfg=DEFAULT_CFG, overrides=overrides)
+        args.imgsz = check_imgsz(args.imgsz, max_dim=1)
+        validator = NASValidator(args=args)
+        validator(model=self.model)
+        self.metrics = validator.metrics
+        return validator.metrics
+
+    @smart_inference_mode()
+    def export(self, **kwargs):
+        """
+        Export model.
+
+        Args:
+            **kwargs : Any other args accepted by the exporter. To see all args check 'configuration' section in docs
+        """
+        overrides = dict(task='detect')
+        overrides.update(kwargs)
+        overrides['mode'] = 'export'
+        args = get_cfg(cfg=DEFAULT_CFG, overrides=overrides)
+        args.task = self.task
+        if args.imgsz == DEFAULT_CFG.imgsz:
+            args.imgsz = self.model.args['imgsz']  # use trained imgsz unless custom value is passed
+        if args.batch == DEFAULT_CFG.batch:
+            args.batch = 1  # default to 1 if not modified
+        return Exporter(overrides=args)(model=self.model)
+
+    def info(self, detailed=False, verbose=True):
+        """
+        Logs model info.
+
+        Args:
+            detailed (bool): Show detailed information about model.
+            verbose (bool): Controls verbosity.
+        """
+        return model_info(self.model, detailed=detailed, verbose=verbose, imgsz=640)
+
+    def __call__(self, source=None, stream=False, **kwargs):
+        """Calls the 'predict' function with given arguments to perform object detection."""
+        return self.predict(source, stream, **kwargs)
+
+    def __getattr__(self, attr):
+        """Raises error if object has no requested attribute."""
+        name = self.__class__.__name__
+        raise AttributeError(f"'{name}' object has no attribute '{attr}'. See valid attributes below.\n{self.__doc__}")
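`NAS.predict()` and `NAS.export()` both merge the caller's keyword arguments over built-in defaults, with `kwargs` taking precedence. After that merge, `export()` falls back to the model's stored `imgsz`, and to a batch size of 1, whenever those values were left at their config defaults. The following plain-dict sketch illustrates that precedence. `DEFAULTS` and its values are hypothetical stand-ins for `DEFAULT_CFG`, and `predict_overrides` and `export_args` are illustrative names, not library functions.

```python
# Hypothetical stand-in for the library's DEFAULT_CFG values
DEFAULTS = {'imgsz': 640, 'batch': 16, 'conf': None, 'task': 'detect', 'mode': 'train'}


def predict_overrides(**kwargs):
    """Prediction defaults first, then user kwargs win (mirrors overrides.update(kwargs))."""
    overrides = dict(conf=0.25, task='detect', mode='predict')
    overrides.update(kwargs)  # prefer kwargs
    return {**DEFAULTS, **overrides}


def export_args(trained_imgsz, **kwargs):
    """Resolve export arguments in the same order as NAS.export(), under the assumptions above."""
    overrides = dict(task='detect')
    overrides.update(kwargs)
    overrides['mode'] = 'export'  # forced, even if the caller passed another mode
    args = {**DEFAULTS, **overrides}
    if args['imgsz'] == DEFAULTS['imgsz']:
        args['imgsz'] = trained_imgsz  # keep the model's own image size unless one was passed
    if args['batch'] == DEFAULTS['batch']:
        args['batch'] = 1  # single-image export unless a batch size was passed
    return args


print(predict_overrides()['conf'])  # 0.25
print(predict_overrides(conf=0.5)['conf'])  # 0.5
args = export_args(trained_imgsz=320, format='onnx')
print(args)
```

One side effect of comparing against the default is that an explicit `imgsz=640` (or `batch=16`) cannot be told apart from "not passed" and is therefore replaced as well.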

diff --git a/ultralytics/yolo/nas/predict.py b/ultralytics/yolo/nas/predict.py

new file mode 100644

index 0000000..e135bc1

--- /dev/null

+++ b/ultralytics/yolo/nas/predict.py

@@ -0,0 +1,35 @@

+# Ultralytics YOLO 🚀, AGPL-3.0 license
+
+import torch
+
+from ultralytics.yolo.engine.predictor import BasePredictor
+from ultralytics.yolo.engine.results import Results
+from ultralytics.yolo.utils import ops
+from ultralytics.yolo.utils.ops import xyxy2xywh
+
+
+class NASPredictor(BasePredictor):
+
+    def postprocess(self, preds_in, img, orig_imgs):
+        """Postprocesses predictions and returns a list of Results objects."""
+
+        # Concatenate boxes and class scores
+        boxes = xyxy2xywh(preds_in[0][0])
+        preds = torch.cat((boxes, preds_in[0][1]), -1).permute(0, 2, 1)
+
+        preds = ops.non_max_suppression(preds,
+                                        self.args.conf,
+                                        self.args.iou,
+                                        agnostic=self.args.agnostic_nms,
+                                        max_det=self.args.max_det,
+                                        classes=self.args.classes)
+
+        results = []
+        for i, pred in enumerate(preds):
+            orig_img = orig_imgs[i] if isinstance(orig_imgs, list) else orig_imgs
+            if not isinstance(orig_imgs, torch.Tensor):
+                pred[:, :4] = ops.scale_boxes(img.shape[2:], pred[:, :4], orig_img.shape)
+            path = self.batch[0]
+            img_path = path[i] if isinstance(path, list) else path
+            results.append(Results(orig_img=orig_img, path=img_path, names=self.model.names, boxes=pred))
+        return results
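`NASPredictor.postprocess()` reshapes the super-gradients output into the layout that `ops.non_max_suppression()` consumes. It converts boxes from xyxy to centre-xywh, appends the class scores to each candidate, and calls `permute(0, 2, 1)` to go from `(batch, anchors, 4 + nc)` to `(batch, 4 + nc, anchors)`. The following dependency-free sketch handles a single image. It assumes `preds_in[0][0]` holds xyxy boxes and `preds_in[0][1]` holds per-class scores for the same candidates. These shapes are inferred from the code above rather than documented, and the helper names are hypothetical.

```python
def xyxy_to_xywh(box):
    """(x1, y1, x2, y2) -> (centre x, centre y, width, height)."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2, x2 - x1, y2 - y1)


def to_nms_layout(boxes_xyxy, scores):
    """Concatenate per-candidate boxes and class scores, then transpose to channels-first.

    boxes_xyxy: N boxes; scores: N sequences of nc class scores.
    Returns a (4 + nc) x N nested list, like torch.cat(...).permute(0, 2, 1) for one image.
    """
    rows = [list(xyxy_to_xywh(b)) + list(s) for b, s in zip(boxes_xyxy, scores)]  # N x (4 + nc)
    return [list(col) for col in zip(*rows)]  # (4 + nc) x N


boxes = [(0, 0, 10, 20), (5, 5, 15, 15)]
scores = [(0.9, 0.1), (0.2, 0.7)]
layout = to_nms_layout(boxes, scores)
print(len(layout), len(layout[0]))  # 6 2
print(layout[0])  # [5.0, 10.0]
```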

diff --git a/ultralytics/yolo/nas/val.py b/ultralytics/yolo/nas/val.py

new file mode 100644

index 0000000..474cf6b

--- /dev/null

+++ b/ultralytics/yolo/nas/val.py

@@ -0,0 +1,25 @@

+# Ultralytics YOLO 🚀, AGPL-3.0 license
+
+import torch
+
+from ultralytics.yolo.utils import ops
+from ultralytics.yolo.utils.ops import xyxy2xywh
+from ultralytics.yolo.v8.detect import DetectionValidator
+
+__all__ = ['NASValidator']
+
+
+class NASValidator(DetectionValidator):
+
+    def postprocess(self, preds_in):
+        """Apply Non-maximum suppression to prediction outputs."""
+        boxes = xyxy2xywh(preds_in[0][0])
+        preds = torch.cat((boxes, preds_in[0][1]), -1).permute(0, 2, 1)
+        return ops.non_max_suppression(preds,
+                                       self.args.conf,
+                                       self.args.iou,
+                                       labels=self.lb,
+                                       multi_label=False,
+                                       agnostic=self.args.single_cls,
+                                       max_det=self.args.max_det,
+                                       max_time_img=0.5)
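`NASPredictor` and `NASValidator` both pass the reshaped tensor to `ops.non_max_suppression()`. The following sketch shows a minimal greedy, class-agnostic, IoU-based NMS in plain Python. It illustrates the idea only. It omits what the Ultralytics function adds, such as per-class handling, `max_det`, `multi_label` and the time limit, and its thresholds are illustrative rather than the library's defaults.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, conf_thres=0.25, iou_thres=0.5):
    """Return indices of kept boxes, highest score first."""
    # Drop low-confidence candidates, then visit the rest from best to worst
    order = sorted((i for i, s in enumerate(scores) if s >= conf_thres), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap an already-kept box too much
        if all(iou(boxes[i], boxes[k]) <= iou_thres for k in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30), (0, 0, 5, 5)]
scores = [0.9, 0.8, 0.7, 0.1]
print(greedy_nms(boxes, scores))  # [0, 2]
```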

+

+All [Models](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/models) download automatically from the latest Ultralytics [release](https://github.com/ultralytics/assets/releases) on first use.
