diff --git a/docs/datasets/detect/coco8.md b/docs/datasets/detect/coco8.md

index 19480d2..a307d25 100644

--- a/docs/datasets/detect/coco8.md

+++ b/docs/datasets/detect/coco8.md

@@ -1,7 +1,79 @@

---

comments: true

+description: Get started with Ultralytics COCO8. Ideal for testing and debugging object detection models or experimenting with new detection approaches.

---

-# 🚧 Page Under Construction ⚒

+# COCO8 Dataset

-This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

+## Introduction

+

+[Ultralytics](https://ultralytics.com) COCO8 is a small, but versatile object detection dataset composed of the first 8

+images of the COCO train 2017 set, 4 for training and 4 for validation. This dataset is ideal for testing and debugging

+object detection models, or for experimenting with new detection approaches. With 8 images, it is small enough to be

+easily manageable, yet diverse enough to test training pipelines for errors and act as a sanity check before training

+larger datasets.

+

+This dataset is intended for use with Ultralytics [HUB](https://hub.ultralytics.com)

+and [YOLOv8](https://github.com/ultralytics/ultralytics).

+

+## Dataset YAML

+

+A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the COCO8 dataset, the `coco8.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco8.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco8.yaml).

+

+!!! example "ultralytics/datasets/coco8.yaml"

+

+ ```yaml

+ --8<-- "ultralytics/datasets/coco8.yaml"

+ ```

+

+## Usage

+

+To train a YOLOv8n model on the COCO8 dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

+

+!!! example "Train Example"

+

+ === "Python"

+

+ ```python

+ from ultralytics import YOLO

+

+ # Load a model

+ model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+

+ # Train the model

+ model.train(data='coco8.yaml', epochs=100, imgsz=640)

+ ```

+

+ === "CLI"

+

+ ```bash

+ # Start training from a pretrained *.pt model

+ yolo detect train data=coco8.yaml model=yolov8n.pt epochs=100 imgsz=640

+ ```

+

+## Sample Images and Annotations

+

+Here are some examples of images from the COCO8 dataset, along with their corresponding annotations:

+

+ +

+- **Mosaiced Image**: This image demonstrates a training batch composed of mosaiced dataset images. Mosaicing is a technique used during training that combines multiple images into a single image to increase the variety of objects and scenes within each training batch. This helps improve the model's ability to generalize to different object sizes, aspect ratios, and contexts.

+

+The example showcases the variety and complexity of the images in the COCO8 dataset and the benefits of using mosaicing during the training process.

+

+## Citations and Acknowledgments

+

+If you use the COCO dataset in your research or development work, please cite the following paper:

+

+```bibtex

+@misc{lin2015microsoft,

+ title={Microsoft COCO: Common Objects in Context},

+ author={Tsung-Yi Lin and Michael Maire and Serge Belongie and Lubomir Bourdev and Ross Girshick and James Hays and Pietro Perona and Deva Ramanan and C. Lawrence Zitnick and Piotr Dollár},

+ year={2015},

+ eprint={1405.0312},

+ archivePrefix={arXiv},

+ primaryClass={cs.CV}

+}

+```

+

+We would like to acknowledge the COCO Consortium for creating and maintaining this valuable resource for the computer vision community. For more information about the COCO dataset and its creators, visit the [COCO dataset website](https://cocodataset.org/#home).

\ No newline at end of file

diff --git a/docs/datasets/detect/voc.md b/docs/datasets/detect/voc.md

index 19480d2..e5850cf 100644

--- a/docs/datasets/detect/voc.md

+++ b/docs/datasets/detect/voc.md

@@ -1,7 +1,90 @@

---

comments: true

+description: Learn about the VOC dataset, designed to encourage research on object detection, segmentation, and classification with standardized evaluation metrics.

---

-# 🚧 Page Under Construction ⚒

+# VOC Dataset

-This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

+The [PASCAL VOC](http://host.robots.ox.ac.uk/pascal/VOC/) (Visual Object Classes) dataset is a well-known object detection, segmentation, and classification dataset. It is designed to encourage research on a wide variety of object categories and is commonly used for benchmarking computer vision models. It is an essential dataset for researchers and developers working on object detection, segmentation, and classification tasks.

+

+## Key Features

+

+- VOC dataset includes two main challenges: VOC2007 and VOC2012.

+- The dataset comprises 20 object categories, including common objects like cars, bicycles, and animals, as well as more specific categories such as boats, sofas, and dining tables.

+- Annotations include object bounding boxes and class labels for object detection and classification tasks, and segmentation masks for the segmentation tasks.

+- VOC provides standardized evaluation metrics like mean Average Precision (mAP) for object detection and classification, making it suitable for comparing model performance.

+

+## Dataset Structure

+

+The VOC dataset is split into three subsets:

+

+1. **Train**: This subset contains images for training object detection, segmentation, and classification models.

+2. **Validation**: This subset has images used for validation purposes during model training.

+3. **Test**: This subset consists of images used for testing and benchmarking the trained models. Ground truth annotations for this subset are not publicly available, and the results are submitted to the [PASCAL VOC evaluation server](http://host.robots.ox.ac.uk:8080/leaderboard/displaylb.php) for performance evaluation.

+

+## Applications

+

+The VOC dataset is widely used for training and evaluating deep learning models in object detection (such as YOLO, Faster R-CNN, and SSD), instance segmentation (such as Mask R-CNN), and image classification. The dataset's diverse set of object categories, large number of annotated images, and standardized evaluation metrics make it an essential resource for computer vision researchers and practitioners.

+

+## Dataset YAML

+

+A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the VOC dataset, the `VOC.yaml` file should be created and maintained.

+

+!!! example "ultralytics/datasets/VOC.yaml"

+

+ ```yaml

+ --8<-- "ultralytics/datasets/VOC.yaml"

+ ```

+

+## Usage

+

+To train a YOLOv8n model on the VOC dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

+

+!!! example "Train Example"

+

+ === "Python"

+

+ ```python

+ from ultralytics import YOLO

+

+ # Load a model

+ model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+

+ # Train the model

+ model.train(data='VOC.yaml', epochs=100, imgsz=640)

+ ```

+

+ === "CLI"

+

+ ```bash

+ # Start training from

+ a pretrained *.pt model

+ yolo detect train data=VOC.yaml model=yolov8n.pt epochs=100 imgsz=640

+ ```

+

+## Sample Images and Annotations

+

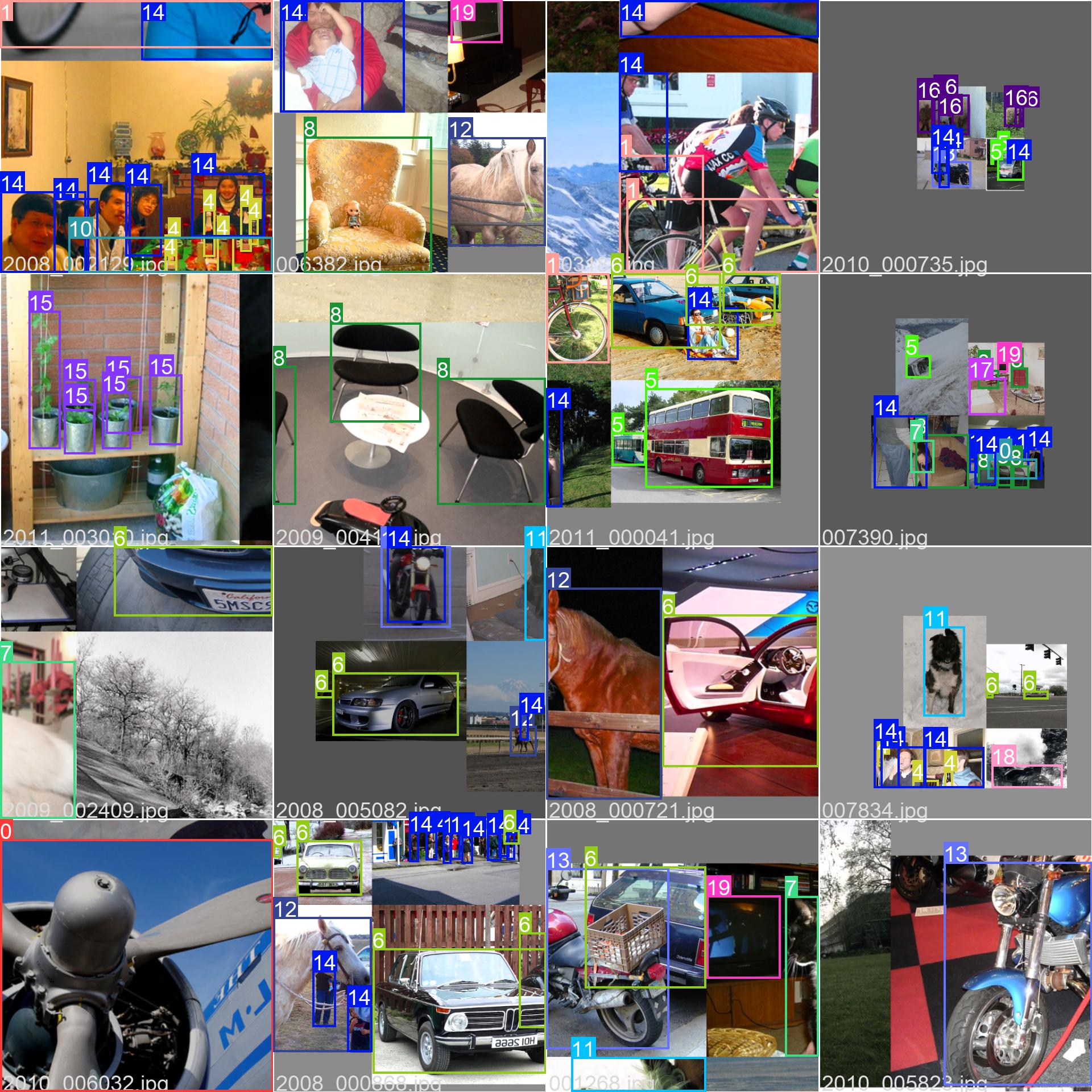

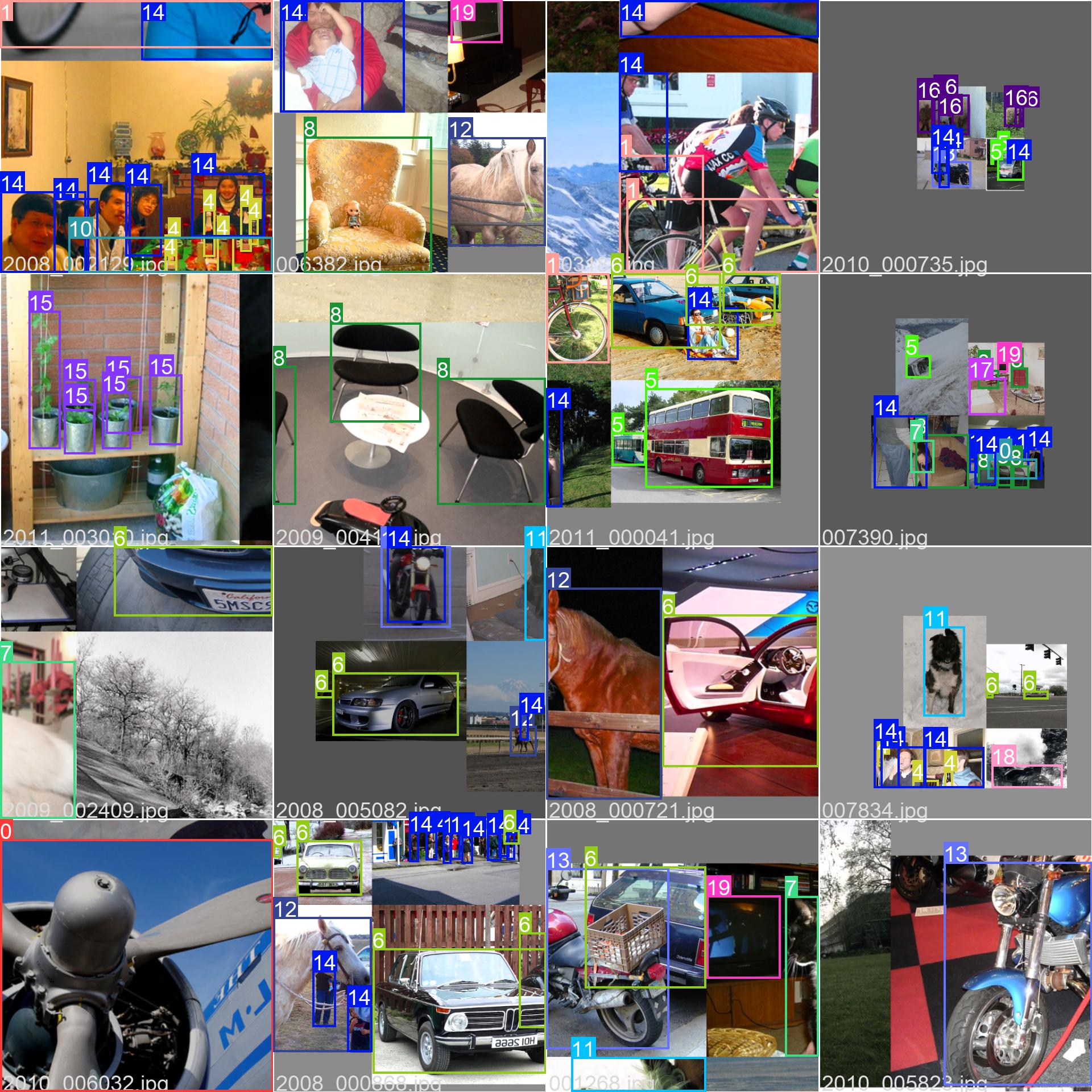

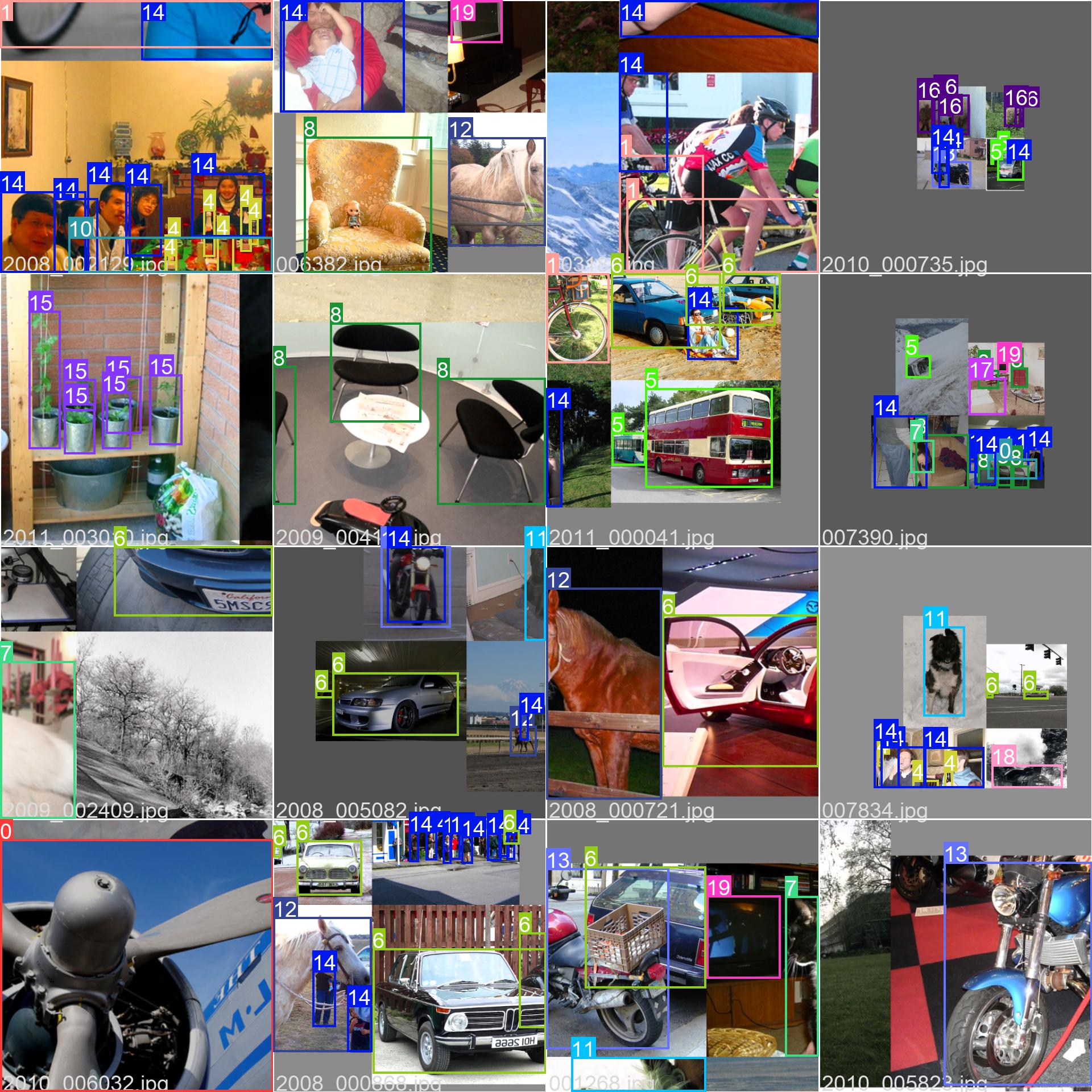

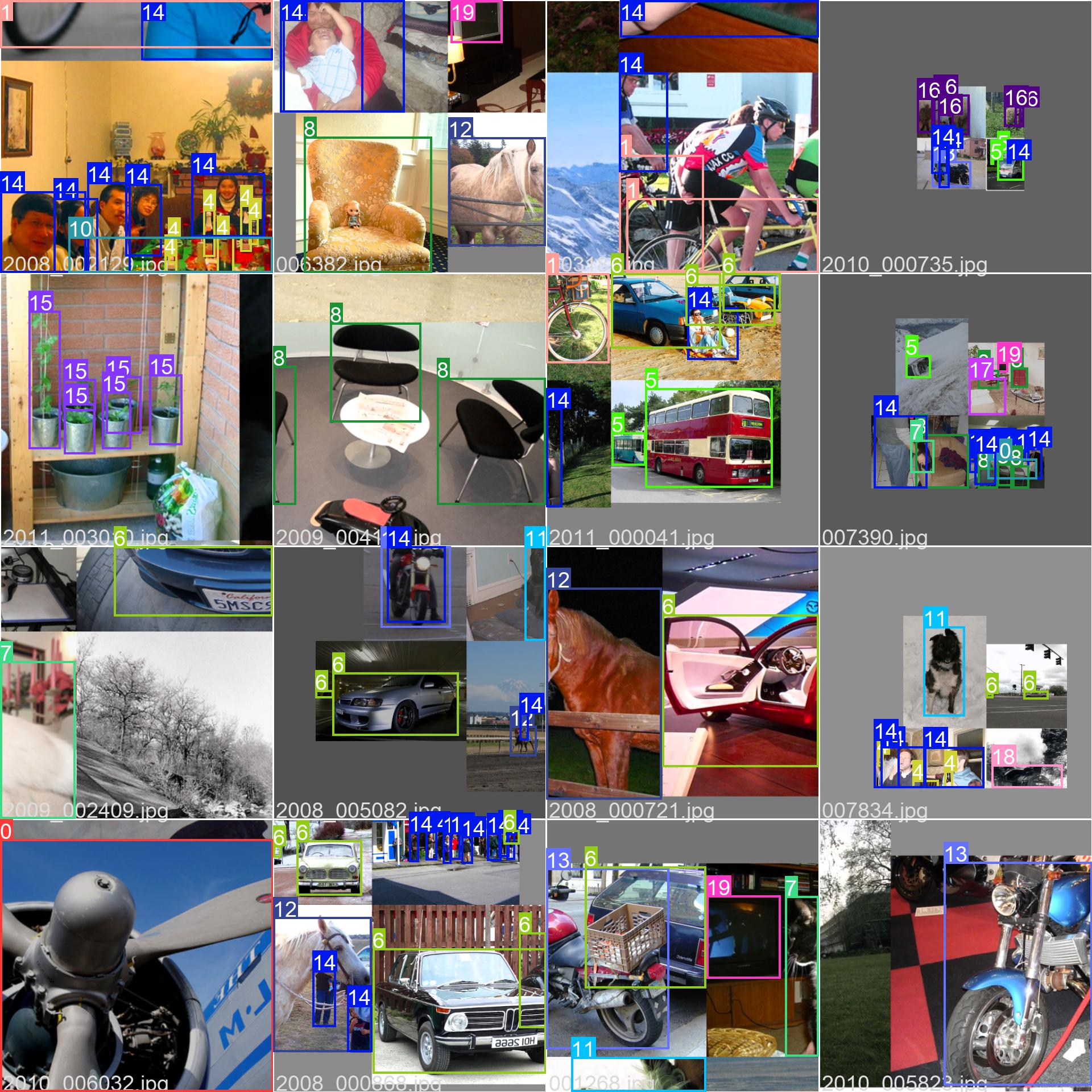

+The VOC dataset contains a diverse set of images with various object categories and complex scenes. Here are some examples of images from the dataset, along with their corresponding annotations:

+

+

+

+- **Mosaiced Image**: This image demonstrates a training batch composed of mosaiced dataset images. Mosaicing is a technique used during training that combines multiple images into a single image to increase the variety of objects and scenes within each training batch. This helps improve the model's ability to generalize to different object sizes, aspect ratios, and contexts.

+

+The example showcases the variety and complexity of the images in the VOC dataset and the benefits of using mosaicing during the training process.

+

+## Citations and Acknowledgments

+

+If you use the VOC dataset in your research or development work, please cite the following paper:

+

+```bibtex

+@misc{everingham2010pascal,

+ title={The PASCAL Visual Object Classes (VOC) Challenge},

+ author={Mark Everingham and Luc Van Gool and Christopher K. I. Williams and John Winn and Andrew Zisserman},

+ year={2010},

+ eprint={0909.5206},

+ archivePrefix={arXiv},

+ primaryClass={cs.CV}

+}

+```

+

+We would like to acknowledge the PASCAL VOC Consortium for creating and maintaining this valuable resource for the computer vision community. For more information about the VOC dataset and its creators, visit the [PASCAL VOC dataset website](http://host.robots.ox.ac.uk/pascal/VOC/).

\ No newline at end of file

diff --git a/docs/datasets/pose/coco8-pose.md b/docs/datasets/pose/coco8-pose.md

index 19480d2..cff6067 100644

--- a/docs/datasets/pose/coco8-pose.md

+++ b/docs/datasets/pose/coco8-pose.md

@@ -1,7 +1,79 @@

---

comments: true

+description: Test and debug object detection models with Ultralytics COCO8-Pose Dataset - a small, versatile pose detection dataset with 8 images.

---

-# 🚧 Page Under Construction ⚒

+# COCO8-Pose Dataset

-This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

+## Introduction

+

+[Ultralytics](https://ultralytics.com) COCO8-Pose is a small, but versatile pose detection dataset composed of the first

+8 images of the COCO train 2017 set, 4 for training and 4 for validation. This dataset is ideal for testing and

+debugging object detection models, or for experimenting with new detection approaches. With 8 images, it is small enough

+to be easily manageable, yet diverse enough to test training pipelines for errors and act as a sanity check before

+training larger datasets.

+

+This dataset is intended for use with Ultralytics [HUB](https://hub.ultralytics.com)

+and [YOLOv8](https://github.com/ultralytics/ultralytics).

+

+## Dataset YAML

+

+A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the COCO8-Pose dataset, the `coco8-pose.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco8-pose.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco8-pose.yaml).

+

+!!! example "ultralytics/datasets/coco8-pose.yaml"

+

+ ```yaml

+ --8<-- "ultralytics/datasets/coco8-pose.yaml"

+ ```

+

+## Usage

+

+To train a YOLOv8n model on the COCO8-Pose dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

+

+!!! example "Train Example"

+

+ === "Python"

+

+ ```python

+ from ultralytics import YOLO

+

+ # Load a model

+ model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+

+ # Train the model

+ model.train(data='coco8-pose.yaml', epochs=100, imgsz=640)

+ ```

+

+ === "CLI"

+

+ ```bash

+ # Start training from a pretrained *.pt model

+ yolo detect train data=coco8-pose.yaml model=yolov8n.pt epochs=100 imgsz=640

+ ```

+

+## Sample Images and Annotations

+

+Here are some examples of images from the COCO8-Pose dataset, along with their corresponding annotations:

+

+

+

+- **Mosaiced Image**: This image demonstrates a training batch composed of mosaiced dataset images. Mosaicing is a technique used during training that combines multiple images into a single image to increase the variety of objects and scenes within each training batch. This helps improve the model's ability to generalize to different object sizes, aspect ratios, and contexts.

+

+The example showcases the variety and complexity of the images in the COCO8 dataset and the benefits of using mosaicing during the training process.

+

+## Citations and Acknowledgments

+

+If you use the COCO dataset in your research or development work, please cite the following paper:

+

+```bibtex

+@misc{lin2015microsoft,

+ title={Microsoft COCO: Common Objects in Context},

+ author={Tsung-Yi Lin and Michael Maire and Serge Belongie and Lubomir Bourdev and Ross Girshick and James Hays and Pietro Perona and Deva Ramanan and C. Lawrence Zitnick and Piotr Dollár},

+ year={2015},

+ eprint={1405.0312},

+ archivePrefix={arXiv},

+ primaryClass={cs.CV}

+}

+```

+

+We would like to acknowledge the COCO Consortium for creating and maintaining this valuable resource for the computer vision community. For more information about the COCO dataset and its creators, visit the [COCO dataset website](https://cocodataset.org/#home).

\ No newline at end of file

diff --git a/docs/datasets/detect/voc.md b/docs/datasets/detect/voc.md

index 19480d2..e5850cf 100644

--- a/docs/datasets/detect/voc.md

+++ b/docs/datasets/detect/voc.md

@@ -1,7 +1,90 @@

---

comments: true

+description: Learn about the VOC dataset, designed to encourage research on object detection, segmentation, and classification with standardized evaluation metrics.

---

-# 🚧 Page Under Construction ⚒

+# VOC Dataset

-This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

+The [PASCAL VOC](http://host.robots.ox.ac.uk/pascal/VOC/) (Visual Object Classes) dataset is a well-known object detection, segmentation, and classification dataset. It is designed to encourage research on a wide variety of object categories and is commonly used for benchmarking computer vision models. It is an essential dataset for researchers and developers working on object detection, segmentation, and classification tasks.

+

+## Key Features

+

+- VOC dataset includes two main challenges: VOC2007 and VOC2012.

+- The dataset comprises 20 object categories, including common objects like cars, bicycles, and animals, as well as more specific categories such as boats, sofas, and dining tables.

+- Annotations include object bounding boxes and class labels for object detection and classification tasks, and segmentation masks for the segmentation tasks.

+- VOC provides standardized evaluation metrics like mean Average Precision (mAP) for object detection and classification, making it suitable for comparing model performance.

+

+## Dataset Structure

+

+The VOC dataset is split into three subsets:

+

+1. **Train**: This subset contains images for training object detection, segmentation, and classification models.

+2. **Validation**: This subset has images used for validation purposes during model training.

+3. **Test**: This subset consists of images used for testing and benchmarking the trained models. Ground truth annotations for this subset are not publicly available, and the results are submitted to the [PASCAL VOC evaluation server](http://host.robots.ox.ac.uk:8080/leaderboard/displaylb.php) for performance evaluation.

+

+## Applications

+

+The VOC dataset is widely used for training and evaluating deep learning models in object detection (such as YOLO, Faster R-CNN, and SSD), instance segmentation (such as Mask R-CNN), and image classification. The dataset's diverse set of object categories, large number of annotated images, and standardized evaluation metrics make it an essential resource for computer vision researchers and practitioners.

+

+## Dataset YAML

+

+A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the VOC dataset, the `VOC.yaml` file should be created and maintained.

+

+!!! example "ultralytics/datasets/VOC.yaml"

+

+ ```yaml

+ --8<-- "ultralytics/datasets/VOC.yaml"

+ ```

+

+## Usage

+

+To train a YOLOv8n model on the VOC dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

+

+!!! example "Train Example"

+

+ === "Python"

+

+ ```python

+ from ultralytics import YOLO

+

+ # Load a model

+ model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+

+ # Train the model

+ model.train(data='VOC.yaml', epochs=100, imgsz=640)

+ ```

+

+ === "CLI"

+

+ ```bash

+ # Start training from

+ a pretrained *.pt model

+ yolo detect train data=VOC.yaml model=yolov8n.pt epochs=100 imgsz=640

+ ```

+

+## Sample Images and Annotations

+

+The VOC dataset contains a diverse set of images with various object categories and complex scenes. Here are some examples of images from the dataset, along with their corresponding annotations:

+

+

+

+- **Mosaiced Image**: This image demonstrates a training batch composed of mosaiced dataset images. Mosaicing is a technique used during training that combines multiple images into a single image to increase the variety of objects and scenes within each training batch. This helps improve the model's ability to generalize to different object sizes, aspect ratios, and contexts.

+

+The example showcases the variety and complexity of the images in the VOC dataset and the benefits of using mosaicing during the training process.

+

+## Citations and Acknowledgments

+

+If you use the VOC dataset in your research or development work, please cite the following paper:

+

+```bibtex

+@misc{everingham2010pascal,

+ title={The PASCAL Visual Object Classes (VOC) Challenge},

+ author={Mark Everingham and Luc Van Gool and Christopher K. I. Williams and John Winn and Andrew Zisserman},

+ year={2010},

+ eprint={0909.5206},

+ archivePrefix={arXiv},

+ primaryClass={cs.CV}

+}

+```

+

+We would like to acknowledge the PASCAL VOC Consortium for creating and maintaining this valuable resource for the computer vision community. For more information about the VOC dataset and its creators, visit the [PASCAL VOC dataset website](http://host.robots.ox.ac.uk/pascal/VOC/).

\ No newline at end of file

diff --git a/docs/datasets/pose/coco8-pose.md b/docs/datasets/pose/coco8-pose.md

index 19480d2..cff6067 100644

--- a/docs/datasets/pose/coco8-pose.md

+++ b/docs/datasets/pose/coco8-pose.md

@@ -1,7 +1,79 @@

---

comments: true

+description: Test and debug object detection models with Ultralytics COCO8-Pose Dataset - a small, versatile pose detection dataset with 8 images.

---

-# 🚧 Page Under Construction ⚒

+# COCO8-Pose Dataset

-This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

+## Introduction

+

+[Ultralytics](https://ultralytics.com) COCO8-Pose is a small, but versatile pose detection dataset composed of the first

+8 images of the COCO train 2017 set, 4 for training and 4 for validation. This dataset is ideal for testing and

+debugging object detection models, or for experimenting with new detection approaches. With 8 images, it is small enough

+to be easily manageable, yet diverse enough to test training pipelines for errors and act as a sanity check before

+training larger datasets.

+

+This dataset is intended for use with Ultralytics [HUB](https://hub.ultralytics.com)

+and [YOLOv8](https://github.com/ultralytics/ultralytics).

+

+## Dataset YAML

+

+A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the COCO8-Pose dataset, the `coco8-pose.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco8-pose.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco8-pose.yaml).

+

+!!! example "ultralytics/datasets/coco8-pose.yaml"

+

+ ```yaml

+ --8<-- "ultralytics/datasets/coco8-pose.yaml"

+ ```

+

+## Usage

+

+To train a YOLOv8n model on the COCO8-Pose dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

+

+!!! example "Train Example"

+

+ === "Python"

+

+ ```python

+ from ultralytics import YOLO

+

+ # Load a model

+ model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+

+ # Train the model

+ model.train(data='coco8-pose.yaml', epochs=100, imgsz=640)

+ ```

+

+ === "CLI"

+

+ ```bash

+ # Start training from a pretrained *.pt model

+ yolo detect train data=coco8-pose.yaml model=yolov8n.pt epochs=100 imgsz=640

+ ```

+

+## Sample Images and Annotations

+

+Here are some examples of images from the COCO8-Pose dataset, along with their corresponding annotations:

+

+ +

+- **Mosaiced Image**: This image demonstrates a training batch composed of mosaiced dataset images. Mosaicing is a technique used during training that combines multiple images into a single image to increase the variety of objects and scenes within each training batch. This helps improve the model's ability to generalize to different object sizes, aspect ratios, and contexts.

+

+The example showcases the variety and complexity of the images in the COCO8-Pose dataset and the benefits of using mosaicing during the training process.

+

+## Citations and Acknowledgments

+

+If you use the COCO dataset in your research or development work, please cite the following paper:

+

+```bibtex

+@misc{lin2015microsoft,

+ title={Microsoft COCO: Common Objects in Context},

+ author={Tsung-Yi Lin and Michael Maire and Serge Belongie and Lubomir Bourdev and Ross Girshick and James Hays and Pietro Perona and Deva Ramanan and C. Lawrence Zitnick and Piotr Dollár},

+ year={2015},

+ eprint={1405.0312},

+ archivePrefix={arXiv},

+ primaryClass={cs.CV}

+}

+```

+

+We would like to acknowledge the COCO Consortium for creating and maintaining this valuable resource for the computer vision community. For more information about the COCO dataset and its creators, visit the [COCO dataset website](https://cocodataset.org/#home).

\ No newline at end of file

diff --git a/docs/datasets/segment/coco8-seg.md b/docs/datasets/segment/coco8-seg.md

index 19480d2..2ff85a7 100644

--- a/docs/datasets/segment/coco8-seg.md

+++ b/docs/datasets/segment/coco8-seg.md

@@ -1,7 +1,79 @@

---

comments: true

+description: Test and debug segmentation models on small, versatile COCO8-Seg instance segmentation dataset, now available for use with YOLOv8 and Ultralytics HUB.

---

-# 🚧 Page Under Construction ⚒

+# COCO8-Seg Dataset

-This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

+## Introduction

+

+[Ultralytics](https://ultralytics.com) COCO8-Seg is a small, but versatile instance segmentation dataset composed of the

+first 8 images of the COCO train 2017 set, 4 for training and 4 for validation. This dataset is ideal for testing and

+debugging segmentation models, or for experimenting with new detection approaches. With 8 images, it is small enough to

+be easily manageable, yet diverse enough to test training pipelines for errors and act as a sanity check before training

+larger datasets.

+

+This dataset is intended for use with Ultralytics [HUB](https://hub.ultralytics.com)

+and [YOLOv8](https://github.com/ultralytics/ultralytics).

+

+## Dataset YAML

+

+A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the COCO8-Seg dataset, the `coco8-seg.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco8-seg.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco8-seg.yaml).

+

+!!! example "ultralytics/datasets/coco8-seg.yaml"

+

+ ```yaml

+ --8<-- "ultralytics/datasets/coco8-seg.yaml"

+ ```

+

+## Usage

+

+To train a YOLOv8n model on the COCO8-Seg dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

+

+!!! example "Train Example"

+

+ === "Python"

+

+ ```python

+ from ultralytics import YOLO

+

+ # Load a model

+ model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+

+ # Train the model

+ model.train(data='coco8-seg.yaml', epochs=100, imgsz=640)

+ ```

+

+ === "CLI"

+

+ ```bash

+ # Start training from a pretrained *.pt model

+ yolo detect train data=coco8-seg.yaml model=yolov8n.pt epochs=100 imgsz=640

+ ```

+

+## Sample Images and Annotations

+

+Here are some examples of images from the COCO8-Seg dataset, along with their corresponding annotations:

+

+

+

+- **Mosaiced Image**: This image demonstrates a training batch composed of mosaiced dataset images. Mosaicing is a technique used during training that combines multiple images into a single image to increase the variety of objects and scenes within each training batch. This helps improve the model's ability to generalize to different object sizes, aspect ratios, and contexts.

+

+The example showcases the variety and complexity of the images in the COCO8-Pose dataset and the benefits of using mosaicing during the training process.

+

+## Citations and Acknowledgments

+

+If you use the COCO dataset in your research or development work, please cite the following paper:

+

+```bibtex

+@misc{lin2015microsoft,

+ title={Microsoft COCO: Common Objects in Context},

+ author={Tsung-Yi Lin and Michael Maire and Serge Belongie and Lubomir Bourdev and Ross Girshick and James Hays and Pietro Perona and Deva Ramanan and C. Lawrence Zitnick and Piotr Dollár},

+ year={2015},

+ eprint={1405.0312},

+ archivePrefix={arXiv},

+ primaryClass={cs.CV}

+}

+```

+

+We would like to acknowledge the COCO Consortium for creating and maintaining this valuable resource for the computer vision community. For more information about the COCO dataset and its creators, visit the [COCO dataset website](https://cocodataset.org/#home).

\ No newline at end of file

diff --git a/docs/datasets/segment/coco8-seg.md b/docs/datasets/segment/coco8-seg.md

index 19480d2..2ff85a7 100644

--- a/docs/datasets/segment/coco8-seg.md

+++ b/docs/datasets/segment/coco8-seg.md

@@ -1,7 +1,79 @@

---

comments: true

+description: Test and debug segmentation models on small, versatile COCO8-Seg instance segmentation dataset, now available for use with YOLOv8 and Ultralytics HUB.

---

-# 🚧 Page Under Construction ⚒

+# COCO8-Seg Dataset

-This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

+## Introduction

+

+[Ultralytics](https://ultralytics.com) COCO8-Seg is a small, but versatile instance segmentation dataset composed of the

+first 8 images of the COCO train 2017 set, 4 for training and 4 for validation. This dataset is ideal for testing and

+debugging segmentation models, or for experimenting with new detection approaches. With 8 images, it is small enough to

+be easily manageable, yet diverse enough to test training pipelines for errors and act as a sanity check before training

+larger datasets.

+

+This dataset is intended for use with Ultralytics [HUB](https://hub.ultralytics.com)

+and [YOLOv8](https://github.com/ultralytics/ultralytics).

+

+## Dataset YAML

+

+A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the COCO8-Seg dataset, the `coco8-seg.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco8-seg.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco8-seg.yaml).

+

+!!! example "ultralytics/datasets/coco8-seg.yaml"

+

+ ```yaml

+ --8<-- "ultralytics/datasets/coco8-seg.yaml"

+ ```

+

+## Usage

+

+To train a YOLOv8n model on the COCO8-Seg dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

+

+!!! example "Train Example"

+

+ === "Python"

+

+ ```python

+ from ultralytics import YOLO

+

+ # Load a model

+ model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+

+ # Train the model

+ model.train(data='coco8-seg.yaml', epochs=100, imgsz=640)

+ ```

+

+ === "CLI"

+

+ ```bash

+ # Start training from a pretrained *.pt model

+ yolo detect train data=coco8-seg.yaml model=yolov8n.pt epochs=100 imgsz=640

+ ```

+

+## Sample Images and Annotations

+

+Here are some examples of images from the COCO8-Seg dataset, along with their corresponding annotations:

+

+ +

+- **Mosaiced Image**: This image demonstrates a training batch composed of mosaiced dataset images. Mosaicing is a technique used during training that combines multiple images into a single image to increase the variety of objects and scenes within each training batch. This helps improve the model's ability to generalize to different object sizes, aspect ratios, and contexts.

+

+The example showcases the variety and complexity of the images in the COCO8-Seg dataset and the benefits of using mosaicing during the training process.

+

+## Citations and Acknowledgments

+

+If you use the COCO dataset in your research or development work, please cite the following paper:

+

+```bibtex

+@misc{lin2015microsoft,

+ title={Microsoft COCO: Common Objects in Context},

+ author={Tsung-Yi Lin and Michael Maire and Serge Belongie and Lubomir Bourdev and Ross Girshick and James Hays and Pietro Perona and Deva Ramanan and C. Lawrence Zitnick and Piotr Dollár},

+ year={2015},

+ eprint={1405.0312},

+ archivePrefix={arXiv},

+ primaryClass={cs.CV}

+}

+```

+

+We would like to acknowledge the COCO Consortium for creating and maintaining this valuable resource for the computer vision community. For more information about the COCO dataset and its creators, visit the [COCO dataset website](https://cocodataset.org/#home).

\ No newline at end of file

diff --git a/docs/models/index.md b/docs/models/index.md

index f594c05..e579b39 100644

--- a/docs/models/index.md

+++ b/docs/models/index.md

@@ -13,6 +13,7 @@ In this documentation, we provide information on four major models:

2. [YOLOv5](./yolov5.md): An improved version of the YOLO architecture, offering better performance and speed tradeoffs compared to previous versions.

3. [YOLOv8](./yolov8.md): The latest version of the YOLO family, featuring enhanced capabilities such as instance segmentation, pose/keypoints estimation, and classification.

4. [Segment Anything Model (SAM)](./sam.md): Meta's Segment Anything Model (SAM).

+5. [Realtime Detection Transformers (RT-DETR)](./rtdetr.md): Baidu's RT-DETR model.

You can use these models directly in the Command Line Interface (CLI) or in a Python environment. Below are examples of how to use the models with CLI and Python:

diff --git a/docs/models/rtdetr.md b/docs/models/rtdetr.md

new file mode 100644

index 0000000..11d44c8

--- /dev/null

+++ b/docs/models/rtdetr.md

@@ -0,0 +1,52 @@

+---

+comments: true

+description: Explore RT-DETR, a high-performance real-time object detector. Learn how to use pre-trained models with Ultralytics Python API for various tasks.

+---

+

+# RT-DETR

+

+## Overview

+

+Real-Time Detection Transformer (RT-DETR) is an end-to-end object detector that provides real-time performance while maintaining high accuracy. It efficiently processes multi-scale features by decoupling intra-scale interaction and cross-scale fusion, and supports flexible adjustment of inference speed using different decoder layers without retraining. RT-DETR outperforms many real-time object detectors on accelerated backends like CUDA with TensorRT.

+

+### Key Features

+

+- **Efficient Hybrid Encoder:** RT-DETR uses an efficient hybrid encoder that processes multi-scale features by decoupling intra-scale interaction and cross-scale fusion. This design reduces computational costs and allows for real-time object detection.

+- **IoU-aware Query Selection:** RT-DETR improves object query initialization by utilizing IoU-aware query selection. This allows the model to focus on the most relevant objects in the scene.

+- **Adaptable Inference Speed:** RT-DETR supports flexible adjustments of inference speed by using different decoder layers without the need for retraining. This adaptability facilitates practical application in various real-time object detection scenarios.

+

+## Pre-trained Models

+

+Ultralytics RT-DETR provides several pre-trained models with different scales:

+

+- RT-DETR-L: 53.0% AP on COCO val2017, 114 FPS on T4 GPU

+- RT-DETR-X: 54.8% AP on COCO val2017, 74 FPS on T4 GPU

+

+## Usage

+

+### Python API

+

+```python

+from ultralytics import RTDETR

+

+model = RTDETR("rtdetr-l.pt")

+model.info() # display model information

+model.predict("path/to/image.jpg") # predict

+```

+

+### Supported Tasks

+

+| Model Type | Pre-trained Weights | Tasks Supported |

+|---------------------|---------------------|------------------|

+| RT-DETR Large | `rtdetr-l.pt` | Object Detection |

+| RT-DETR Extra-Large | `rtdetr-x.pt` | Object Detection |

+

+### Supported Modes

+

+| Mode | Supported |

+|------------|--------------------|

+| Inference | :heavy_check_mark: |

+| Validation | :heavy_check_mark: |

+| Training | :x: (Coming soon) |

+

+For more information about the RT-DETR model, please refer to the [original paper](https://arxiv.org/abs/2304.08069) and the [PaddleDetection repository](https://github.com/PaddlePaddle/PaddleDetection).

\ No newline at end of file

diff --git a/docs/models/sam.md b/docs/models/sam.md

index 9f22963..d71ee96 100644

--- a/docs/models/sam.md

+++ b/docs/models/sam.md

@@ -1,26 +1,37 @@

---

comments: true

-description: Learn about the Vision Transformer (ViT) and segment anything with SAM models. Train and use pre-trained models with Python API.

+description: Learn about the Segment Anything Model (SAM) and how it provides promptable image segmentation through an advanced architecture and the SA-1B dataset.

---

-# Vision Transformers

+# Segment Anything Model (SAM)

-Vit models currently support Python environment:

+## Overview

+

+The Segment Anything Model (SAM) is a groundbreaking image segmentation model that enables promptable segmentation with real-time performance. It forms the foundation for the Segment Anything project, which introduces a new task, model, and dataset for image segmentation. SAM is designed to be promptable, allowing it to transfer zero-shot to new image distributions and tasks. The model is trained on the [SA-1B dataset](https://ai.facebook.com/datasets/segment-anything/), which contains over 1 billion masks on 11 million licensed and privacy-respecting images. SAM has demonstrated impressive zero-shot performance, often surpassing prior fully supervised results.

+

+

+

+## Key Features

+

+- **Promptable Segmentation Task:** SAM is designed for a promptable segmentation task, enabling it to return a valid segmentation mask given any segmentation prompt, such as spatial or text information identifying an object.

+- **Advanced Architecture:** SAM utilizes a powerful image encoder, a prompt encoder, and a lightweight mask decoder. This architecture enables flexible prompting, real-time mask computation, and ambiguity awareness in segmentation.

+- **SA-1B Dataset:** The Segment Anything project introduces the SA-1B dataset, which contains over 1 billion masks on 11 million images. This dataset is the largest segmentation dataset to date, providing SAM with a diverse and large-scale source of data for training.

+- **Zero-Shot Performance:** SAM demonstrates remarkable zero-shot performance across a range of segmentation tasks, allowing it to be used out-of-the-box with prompt engineering for various applications.

+

+For more information about the Segment Anything Model and the SA-1B dataset, please refer to the [Segment Anything website](https://segment-anything.com) and the research paper [Segment Anything](https://arxiv.org/abs/2304.02643).

+

+## Usage

+

+SAM can be used for a variety of downstream tasks involving object and image distributions beyond its training data. Examples include edge detection, object proposal generation, instance segmentation, and preliminary text-to-mask prediction. By employing prompt engineering, SAM can adapt to new tasks and data distributions in a zero-shot manner, making it a versatile and powerful tool for image segmentation tasks.

```python

from ultralytics.vit import SAM

-# from ultralytics.vit import MODEL_TYPE

-

-model = SAM("sam_b.pt")

+model = SAM('sam_b.pt')

model.info() # display model information

-model.predict(...) # predict

+model.predict('path/to/image.jpg') # predict

```

-# Segment Anything

-

-## About

-

## Supported Tasks

| Model Type | Pre-trained Weights | Tasks Supported |

@@ -34,4 +45,21 @@ model.predict(...) # predict

|------------|--------------------|

| Inference | :heavy_check_mark: |

| Validation | :x: |

-| Training | :x: |

\ No newline at end of file

+| Training | :x: |

+

+# Citations and Acknowledgements

+

+If you use SAM in your research or development work, please cite the following paper:

+

+```bibtex

+@misc{kirillov2023segment,

+ title={Segment Anything},

+ author={Alexander Kirillov and Eric Mintun and Nikhila Ravi and Hanzi Mao and Chloe Rolland and Laura Gustafson and Tete Xiao and Spencer Whitehead and Alexander C. Berg and Wan-Yen Lo and Piotr Dollár and Ross Girshick},

+ year={2023},

+ eprint={2304.02643},

+ archivePrefix={arXiv},

+ primaryClass={cs.CV}

+}

+```

+

+We would like to acknowledge Meta AI for creating and maintaining this valuable resource for the computer vision community.

\ No newline at end of file

diff --git a/docs/models/yolov5.md b/docs/models/yolov5.md

index ae8b866..00a0a4b 100644

--- a/docs/models/yolov5.md

+++ b/docs/models/yolov5.md

@@ -5,9 +5,15 @@ description: Detect objects faster and more accurately using Ultralytics YOLOv5u

# YOLOv5u

-## About

+## Overview

-Anchor-free YOLOv5 models with improved accuracy-speed tradeoff.

+YOLOv5u is an updated version of YOLOv5 that incorporates the anchor-free split Ultralytics head used in the YOLOv8 models. It retains the same backbone and neck architecture as YOLOv5 but offers improved accuracy-speed tradeoff for object detection tasks.

+

+## Key Features

+

+- **Anchor-free Split Ultralytics Head:** YOLOv5u replaces the traditional anchor-based detection head with an anchor-free split Ultralytics head, resulting in improved performance.

+- **Optimized Accuracy-Speed Tradeoff:** The updated model offers a better balance between accuracy and speed, making it more suitable for a wider range of applications.

+- **Variety of Pre-trained Models:** YOLOv5u offers a range of pre-trained models tailored for various tasks, including Inference, Validation, and Training.

## Supported Tasks

diff --git a/docs/models/yolov8.md b/docs/models/yolov8.md

index d14d6fb..53df59c 100644

--- a/docs/models/yolov8.md

+++ b/docs/models/yolov8.md

@@ -5,7 +5,16 @@ description: Learn about YOLOv8's pre-trained weights supporting detection, inst

# YOLOv8

-## About

+## Overview

+

+YOLOv8 is the latest iteration in the YOLO series of real-time object detectors, offering cutting-edge performance in terms of accuracy and speed. Building upon the advancements of previous YOLO versions, YOLOv8 introduces new features and optimizations that make it an ideal choice for various object detection tasks in a wide range of applications.

+

+## Key Features

+

+- **Advanced Backbone and Neck Architectures:** YOLOv8 employs state-of-the-art backbone and neck architectures, resulting in improved feature extraction and object detection performance.

+- **Anchor-free Split Ultralytics Head:** YOLOv8 adopts an anchor-free split Ultralytics head, which contributes to better accuracy and a more efficient detection process compared to anchor-based approaches.

+- **Optimized Accuracy-Speed Tradeoff:** With a focus on maintaining an optimal balance between accuracy and speed, YOLOv8 is suitable for real-time object detection tasks in diverse application areas.

+- **Variety of Pre-trained Models:** YOLOv8 offers a range of pre-trained models to cater to various tasks and performance requirements, making it easier to find the right model for your specific use case.

## Supported Tasks

diff --git a/docs/reference/nn/modules/block.md b/docs/reference/nn/modules/block.md

index 0eb5470..32f0394 100644

--- a/docs/reference/nn/modules/block.md

+++ b/docs/reference/nn/modules/block.md

@@ -1,3 +1,7 @@

+---

+description: Explore ultralytics.nn.modules.block to build powerful YOLO object detection models. Master DFL, HGStem, SPP, CSP components and more.

+---

+

# DFL

---

:::ultralytics.nn.modules.block.DFL

@@ -81,4 +85,4 @@

# BottleneckCSP

---

:::ultralytics.nn.modules.block.BottleneckCSP

-

+

+- **Mosaiced Image**: This image demonstrates a training batch composed of mosaiced dataset images. Mosaicing is a technique used during training that combines multiple images into a single image to increase the variety of objects and scenes within each training batch. This helps improve the model's ability to generalize to different object sizes, aspect ratios, and contexts.

+

+The example showcases the variety and complexity of the images in the COCO8-Seg dataset and the benefits of using mosaicing during the training process.

+

+## Citations and Acknowledgments

+

+If you use the COCO dataset in your research or development work, please cite the following paper:

+

+```bibtex

+@misc{lin2015microsoft,

+ title={Microsoft COCO: Common Objects in Context},

+ author={Tsung-Yi Lin and Michael Maire and Serge Belongie and Lubomir Bourdev and Ross Girshick and James Hays and Pietro Perona and Deva Ramanan and C. Lawrence Zitnick and Piotr Dollár},

+ year={2015},

+ eprint={1405.0312},

+ archivePrefix={arXiv},

+ primaryClass={cs.CV}

+}

+```

+

+We would like to acknowledge the COCO Consortium for creating and maintaining this valuable resource for the computer vision community. For more information about the COCO dataset and its creators, visit the [COCO dataset website](https://cocodataset.org/#home).

\ No newline at end of file

diff --git a/docs/models/index.md b/docs/models/index.md

index f594c05..e579b39 100644

--- a/docs/models/index.md

+++ b/docs/models/index.md

@@ -13,6 +13,7 @@ In this documentation, we provide information on four major models:

2. [YOLOv5](./yolov5.md): An improved version of the YOLO architecture, offering better performance and speed tradeoffs compared to previous versions.

3. [YOLOv8](./yolov8.md): The latest version of the YOLO family, featuring enhanced capabilities such as instance segmentation, pose/keypoints estimation, and classification.

4. [Segment Anything Model (SAM)](./sam.md): Meta's Segment Anything Model (SAM).

+5. [Realtime Detection Transformers (RT-DETR)](./rtdetr.md): Baidu's RT-DETR model.

You can use these models directly in the Command Line Interface (CLI) or in a Python environment. Below are examples of how to use the models with CLI and Python:

diff --git a/docs/models/rtdetr.md b/docs/models/rtdetr.md

new file mode 100644

index 0000000..11d44c8

--- /dev/null

+++ b/docs/models/rtdetr.md

@@ -0,0 +1,52 @@

+---

+comments: true

+description: Explore RT-DETR, a high-performance real-time object detector. Learn how to use pre-trained models with Ultralytics Python API for various tasks.

+---

+

+# RT-DETR

+

+## Overview

+

+Real-Time Detection Transformer (RT-DETR) is an end-to-end object detector that provides real-time performance while maintaining high accuracy. It efficiently processes multi-scale features by decoupling intra-scale interaction and cross-scale fusion, and supports flexible adjustment of inference speed using different decoder layers without retraining. RT-DETR outperforms many real-time object detectors on accelerated backends like CUDA with TensorRT.

+

+### Key Features

+

+- **Efficient Hybrid Encoder:** RT-DETR uses an efficient hybrid encoder that processes multi-scale features by decoupling intra-scale interaction and cross-scale fusion. This design reduces computational costs and allows for real-time object detection.

+- **IoU-aware Query Selection:** RT-DETR improves object query initialization by utilizing IoU-aware query selection. This allows the model to focus on the most relevant objects in the scene.

+- **Adaptable Inference Speed:** RT-DETR supports flexible adjustments of inference speed by using different decoder layers without the need for retraining. This adaptability facilitates practical application in various real-time object detection scenarios.

+

+## Pre-trained Models

+

+Ultralytics RT-DETR provides several pre-trained models with different scales:

+

+- RT-DETR-L: 53.0% AP on COCO val2017, 114 FPS on T4 GPU

+- RT-DETR-X: 54.8% AP on COCO val2017, 74 FPS on T4 GPU

+

+## Usage

+

+### Python API

+

+```python

+from ultralytics import RTDETR

+

+model = RTDETR("rtdetr-l.pt")

+model.info() # display model information

+model.predict("path/to/image.jpg") # predict

+```

+

+### Supported Tasks

+

+| Model Type | Pre-trained Weights | Tasks Supported |

+|---------------------|---------------------|------------------|

+| RT-DETR Large | `rtdetr-l.pt` | Object Detection |

+| RT-DETR Extra-Large | `rtdetr-x.pt` | Object Detection |

+

+### Supported Modes

+

+| Mode | Supported |

+|------------|--------------------|

+| Inference | :heavy_check_mark: |

+| Validation | :heavy_check_mark: |

+| Training | :x: (Coming soon) |

+

+For more information about the RT-DETR model, please refer to the [original paper](https://arxiv.org/abs/2304.08069) and the [PaddleDetection repository](https://github.com/PaddlePaddle/PaddleDetection).

\ No newline at end of file

diff --git a/docs/models/sam.md b/docs/models/sam.md

index 9f22963..d71ee96 100644

--- a/docs/models/sam.md

+++ b/docs/models/sam.md

@@ -1,26 +1,37 @@

---

comments: true

-description: Learn about the Vision Transformer (ViT) and segment anything with SAM models. Train and use pre-trained models with Python API.

+description: Learn about the Segment Anything Model (SAM) and how it provides promptable image segmentation through an advanced architecture and the SA-1B dataset.

---

-# Vision Transformers

+# Segment Anything Model (SAM)

-Vit models currently support Python environment:

+## Overview

+

+The Segment Anything Model (SAM) is a groundbreaking image segmentation model that enables promptable segmentation with real-time performance. It forms the foundation for the Segment Anything project, which introduces a new task, model, and dataset for image segmentation. SAM is designed to be promptable, allowing it to transfer zero-shot to new image distributions and tasks. The model is trained on the [SA-1B dataset](https://ai.facebook.com/datasets/segment-anything/), which contains over 1 billion masks on 11 million licensed and privacy-respecting images. SAM has demonstrated impressive zero-shot performance, often surpassing prior fully supervised results.

+

+

+

+## Key Features

+

+- **Promptable Segmentation Task:** SAM is designed for a promptable segmentation task, enabling it to return a valid segmentation mask given any segmentation prompt, such as spatial or text information identifying an object.

+- **Advanced Architecture:** SAM utilizes a powerful image encoder, a prompt encoder, and a lightweight mask decoder. This architecture enables flexible prompting, real-time mask computation, and ambiguity awareness in segmentation.

+- **SA-1B Dataset:** The Segment Anything project introduces the SA-1B dataset, which contains over 1 billion masks on 11 million images. This dataset is the largest segmentation dataset to date, providing SAM with a diverse and large-scale source of data for training.

+- **Zero-Shot Performance:** SAM demonstrates remarkable zero-shot performance across a range of segmentation tasks, allowing it to be used out-of-the-box with prompt engineering for various applications.

+

+For more information about the Segment Anything Model and the SA-1B dataset, please refer to the [Segment Anything website](https://segment-anything.com) and the research paper [Segment Anything](https://arxiv.org/abs/2304.02643).

+

+## Usage

+

+SAM can be used for a variety of downstream tasks involving object and image distributions beyond its training data. Examples include edge detection, object proposal generation, instance segmentation, and preliminary text-to-mask prediction. By employing prompt engineering, SAM can adapt to new tasks and data distributions in a zero-shot manner, making it a versatile and powerful tool for image segmentation tasks.

```python

from ultralytics.vit import SAM

-# from ultralytics.vit import MODEL_TYPE

-

-model = SAM("sam_b.pt")

+model = SAM('sam_b.pt')

model.info() # display model information

-model.predict(...) # predict

+model.predict('path/to/image.jpg') # predict

```

-# Segment Anything

-

-## About

-

## Supported Tasks

| Model Type | Pre-trained Weights | Tasks Supported |

@@ -34,4 +45,21 @@ model.predict(...) # predict

|------------|--------------------|

| Inference | :heavy_check_mark: |

| Validation | :x: |

-| Training | :x: |

\ No newline at end of file

+| Training | :x: |

+

+# Citations and Acknowledgements

+

+If you use SAM in your research or development work, please cite the following paper:

+

+```bibtex

+@misc{kirillov2023segment,

+ title={Segment Anything},

+ author={Alexander Kirillov and Eric Mintun and Nikhila Ravi and Hanzi Mao and Chloe Rolland and Laura Gustafson and Tete Xiao and Spencer Whitehead and Alexander C. Berg and Wan-Yen Lo and Piotr Dollár and Ross Girshick},

+ year={2023},

+ eprint={2304.02643},

+ archivePrefix={arXiv},

+ primaryClass={cs.CV}

+}

+```

+

+We would like to acknowledge Meta AI for creating and maintaining this valuable resource for the computer vision community.

\ No newline at end of file

diff --git a/docs/models/yolov5.md b/docs/models/yolov5.md

index ae8b866..00a0a4b 100644

--- a/docs/models/yolov5.md

+++ b/docs/models/yolov5.md

@@ -5,9 +5,15 @@ description: Detect objects faster and more accurately using Ultralytics YOLOv5u

# YOLOv5u

-## About

+## Overview

-Anchor-free YOLOv5 models with improved accuracy-speed tradeoff.

+YOLOv5u is an updated version of YOLOv5 that incorporates the anchor-free split Ultralytics head used in the YOLOv8 models. It retains the same backbone and neck architecture as YOLOv5 but offers improved accuracy-speed tradeoff for object detection tasks.

+

+## Key Features

+

+- **Anchor-free Split Ultralytics Head:** YOLOv5u replaces the traditional anchor-based detection head with an anchor-free split Ultralytics head, resulting in improved performance.

+- **Optimized Accuracy-Speed Tradeoff:** The updated model offers a better balance between accuracy and speed, making it more suitable for a wider range of applications.

+- **Variety of Pre-trained Models:** YOLOv5u offers a range of pre-trained models tailored for various tasks, including Inference, Validation, and Training.

## Supported Tasks

diff --git a/docs/models/yolov8.md b/docs/models/yolov8.md

index d14d6fb..53df59c 100644

--- a/docs/models/yolov8.md

+++ b/docs/models/yolov8.md

@@ -5,7 +5,16 @@ description: Learn about YOLOv8's pre-trained weights supporting detection, inst

# YOLOv8

-## About

+## Overview

+

+YOLOv8 is the latest iteration in the YOLO series of real-time object detectors, offering cutting-edge performance in terms of accuracy and speed. Building upon the advancements of previous YOLO versions, YOLOv8 introduces new features and optimizations that make it an ideal choice for various object detection tasks in a wide range of applications.

+

+## Key Features

+

+- **Advanced Backbone and Neck Architectures:** YOLOv8 employs state-of-the-art backbone and neck architectures, resulting in improved feature extraction and object detection performance.

+- **Anchor-free Split Ultralytics Head:** YOLOv8 adopts an anchor-free split Ultralytics head, which contributes to better accuracy and a more efficient detection process compared to anchor-based approaches.

+- **Optimized Accuracy-Speed Tradeoff:** With a focus on maintaining an optimal balance between accuracy and speed, YOLOv8 is suitable for real-time object detection tasks in diverse application areas.

+- **Variety of Pre-trained Models:** YOLOv8 offers a range of pre-trained models to cater to various tasks and performance requirements, making it easier to find the right model for your specific use case.

## Supported Tasks

diff --git a/docs/reference/nn/modules/block.md b/docs/reference/nn/modules/block.md

index 0eb5470..32f0394 100644

--- a/docs/reference/nn/modules/block.md

+++ b/docs/reference/nn/modules/block.md

@@ -1,3 +1,7 @@

+---

+description: Explore ultralytics.nn.modules.block to build powerful YOLO object detection models. Master DFL, HGStem, SPP, CSP components and more.

+---

+

# DFL

---

:::ultralytics.nn.modules.block.DFL

@@ -81,4 +85,4 @@

# BottleneckCSP

---

:::ultralytics.nn.modules.block.BottleneckCSP

-

+

\ No newline at end of file

diff --git a/docs/reference/nn/modules/conv.md b/docs/reference/nn/modules/conv.md

index 779dcd8..414fce5 100644

--- a/docs/reference/nn/modules/conv.md

+++ b/docs/reference/nn/modules/conv.md

@@ -1,3 +1,7 @@

+---

+description: Explore convolutional neural network modules & techniques such as LightConv, DWConv, ConvTranspose, GhostConv, CBAM & autopad with Ultralytics Docs.

+---

+

# Conv

---

:::ultralytics.nn.modules.conv.Conv

@@ -61,4 +65,4 @@

# autopad

---

:::ultralytics.nn.modules.conv.autopad

-

+

\ No newline at end of file

diff --git a/docs/reference/nn/modules/head.md b/docs/reference/nn/modules/head.md

index 2515ca8..c6d1be5 100644

--- a/docs/reference/nn/modules/head.md

+++ b/docs/reference/nn/modules/head.md

@@ -1,3 +1,7 @@

+---

+description: 'Learn about Ultralytics YOLO modules: Segment, Classify, and RTDETRDecoder. Optimize object detection and classification in your project.'

+---

+

# Detect

---

:::ultralytics.nn.modules.head.Detect

@@ -21,4 +25,4 @@

# RTDETRDecoder

---

:::ultralytics.nn.modules.head.RTDETRDecoder

-

+

\ No newline at end of file

diff --git a/docs/reference/nn/modules/transformer.md b/docs/reference/nn/modules/transformer.md

index c607b93..1fa0296 100644

--- a/docs/reference/nn/modules/transformer.md

+++ b/docs/reference/nn/modules/transformer.md

@@ -1,3 +1,7 @@

+---

+description: Explore the Ultralytics nn modules pages on Transformer and MLP blocks, LayerNorm2d, and Deformable Transformer Decoder Layer.

+---

+

# TransformerEncoderLayer

---

:::ultralytics.nn.modules.transformer.TransformerEncoderLayer

@@ -46,4 +50,4 @@

# DeformableTransformerDecoder

---

:::ultralytics.nn.modules.transformer.DeformableTransformerDecoder

-

+

\ No newline at end of file

diff --git a/docs/reference/nn/modules/utils.md b/docs/reference/nn/modules/utils.md

index 8ee2577..efc8841 100644

--- a/docs/reference/nn/modules/utils.md

+++ b/docs/reference/nn/modules/utils.md

@@ -1,3 +1,7 @@

+---

+description: 'Learn about Ultralytics NN modules: get_clones, linear_init_, and multi_scale_deformable_attn_pytorch. Code examples and usage tips.'

+---

+

# _get_clones

---

:::ultralytics.nn.modules.utils._get_clones

@@ -21,4 +25,4 @@

# multi_scale_deformable_attn_pytorch

---

:::ultralytics.nn.modules.utils.multi_scale_deformable_attn_pytorch

-

+

\ No newline at end of file

diff --git a/mkdocs.yml b/mkdocs.yml

index 5f50397..f68fafd 100644

--- a/mkdocs.yml

+++ b/mkdocs.yml

@@ -63,19 +63,19 @@ extra:

analytics:

provider: google

property: G-2M5EHKC0BH

- feedback:

- title: Was this page helpful?

- ratings:

- - icon: material/heart

- name: This page was helpful

- data: 1

- note: Thanks for your feedback!

- - icon: material/heart-broken

- name: This page could be improved

- data: 0

- note: >-

- Thanks for your feedback!

- Tell us what we can improve.

+ # feedback:

+ # title: Was this page helpful?

+ # ratings:

+ # - icon: material/heart

+ # name: This page was helpful

+ # data: 1

+ # note: Thanks for your feedback!

+ # - icon: material/heart-broken

+ # name: This page could be improved

+ # data: 0

+ # note: >-

+ # Thanks for your feedback!

+ # Tell us what we can improve.

social:

- icon: fontawesome/brands/github

@@ -164,7 +164,8 @@ nav:

- YOLOv3: models/yolov3.md

- YOLOv5: models/yolov5.md

- YOLOv8: models/yolov8.md

- - Segment Anything Model (SAM): models/sam.md

+ - SAM (Segment Anything Model): models/sam.md

+ - RT-DETR (Realtime Detection Transformer): models/rtdetr.md

- Datasets:

- datasets/index.md

- Detection:

@@ -348,9 +349,6 @@ plugins:

add_image: True

add_share_buttons: True

default_image: https://github.com/ultralytics/ultralytics/assets/26833433/6d09221c-c52a-4234-9a5d-b862e93c6529

- - git-revision-date-localized:

- type: timeago

- enable_creation_date: true

- redirects:

redirect_maps:

callbacks.md: usage/callbacks.md

diff --git a/setup.py b/setup.py

index 6418e08..1bb0f97 100644

--- a/setup.py

+++ b/setup.py

@@ -47,8 +47,7 @@ setup(

'mkdocs-material',

'mkdocstrings[python]',

'mkdocs-redirects', # for 301 redirects

- 'mkdocs-git-revision-date-localized-plugin', # for created/updated dates

- 'mkdocs-ultralytics-plugin', # for meta descriptions and images

+ 'mkdocs-ultralytics-plugin', # for meta descriptions and images, dates and authors

],

'export': ['coremltools>=6.0', 'openvino-dev>=2022.3', 'tensorflowjs'], # automatically installs tensorflow

},

diff --git a/ultralytics/__init__.py b/ultralytics/__init__.py

index 0e1c4da..3a424e9 100644

--- a/ultralytics/__init__.py

+++ b/ultralytics/__init__.py

@@ -1,6 +1,6 @@

# Ultralytics YOLO 🚀, AGPL-3.0 license

-__version__ = '8.0.98'

+__version__ = '8.0.99'

from ultralytics.hub import start

from ultralytics.vit.rtdetr import RTDETR

diff --git a/ultralytics/vit/rtdetr/model.py b/ultralytics/vit/rtdetr/model.py

index fc81b7c..d50c062 100644

--- a/ultralytics/vit/rtdetr/model.py

+++ b/ultralytics/vit/rtdetr/model.py

@@ -10,6 +10,7 @@ from ultralytics.yolo.cfg import get_cfg

from ultralytics.yolo.engine.exporter import Exporter

from ultralytics.yolo.utils import DEFAULT_CFG, DEFAULT_CFG_DICT

from ultralytics.yolo.utils.checks import check_imgsz

+from ultralytics.yolo.utils.torch_utils import model_info

from ...yolo.utils.torch_utils import smart_inference_mode

from .predict import RTDETRPredictor

@@ -84,6 +85,10 @@ class RTDETR:

self.metrics = validator.metrics

return validator.metrics

+ def info(self, verbose=True):

+ """Get model info"""

+ return model_info(self.model, verbose=verbose)

+

@smart_inference_mode()

def export(self, **kwargs):

"""

diff --git a/ultralytics/yolo/engine/trainer.py b/ultralytics/yolo/engine/trainer.py

index 557e8be..dcfeb86 100644

--- a/ultralytics/yolo/engine/trainer.py

+++ b/ultralytics/yolo/engine/trainer.py

@@ -190,6 +190,17 @@ class BaseTrainer:

else:

self._do_train(world_size)

+ def _pre_caching_dataset(self):

+ """

+ Caching dataset before training to avoid NCCL timeout.

+ Must be done before DDP initialization.

+ See https://github.com/ultralytics/ultralytics/pull/2549 for details.

+ """

+ if RANK in (-1, 0):

+ LOGGER.info('Pre-caching dataset to avoid NCCL timeout')

+ self.get_dataloader(self.trainset, batch_size=1, rank=RANK, mode='train')

+ self.get_dataloader(self.testset, batch_size=1, rank=-1, mode='val')

+

def _setup_ddp(self, world_size):

"""Initializes and sets the DistributedDataParallel parameters for training."""

torch.cuda.set_device(RANK)

@@ -263,6 +274,7 @@ class BaseTrainer:

def _do_train(self, world_size=1):

"""Train completed, evaluate and plot if specified by arguments."""

if world_size > 1:

+ self._pre_caching_dataset()

self._setup_ddp(world_size)

self._setup_train(world_size)

@@ -549,10 +561,15 @@ class BaseTrainer:

resume = self.args.resume

if resume:

try:

- last = Path(

- check_file(resume) if isinstance(resume, (str,

- Path)) and Path(resume).exists() else get_latest_run())

- self.args = get_cfg(attempt_load_weights(last).args)

+ exists = isinstance(resume, (str, Path)) and Path(resume).exists()

+ last = Path(check_file(resume) if exists else get_latest_run())

+

+ # Check that resume data YAML exists, otherwise strip to force re-download of dataset

+ ckpt_args = attempt_load_weights(last).args

+ if not Path(ckpt_args['data']).exists():

+ ckpt_args['data'] = self.args.data

+

+ self.args = get_cfg(ckpt_args)

self.args.model, resume = str(last), True # reinstate

except Exception as e:

raise FileNotFoundError('Resume checkpoint not found. Please pass a valid checkpoint to resume from, '

+

+- **Mosaiced Image**: This image demonstrates a training batch composed of mosaiced dataset images. Mosaicing is a technique used during training that combines multiple images into a single image to increase the variety of objects and scenes within each training batch. This helps improve the model's ability to generalize to different object sizes, aspect ratios, and contexts.

+

+The example showcases the variety and complexity of the images in the COCO8 dataset and the benefits of using mosaicing during the training process.

+

+## Citations and Acknowledgments

+

+If you use the COCO dataset in your research or development work, please cite the following paper:

+

+```bibtex

+@misc{lin2015microsoft,

+ title={Microsoft COCO: Common Objects in Context},

+ author={Tsung-Yi Lin and Michael Maire and Serge Belongie and Lubomir Bourdev and Ross Girshick and James Hays and Pietro Perona and Deva Ramanan and C. Lawrence Zitnick and Piotr Dollár},

+ year={2015},

+ eprint={1405.0312},

+ archivePrefix={arXiv},

+ primaryClass={cs.CV}

+}

+```

+

+We would like to acknowledge the COCO Consortium for creating and maintaining this valuable resource for the computer vision community. For more information about the COCO dataset and its creators, visit the [COCO dataset website](https://cocodataset.org/#home).

\ No newline at end of file

diff --git a/docs/datasets/detect/voc.md b/docs/datasets/detect/voc.md

index 19480d2..e5850cf 100644

--- a/docs/datasets/detect/voc.md

+++ b/docs/datasets/detect/voc.md

@@ -1,7 +1,90 @@

---

comments: true

+description: Learn about the VOC dataset, designed to encourage research on object detection, segmentation, and classification with standardized evaluation metrics.

---

-# 🚧 Page Under Construction ⚒

+# VOC Dataset

-This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

+The [PASCAL VOC](http://host.robots.ox.ac.uk/pascal/VOC/) (Visual Object Classes) dataset is a well-known object detection, segmentation, and classification dataset. It is designed to encourage research on a wide variety of object categories and is commonly used for benchmarking computer vision models. It is an essential dataset for researchers and developers working on object detection, segmentation, and classification tasks.

+

+## Key Features

+

+- VOC dataset includes two main challenges: VOC2007 and VOC2012.

+- The dataset comprises 20 object categories, including common objects like cars, bicycles, and animals, as well as more specific categories such as boats, sofas, and dining tables.

+- Annotations include object bounding boxes and class labels for object detection and classification tasks, and segmentation masks for the segmentation tasks.

+- VOC provides standardized evaluation metrics like mean Average Precision (mAP) for object detection and classification, making it suitable for comparing model performance.

+

+## Dataset Structure

+

+The VOC dataset is split into three subsets:

+

+1. **Train**: This subset contains images for training object detection, segmentation, and classification models.

+2. **Validation**: This subset has images used for validation purposes during model training.

+3. **Test**: This subset consists of images used for testing and benchmarking the trained models. Ground truth annotations for this subset are not publicly available, and the results are submitted to the [PASCAL VOC evaluation server](http://host.robots.ox.ac.uk:8080/leaderboard/displaylb.php) for performance evaluation.

+

+## Applications

+

+The VOC dataset is widely used for training and evaluating deep learning models in object detection (such as YOLO, Faster R-CNN, and SSD), instance segmentation (such as Mask R-CNN), and image classification. The dataset's diverse set of object categories, large number of annotated images, and standardized evaluation metrics make it an essential resource for computer vision researchers and practitioners.

+

+## Dataset YAML

+

+A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the VOC dataset, the `VOC.yaml` file should be created and maintained.

+

+!!! example "ultralytics/datasets/VOC.yaml"

+

+ ```yaml

+ --8<-- "ultralytics/datasets/VOC.yaml"

+ ```

+

+## Usage

+

+To train a YOLOv8n model on the VOC dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

+

+!!! example "Train Example"

+

+ === "Python"

+

+ ```python

+ from ultralytics import YOLO

+

+ # Load a model

+ model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+

+ # Train the model

+ model.train(data='VOC.yaml', epochs=100, imgsz=640)

+ ```

+

+ === "CLI"

+

+ ```bash

+ # Start training from

+ a pretrained *.pt model

+ yolo detect train data=VOC.yaml model=yolov8n.pt epochs=100 imgsz=640

+ ```

+

+## Sample Images and Annotations

+

+The VOC dataset contains a diverse set of images with various object categories and complex scenes. Here are some examples of images from the dataset, along with their corresponding annotations:

+

+

+

+- **Mosaiced Image**: This image demonstrates a training batch composed of mosaiced dataset images. Mosaicing is a technique used during training that combines multiple images into a single image to increase the variety of objects and scenes within each training batch. This helps improve the model's ability to generalize to different object sizes, aspect ratios, and contexts.

+

+The example showcases the variety and complexity of the images in the VOC dataset and the benefits of using mosaicing during the training process.

+

+## Citations and Acknowledgments

+

+If you use the VOC dataset in your research or development work, please cite the following paper:

+

+```bibtex

+@misc{everingham2010pascal,

+ title={The PASCAL Visual Object Classes (VOC) Challenge},

+ author={Mark Everingham and Luc Van Gool and Christopher K. I. Williams and John Winn and Andrew Zisserman},

+ year={2010},

+ eprint={0909.5206},

+ archivePrefix={arXiv},

+ primaryClass={cs.CV}

+}

+```

+

+We would like to acknowledge the PASCAL VOC Consortium for creating and maintaining this valuable resource for the computer vision community. For more information about the VOC dataset and its creators, visit the [PASCAL VOC dataset website](http://host.robots.ox.ac.uk/pascal/VOC/).

\ No newline at end of file

diff --git a/docs/datasets/pose/coco8-pose.md b/docs/datasets/pose/coco8-pose.md

index 19480d2..cff6067 100644

--- a/docs/datasets/pose/coco8-pose.md

+++ b/docs/datasets/pose/coco8-pose.md

@@ -1,7 +1,79 @@

---

comments: true

+description: Test and debug object detection models with Ultralytics COCO8-Pose Dataset - a small, versatile pose detection dataset with 8 images.

---

-# 🚧 Page Under Construction ⚒

+# COCO8-Pose Dataset

-This page is currently under construction!️ 👷Please check back later for updates. 😃🔜

+## Introduction

+

+[Ultralytics](https://ultralytics.com) COCO8-Pose is a small, but versatile pose detection dataset composed of the first

+8 images of the COCO train 2017 set, 4 for training and 4 for validation. This dataset is ideal for testing and

+debugging object detection models, or for experimenting with new detection approaches. With 8 images, it is small enough

+to be easily manageable, yet diverse enough to test training pipelines for errors and act as a sanity check before

+training larger datasets.

+

+This dataset is intended for use with Ultralytics [HUB](https://hub.ultralytics.com)

+and [YOLOv8](https://github.com/ultralytics/ultralytics).

+

+## Dataset YAML

+

+A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the COCO8-Pose dataset, the `coco8-pose.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco8-pose.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/datasets/coco8-pose.yaml).

+

+!!! example "ultralytics/datasets/coco8-pose.yaml"

+

+ ```yaml

+ --8<-- "ultralytics/datasets/coco8-pose.yaml"

+ ```

+

+## Usage

+

+To train a YOLOv8n model on the COCO8-Pose dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

+

+!!! example "Train Example"

+

+ === "Python"

+

+ ```python

+ from ultralytics import YOLO

+

+ # Load a model

+ model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+

+ # Train the model

+ model.train(data='coco8-pose.yaml', epochs=100, imgsz=640)

+ ```

+

+ === "CLI"

+

+ ```bash

+ # Start training from a pretrained *.pt model

+ yolo detect train data=coco8-pose.yaml model=yolov8n.pt epochs=100 imgsz=640

+ ```

+

+## Sample Images and Annotations

+

+Here are some examples of images from the COCO8-Pose dataset, along with their corresponding annotations:

+

+

+

+- **Mosaiced Image**: This image demonstrates a training batch composed of mosaiced dataset images. Mosaicing is a technique used during training that combines multiple images into a single image to increase the variety of objects and scenes within each training batch. This helps improve the model's ability to generalize to different object sizes, aspect ratios, and contexts.

+

+The example showcases the variety and complexity of the images in the COCO8 dataset and the benefits of using mosaicing during the training process.

+

+## Citations and Acknowledgments

+

+If you use the COCO dataset in your research or development work, please cite the following paper:

+

+```bibtex

+@misc{lin2015microsoft,

+ title={Microsoft COCO: Common Objects in Context},

+ author={Tsung-Yi Lin and Michael Maire and Serge Belongie and Lubomir Bourdev and Ross Girshick and James Hays and Pietro Perona and Deva Ramanan and C. Lawrence Zitnick and Piotr Dollár},

+ year={2015},

+ eprint={1405.0312},

+ archivePrefix={arXiv},

+ primaryClass={cs.CV}

+}

+```

+