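License

-YOLOv8 is available under two different licenses:

+Ultralytics offers two licensing options to accommodate diverse use cases:

-- **AGPL-3.0 License**: See the [LICENSE](https://github.com/ultralytics/ultralytics/blob/main/LICENSE) file for details.

-- **Enterprise License**: Provides greater flexibility for commercial product development without the open-source requirements of AGPL-3.0. A typical use case is embedding Ultralytics software and AI models in commercial products and applications. Request an Enterprise License at [Ultralytics Licensing](https://ultralytics.com/license).

+- **AGPL-3.0 License**: This [OSI-approved](https://opensource.org/licenses/) open-source license is well suited to students and enthusiasts, and promotes open collaboration and knowledge sharing. See the [LICENSE](https://github.com/ultralytics/ultralytics/blob/main/LICENSE) file for more details.

+- **Enterprise License**: Designed for commercial use, this license permits seamless integration of Ultralytics software and AI models into commercial products and services, bypassing the open-source requirements of AGPL-3.0. If your scenario involves embedding our solutions into a commercial product, contact us through [Ultralytics Licensing](https://ultralytics.com/license).

## Contact

-For YOLOv8 bug reports and feature requests, please visit [GitHub Issues](https://github.com/ultralytics/ultralytics/issues), and join our [Discord](https://discord.gg/2wNGbc6g9X) community for questions and discussions!

+For Ultralytics bug reports and feature requests, please visit [GitHub Issues](https://github.com/ultralytics/ultralytics/issues), and join our [Discord](https://discord.gg/2wNGbc6g9X) community for questions and discussions!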

diff --git a/docs/CNAME b/docs/CNAME

index 773aac8..339382a 100644

--- a/docs/CNAME

+++ b/docs/CNAME

@@ -1 +1 @@

-docs.ultralytics.com

\ No newline at end of file

+docs.ultralytics.com

diff --git a/docs/README.md b/docs/README.md

index df1e554..fcd4540 100644

--- a/docs/README.md

+++ b/docs/README.md

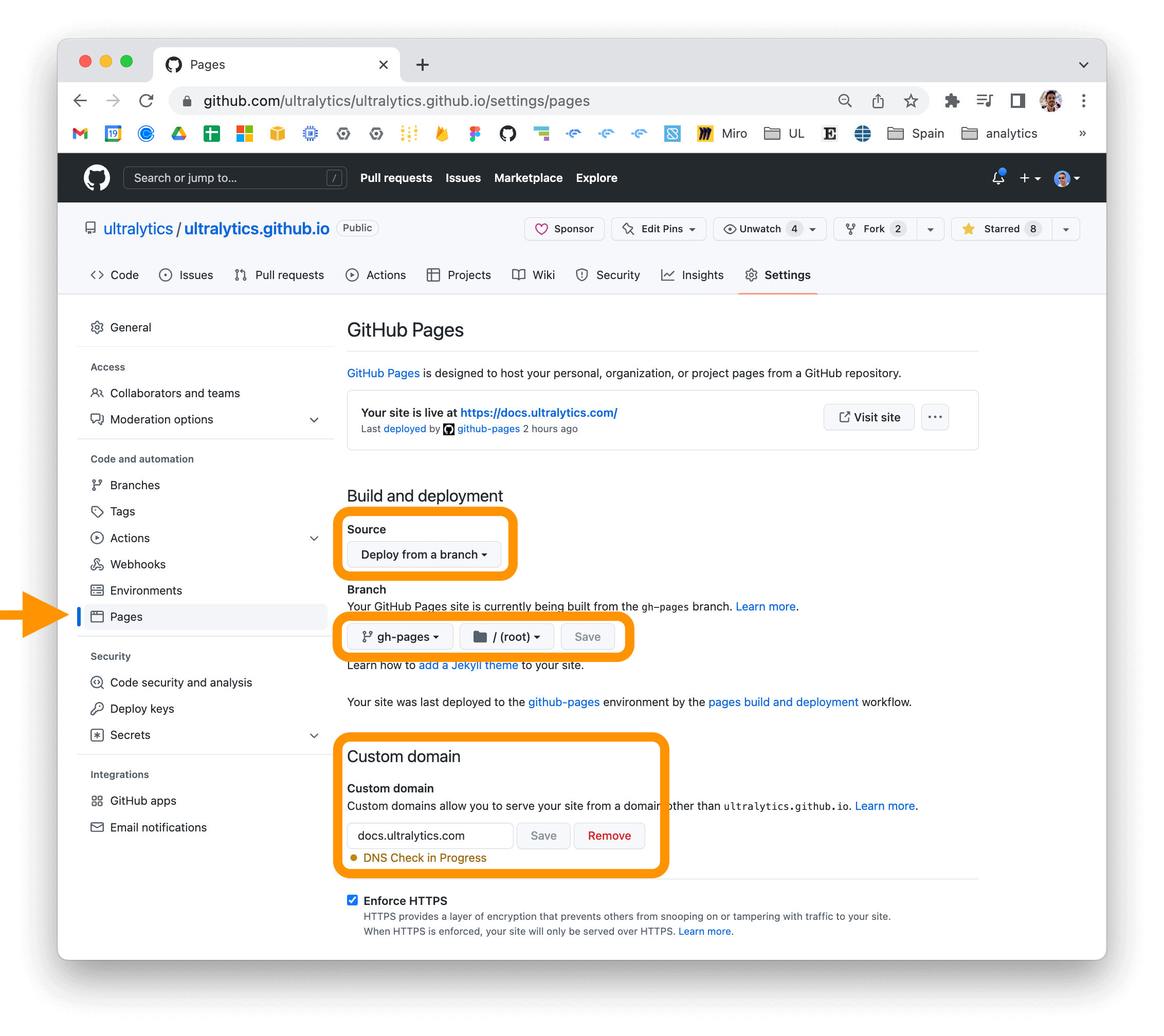

@@ -87,4 +87,4 @@ for your repository and updating the "Custom domain" field in the "GitHub Pages"

For more information on deploying your MkDocs documentation site, see

-the [MkDocs documentation](https://www.mkdocs.org/user-guide/deploying-your-docs/).

\ No newline at end of file

+the [MkDocs documentation](https://www.mkdocs.org/user-guide/deploying-your-docs/).

diff --git a/docs/SECURITY.md b/docs/SECURITY.md

index 1126b84..b7320e3 100644

--- a/docs/SECURITY.md

+++ b/docs/SECURITY.md

@@ -23,4 +23,4 @@ In addition to our Snyk scans, we also use GitHub's [CodeQL](https://docs.github

If you suspect or discover a security vulnerability in any of our repositories, please let us know immediately. You can reach out to us directly via our [contact form](https://ultralytics.com/contact) or via [security@ultralytics.com](mailto:security@ultralytics.com). Our security team will investigate and respond as soon as possible.

-We appreciate your help in keeping all Ultralytics open-source projects secure and safe for everyone.

\ No newline at end of file

+We appreciate your help in keeping all Ultralytics open-source projects secure and safe for everyone.

diff --git a/docs/build_reference.py b/docs/build_reference.py

index f945310..273c5a2 100644

--- a/docs/build_reference.py

+++ b/docs/build_reference.py

@@ -21,8 +21,8 @@ def extract_classes_and_functions(filepath):

     with open(filepath, 'r') as file:

         content = file.read()

-    class_pattern = r"(?:^|\n)class\s(\w+)(?:\(|:)"

-    func_pattern = r"(?:^|\n)def\s(\w+)\("

+    class_pattern = r'(?:^|\n)class\s(\w+)(?:\(|:)'

+    func_pattern = r'(?:^|\n)def\s(\w+)\('

     classes = re.findall(class_pattern, content)

     functions = re.findall(func_pattern, content)
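Reviewer note: the quote-style change in this hunk does not alter the regexes. As a minimal sketch of what the two patterns extract, the snippet below runs them against a made-up source string (`sample` is hypothetical and not part of the PR). Because each pattern requires the keyword to sit at the start of the string or directly after a newline, only unindented, top-level definitions should be captured.

```python
import re

# Patterns copied verbatim from the hunk above.
class_pattern = r'(?:^|\n)class\s(\w+)(?:\(|:)'
func_pattern = r'(?:^|\n)def\s(\w+)\('

# Hypothetical sample module, used only for illustration.
sample = '''class Foo:
    def method(self):
        pass

class Bar(Foo):
    pass

def helper(x):
    return x
'''

classes = re.findall(class_pattern, sample)
functions = re.findall(func_pattern, sample)

print(classes)    # ['Foo', 'Bar']
print(functions)  # ['helper']  (the indented `method` is skipped)
```
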

@@ -34,18 +34,21 @@ def create_markdown(py_filepath, module_path, classes, functions):

     md_filepath = py_filepath.with_suffix('.md')

     # Read existing content and keep header content between first two ---

-    header_content = ""

+    header_content = ''

     if md_filepath.exists():

         with open(md_filepath, 'r') as file:

             existing_content = file.read()

-            header_parts = existing_content.split('---', 2)

-            if 'description:' in header_parts or 'comments:' in header_parts and len(header_parts) >= 3:

-                header_content = f"{header_parts[0]}---{header_parts[1]}---\n\n"

+            header_parts = existing_content.split('---')

+            for part in header_parts:

+                if 'description:' in part or 'comments:' in part:

+                    header_content += f'---{part}---\n\n'

     module_path = module_path.replace('.__init__', '')

-    md_content = [f"## {class_name}\n---\n### ::: {module_path}.{class_name}\n<br><br>\n" for class_name in classes]

-    md_content.extend(f"## {func_name}\n---\n### ::: {module_path}.{func_name}\n<br><br>\n" for func_name in functions)

-    md_content = header_content + "\n".join(md_content)

+    md_content = [f'## {class_name}\n---\n### ::: {module_path}.{class_name}\n<br><br>\n' for class_name in classes]

+    md_content.extend(f'## {func_name}\n---\n### ::: {module_path}.{func_name}\n