diff --git a/docs/guides/kfold-cross-validation.md b/docs/guides/kfold-cross-validation.md

index 4600486..2accea6 100644

--- a/docs/guides/kfold-cross-validation.md

+++ b/docs/guides/kfold-cross-validation.md

@@ -4,12 +4,16 @@ description: An in-depth guide demonstrating the implementation of K-Fold Cross

keywords: K-Fold cross validation, Ultralytics, YOLO detection format, Python, sklearn, object detection

---

-# K-Fold Cross Validation in the Ultralytics Ecosystem

+# K-Fold Cross Validation with Ultralytics

## Introduction

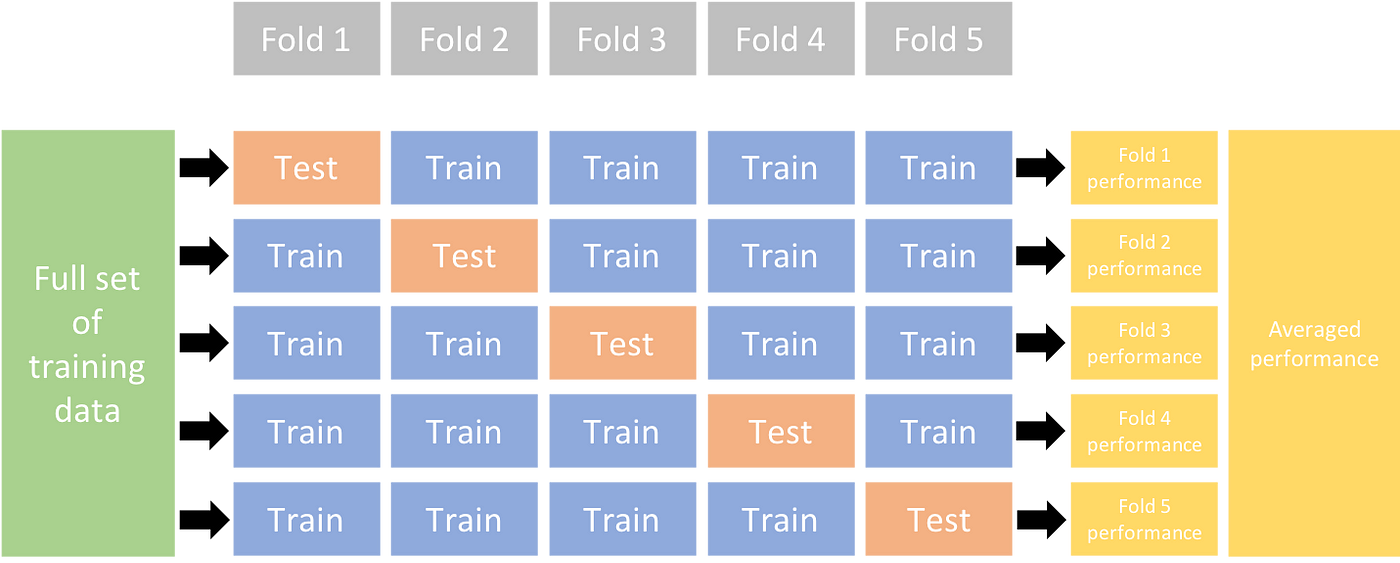

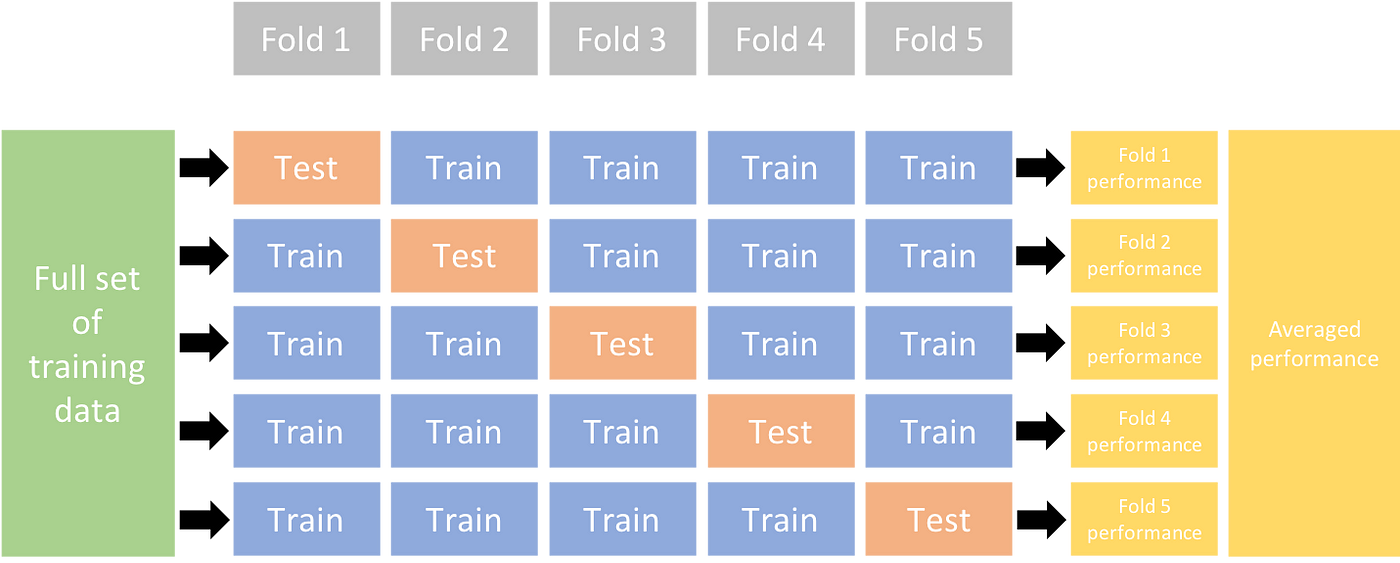

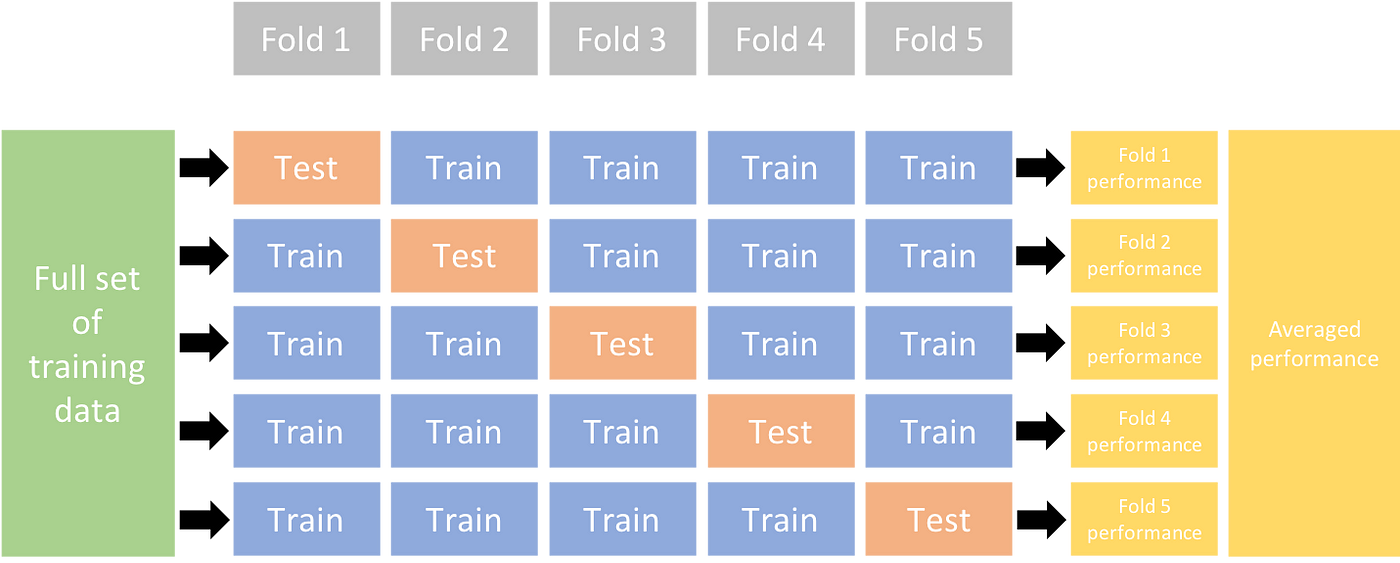

This comprehensive guide illustrates the implementation of K-Fold Cross Validation for object detection datasets within the Ultralytics ecosystem. We'll leverage the YOLO detection format and key Python libraries such as sklearn, pandas, and PyYaml to guide you through the necessary setup, the process of generating feature vectors, and the execution of a K-Fold dataset split.

+

+  +

+

+

Whether your project involves the Fruit Detection dataset or a custom data source, this tutorial aims to help you comprehend and apply K-Fold Cross Validation to bolster the reliability and robustness of your machine learning models. While we're applying `k=5` folds for this tutorial, keep in mind that the optimal number of folds can vary depending on your dataset and the specifics of your project.

Without further ado, let's dive in!

diff --git a/docs/integrations/openvino.md b/docs/integrations/openvino.md

index 9460449..02108de 100644

--- a/docs/integrations/openvino.md

+++ b/docs/integrations/openvino.md

@@ -4,7 +4,7 @@ description: Discover the power of deploying your Ultralytics YOLOv8 model using

keywords: ultralytics docs, YOLOv8, export YOLOv8, YOLOv8 model deployment, exporting YOLOv8, OpenVINO, OpenVINO format

---

- +

+ **Export mode** is used for exporting a YOLOv8 model to a format that can be used for deployment. In this guide, we specifically cover exporting to OpenVINO, which can provide up to 3x [CPU](https://docs.openvino.ai/2023.0/openvino_docs_OV_UG_supported_plugins_CPU.html) speedup as well as accelerating on other Intel hardware ([iGPU](https://docs.openvino.ai/2023.0/openvino_docs_OV_UG_supported_plugins_GPU.html), [dGPU](https://docs.openvino.ai/2023.0/openvino_docs_OV_UG_supported_plugins_GPU.html), [VPU](https://docs.openvino.ai/2022.3/openvino_docs_OV_UG_supported_plugins_VPU.html), etc.).

diff --git a/docs/integrations/ray-tune.md b/docs/integrations/ray-tune.md

index a6b5b78..06f7db9 100644

--- a/docs/integrations/ray-tune.md

+++ b/docs/integrations/ray-tune.md

@@ -14,7 +14,9 @@ Hyperparameter tuning is vital in achieving peak model performance by discoverin

### Ray Tune

-

+

+  +

+

[Ray Tune](https://docs.ray.io/en/latest/tune/index.html) is a hyperparameter tuning library designed for efficiency and flexibility. It supports various search strategies, parallelism, and early stopping strategies, and seamlessly integrates with popular machine learning frameworks, including Ultralytics YOLOv8.

@@ -43,7 +45,10 @@ To install the required packages, run:

```python

from ultralytics import YOLO

+ # Load a YOLOv8n model

model = YOLO("yolov8n.pt")

+

+ # Start tuning hyperparameters for YOLOv8n training on the COCO128 dataset

result_grid = model.tune(data="coco128.yaml")

```

@@ -51,14 +56,14 @@ To install the required packages, run:

The `tune()` method in YOLOv8 provides an easy-to-use interface for hyperparameter tuning with Ray Tune. It accepts several arguments that allow you to customize the tuning process. Below is a detailed explanation of each parameter:

-| Parameter | Type | Description | Default Value |

-|-----------------|----------------|------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|---------------|

-| `data` | str | The dataset configuration file (in YAML format) to run the tuner on. This file should specify the training and validation data paths, as well as other dataset-specific settings. | |

-| `space` | dict, optional | A dictionary defining the hyperparameter search space for Ray Tune. Each key corresponds to a hyperparameter name, and the value specifies the range of values to explore during tuning. If not provided, YOLOv8 uses a default search space with various hyperparameters. | |

-| `grace_period` | int, optional | The grace period in epochs for the [ASHA scheduler](https://docs.ray.io/en/latest/tune/api/schedulers.html) in Ray Tune. The scheduler will not terminate any trial before this number of epochs, allowing the model to have some minimum training before making a decision on early stopping. | 10 |

-| `gpu_per_trial` | int, optional | The number of GPUs to allocate per trial during tuning. This helps manage GPU usage, particularly in multi-GPU environments. If not provided, the tuner will use all available GPUs. | None |

-| `max_samples` | int, optional | The maximum number of trials to run during tuning. This parameter helps control the total number of hyperparameter combinations tested, ensuring the tuning process does not run indefinitely. | 10 |

-| `**train_args` | dict, optional | Additional arguments to pass to the `train()` method during tuning. These arguments can include settings like the number of training epochs, batch size, and other training-specific configurations. | {} |

+| Parameter | Type | Description | Default Value |

+|-----------------|------------------|------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|---------------|

+| `data` | `str` | The dataset configuration file (in YAML format) to run the tuner on. This file should specify the training and validation data paths, as well as other dataset-specific settings. | |

+| `space` | `dict, optional` | A dictionary defining the hyperparameter search space for Ray Tune. Each key corresponds to a hyperparameter name, and the value specifies the range of values to explore during tuning. If not provided, YOLOv8 uses a default search space with various hyperparameters. | |

+| `grace_period` | `int, optional` | The grace period in epochs for the [ASHA scheduler](https://docs.ray.io/en/latest/tune/api/schedulers.html) in Ray Tune. The scheduler will not terminate any trial before this number of epochs, allowing the model to have some minimum training before making a decision on early stopping. | 10 |

+| `gpu_per_trial` | `int, optional` | The number of GPUs to allocate per trial during tuning. This helps manage GPU usage, particularly in multi-GPU environments. If not provided, the tuner will use all available GPUs. | None |

+| `max_samples` | `int, optional` | The maximum number of trials to run during tuning. This parameter helps control the total number of hyperparameter combinations tested, ensuring the tuning process does not run indefinitely. | 10 |

+| `**train_args` | `dict, optional` | Additional arguments to pass to the `train()` method during tuning. These arguments can include settings like the number of training epochs, batch size, and other training-specific configurations. | {} |

By customizing these parameters, you can fine-tune the hyperparameter optimization process to suit your specific needs and available computational resources.

@@ -66,29 +71,29 @@ By customizing these parameters, you can fine-tune the hyperparameter optimizati

The following table lists the default search space parameters for hyperparameter tuning in YOLOv8 with Ray Tune. Each parameter has a specific value range defined by `tune.uniform()`.

-| Parameter | Value Range | Description |

-|-----------------|----------------------------|------------------------------------------|

-| lr0 | `tune.uniform(1e-5, 1e-1)` | Initial learning rate |

-| lrf | `tune.uniform(0.01, 1.0)` | Final learning rate factor |

-| momentum | `tune.uniform(0.6, 0.98)` | Momentum |

-| weight_decay | `tune.uniform(0.0, 0.001)` | Weight decay |

-| warmup_epochs | `tune.uniform(0.0, 5.0)` | Warmup epochs |

-| warmup_momentum | `tune.uniform(0.0, 0.95)` | Warmup momentum |

-| box | `tune.uniform(0.02, 0.2)` | Box loss weight |

-| cls | `tune.uniform(0.2, 4.0)` | Class loss weight |

-| hsv_h | `tune.uniform(0.0, 0.1)` | Hue augmentation range |

-| hsv_s | `tune.uniform(0.0, 0.9)` | Saturation augmentation range |

-| hsv_v | `tune.uniform(0.0, 0.9)` | Value (brightness) augmentation range |

-| degrees | `tune.uniform(0.0, 45.0)` | Rotation augmentation range (degrees) |

-| translate | `tune.uniform(0.0, 0.9)` | Translation augmentation range |

-| scale | `tune.uniform(0.0, 0.9)` | Scaling augmentation range |

-| shear | `tune.uniform(0.0, 10.0)` | Shear augmentation range (degrees) |

-| perspective | `tune.uniform(0.0, 0.001)` | Perspective augmentation range |

-| flipud | `tune.uniform(0.0, 1.0)` | Vertical flip augmentation probability |

-| fliplr | `tune.uniform(0.0, 1.0)` | Horizontal flip augmentation probability |

-| mosaic | `tune.uniform(0.0, 1.0)` | Mosaic augmentation probability |

-| mixup | `tune.uniform(0.0, 1.0)` | Mixup augmentation probability |

-| copy_paste | `tune.uniform(0.0, 1.0)` | Copy-paste augmentation probability |

+| Parameter | Value Range | Description |

+|-------------------|----------------------------|------------------------------------------|

+| `lr0` | `tune.uniform(1e-5, 1e-1)` | Initial learning rate |

+| `lrf` | `tune.uniform(0.01, 1.0)` | Final learning rate factor |

+| `momentum` | `tune.uniform(0.6, 0.98)` | Momentum |

+| `weight_decay` | `tune.uniform(0.0, 0.001)` | Weight decay |

+| `warmup_epochs` | `tune.uniform(0.0, 5.0)` | Warmup epochs |

+| `warmup_momentum` | `tune.uniform(0.0, 0.95)` | Warmup momentum |

+| `box` | `tune.uniform(0.02, 0.2)` | Box loss weight |

+| `cls` | `tune.uniform(0.2, 4.0)` | Class loss weight |

+| `hsv_h` | `tune.uniform(0.0, 0.1)` | Hue augmentation range |

+| `hsv_s` | `tune.uniform(0.0, 0.9)` | Saturation augmentation range |

+| `hsv_v` | `tune.uniform(0.0, 0.9)` | Value (brightness) augmentation range |

+| `degrees` | `tune.uniform(0.0, 45.0)` | Rotation augmentation range (degrees) |

+| `translate` | `tune.uniform(0.0, 0.9)` | Translation augmentation range |

+| `scale` | `tune.uniform(0.0, 0.9)` | Scaling augmentation range |

+| `shear` | `tune.uniform(0.0, 10.0)` | Shear augmentation range (degrees) |

+| `perspective` | `tune.uniform(0.0, 0.001)` | Perspective augmentation range |

+| `flipud` | `tune.uniform(0.0, 1.0)` | Vertical flip augmentation probability |

+| `fliplr` | `tune.uniform(0.0, 1.0)` | Horizontal flip augmentation probability |

+| `mosaic` | `tune.uniform(0.0, 1.0)` | Mosaic augmentation probability |

+| `mixup` | `tune.uniform(0.0, 1.0)` | Mixup augmentation probability |

+| `copy_paste` | `tune.uniform(0.0, 1.0)` | Copy-paste augmentation probability |

## Custom Search Space Example

diff --git a/docs/modes/predict.md b/docs/modes/predict.md

index 207e0ee..d7a62d6 100644

--- a/docs/modes/predict.md

+++ b/docs/modes/predict.md

@@ -571,7 +571,7 @@ You can use the `plot()` method of a `Result` objects to visualize predictions.

# Show the results

for r in results:

    im_array = r.plot() # plot a BGR numpy array of predictions

-    im = Image.fromarray(im[..., ::-1]) # RGB PIL image

+    im = Image.fromarray(im_array[..., ::-1]) # RGB PIL image

    im.show() # show image

    im.save('results.jpg') # save image

```

diff --git a/setup.py b/setup.py

index 129cebe..ce6673f 100644

--- a/setup.py

+++ b/setup.py

@@ -46,7 +46,7 @@ setup(

'mkdocs-material',

'mkdocstrings[python]',

'mkdocs-redirects', # for 301 redirects

- 'mkdocs-ultralytics-plugin>=0.0.24', # for meta descriptions and images, dates and authors

+ 'mkdocs-ultralytics-plugin>=0.0.25', # for meta descriptions and images, dates and authors

],

'export': [

'coremltools>=6.0,<=6.2',

diff --git a/ultralytics/__init__.py b/ultralytics/__init__.py

index 73b5d89..7cb9607 100644

--- a/ultralytics/__init__.py

+++ b/ultralytics/__init__.py

@@ -1,6 +1,6 @@

# Ultralytics YOLO 🚀, AGPL-3.0 license

-__version__ = '8.0.147'

+__version__ = '8.0.148'

from ultralytics.hub import start

from ultralytics.models import RTDETR, SAM, YOLO

diff --git a/ultralytics/utils/__init__.py b/ultralytics/utils/__init__.py

index a7ada26..b966c4d 100644

--- a/ultralytics/utils/__init__.py

+++ b/ultralytics/utils/__init__.py

@@ -329,7 +329,10 @@ def yaml_load(file='data.yaml', append_filename=False):

s = re.sub(r'[^\x09\x0A\x0D\x20-\x7E\x85\xA0-\uD7FF\uE000-\uFFFD\U00010000-\U0010ffff]+', '', s)

# Add YAML filename to dict and return

- return {**yaml.safe_load(s), 'yaml_file': str(file)} if append_filename else yaml.safe_load(s)

+ data = yaml.safe_load(s) or {} # always return a dict (yaml.safe_load() may return None for empty files)

+ if append_filename:

+     data['yaml_file'] = str(file)

+ return data

def yaml_print(yaml_file: Union[str, Path, dict]) -> None:
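
The `yaml_load` hunk above replaces a one-line return with an explicit fallback, because `yaml.safe_load()` may return `None` for an empty file and `None` cannot be unpacked with `**`. The following is a minimal sketch of that pattern, not the library code itself: `finalize_yaml` and `old_behaviour` are hypothetical helpers that take the already-parsed value as an argument, so the example runs without PyYAML installed.

```python
from typing import Optional


def finalize_yaml(parsed: Optional[dict], file: str = 'data.yaml', append_filename: bool = False) -> dict:
    """Hypothetical stand-in for the tail of yaml_load(); `parsed` plays the role of yaml.safe_load(s)."""
    data = parsed or {}  # None (empty file) becomes an empty dict
    if append_filename:
        data['yaml_file'] = str(file)
    return data


def old_behaviour(parsed: Optional[dict], file: str = 'data.yaml', append_filename: bool = False):
    """The pre-change one-liner, kept only for comparison."""
    return {**parsed, 'yaml_file': str(file)} if append_filename else parsed


# New behaviour: an empty file still yields a dict
empty = finalize_yaml(None, 'empty.yaml', append_filename=True)
loaded = finalize_yaml({'nc': 2}, 'data.yaml', append_filename=True)

# Old behaviour: unpacking None with ** raises TypeError
try:
    old_behaviour(None, 'empty.yaml', append_filename=True)
    old_failed = False
except TypeError:
    old_failed = True
```

With the fallback in place, callers can index or iterate the result without first checking for `None`, which is the guarantee the new inline comment in the hunk describes.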
